The sheer volume of AI-generated content being churned out every second can make it feel impossible to keep up. But rushing your AI content workflow often leads to vague, uninspired, or obviously AI-written results. The real challenge is finding the best LLM and workflow to create content that truly stands out. With so many options available, how do you find the best LLM for your specific content needs?

In this article, we will test and rank the leading LLMs for content creation, providing an overview of popular models and their writing abilities. We’ll perform a short blog writing test in the FlowHunt environment, ranking models on tone and language, readability, and keyword usage.

Understanding Large Language Models (LLMs)

Large Language Models (LLMs) are cutting-edge AI tools that reshape how we create and consume content. Before we go deeper into the differences between individual LLMs, you should understand what allows these models to create human-like text so effortlessly.

LLMs are trained on huge datasets, which helps them grasp context, semantics, and syntax. Using the patterns learned from this data, they predict the most likely next word (more precisely, the next token) in a sentence, piecing words together into coherent writing. One reason for their effectiveness is the transformer architecture. Its self-attention mechanism lets the neural network weigh how relevant each word in the input is to every other word, capturing both syntax and meaning. This is why LLMs can handle a wide range of complex tasks with ease.
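The self-attention idea can be sketched in a few lines of plain Python. This is a toy illustration of scaled dot-product attention, not how any of the models discussed here is actually implemented: the three 2-dimensional "token" vectors are made up, and real transformers learn separate query, key, and value projections and stack many attention heads and layers over vectors with thousands of dimensions.

```python
import math


def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Toy scaled dot-product attention over a list of token vectors.

    Each output row is a weighted average of the value vectors, where the
    weights express how strongly that token "attends" to every token.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this token's query with every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Blend the value vectors according to the attention weights.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs


# Made-up 2-dimensional "token" vectors. In self-attention, queries, keys,
# and values all come from the same sequence; here we reuse the raw vectors.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
result = self_attention(tokens, tokens, tokens)
for row in result:
    print([round(x, 3) for x in row])
```

Each output row mixes information from the whole sequence, weighted by relevance, which is what lets a model use surrounding context when predicting the next word.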

Importance of LLMs in Content Creation

Large Language Models (LLMs) have transformed the way businesses approach content creation. With their ability to produce personalized and optimized text, LLMs generate content like emails, landing pages, and social media posts using human language prompts.

Here’s what LLMs can help content writers with:

- Speed and Quality: LLMs offer fast and high-quality content production. This allows even smaller businesses without dedicated writing staff to stay competitive.

- Innovation: Trained on vast amounts of text, including countless examples of effective copy, LLMs help with marketing brainstorming and customer engagement strategies.

- Wide Range of Content: LLMs can effectively create diverse content types, from blog posts to whitepapers.

- Creative Writing: LLMs help with narrative development by analyzing existing narratives and suggesting plot ideas.

Moreover, the future of LLMs looks promising. Advances in the technology are likely to improve their accuracy and multimodal capabilities, and as their applications expand, they will likely have a significant influence on many industries.

Overview of Popular LLMs for Writing Tasks

Here’s a quick look at the popular LLMs we will be testing:

| Model | Unique Strengths |
|---|---|
| GPT-4 | Versatile in various writing styles |
| Claude 3 | Excels in creative and contextual tasks |
| Llama 3.2 | Known for efficient text summarization |
| Grok | Known for its laid-back and humorous tone |

When choosing an LLM, it’s essential to consider your content creation needs. Each model offers something unique, from handling complex tasks to generating AI-driven creative content. Before we test them, let’s briefly summarize each to see how it can benefit your content creation process.

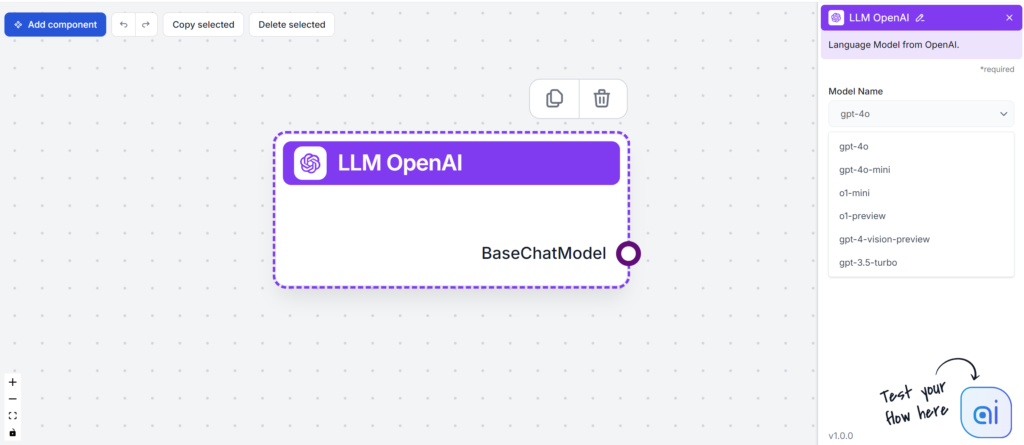

OpenAI GPT-4: Features and Performance Review

GPT-4, developed by OpenAI, is one of the most advanced models in the Generative Pre-trained Transformer series. It is a very large model: OpenAI has not disclosed its parameter count, though it is widely rumored to be around 1.7 trillion. This scale contributes to its ability to generate coherent and contextually relevant text.

Key Features:

- Multimodal Capabilities: Unlike its predecessors, GPT-4 can accept images as well as text as input, although its output is text.

- Contextual Understanding: The model understands complex prompts, allowing for nuanced responses tailored to specific contexts.

- Customizable Outputs: Users can specify tone and task requirements through a system message, making it versatile for various applications.
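As a sketch of what a system message looks like, assuming the chat format used by OpenAI's Chat Completions API (a `messages` list of dicts with `role` and `content` keys), the hypothetical helper below only builds the message list; it does not call any API. The tone and title are borrowed from the test prompt used later in this article.

```python
def build_messages(tone, task):
    """Hypothetical helper: return a chat-style message list where a system
    message fixes the tone, followed by the user's actual request."""
    return [
        # The system message sets persistent instructions such as tone.
        {"role": "system", "content": f"You are a content writer. Write in a {tone} tone."},
        # The user message carries the concrete writing task.
        {"role": "user", "content": task},
    ]


messages = build_messages(
    tone="practical and approachable",
    task='Write a blog post titled "10 Easy Ways to Live Sustainably Without Breaking the Bank."',
)
for m in messages:
    print(m["role"], "->", m["content"])
```

Keeping the tone in the system message means you can swap the user request freely while the style instructions stay the same for every request.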

Performance Metrics:

- High-Quality Outputs: GPT-4 is particularly effective in creative writing, summarization, and translation tasks, delivering results that often meet or exceed human standards.

- Real-World Application: In a practical setting, a digital marketing agency utilized GPT-4 for personalized email campaigns, leading to a 25% increase in open rates and a 15% boost in click-through rates.

Strengths:

- Coherence and Relevance: The model consistently produces text that is coherent and contextually appropriate, making it a reliable choice for content creation.

- Extensive Training: Its training on diverse datasets allows for fluency in multiple languages and a broad understanding of various topics.

Challenges:

- Computational Demands: The high resource requirements may limit accessibility for some users.

- Potential for Verbosity: At times, GPT-4 may generate overly verbose and vague responses.

Overall, GPT-4 is a powerful tool for businesses looking to enhance their content creation and data analysis strategies.

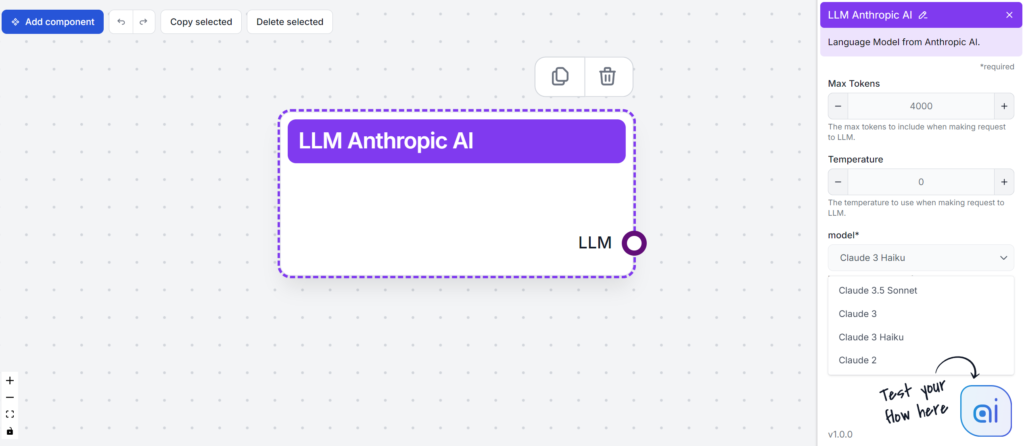

Anthropic Claude 3: Features and Performance Review

Claude 3 is an increasingly popular language model, often praised for its performance on agent-style, multi-step tasks. Still, what made many people fall in love with Claude is its greater attention to data ethics and privacy. Claude 3 comes in three models: Opus, Sonnet, and Haiku. If you're familiar with these terms (a major work, a 14-line poem, and a three-line poem), you'll quickly understand which model is the most advanced.

Key Features:

- Contextual Understanding: Claude 3 excels at maintaining coherence and consistency across lengthy narratives, adapting its language to fit specific contexts.

- Emotional Intelligence: The model can analyze emotional undertones, creating content that resonates with readers and captures complex human experiences.

- Genre Versatility: Claude 3 can seamlessly write across various genres, from literary fiction to poetry and screenwriting.

Strengths

- Imaginative Creativity: Unlike many language models, Claude 3 generates original ideas and storylines, pushing the boundaries of traditional storytelling.

- Engaging Dialogue: The model produces authentic and relatable dialogue, enhancing character development and interaction.

- Collaborative Tool: Claude 3 works well as a collaborative partner, letting writers develop and refine ideas together with the model.

Challenges

- Internet access: Unlike other current leading models, Claude cannot access the internet.

- Text generation only: While competitors are introducing models that create images, video, and voice content, Anthropic's offering remains limited to generating text.

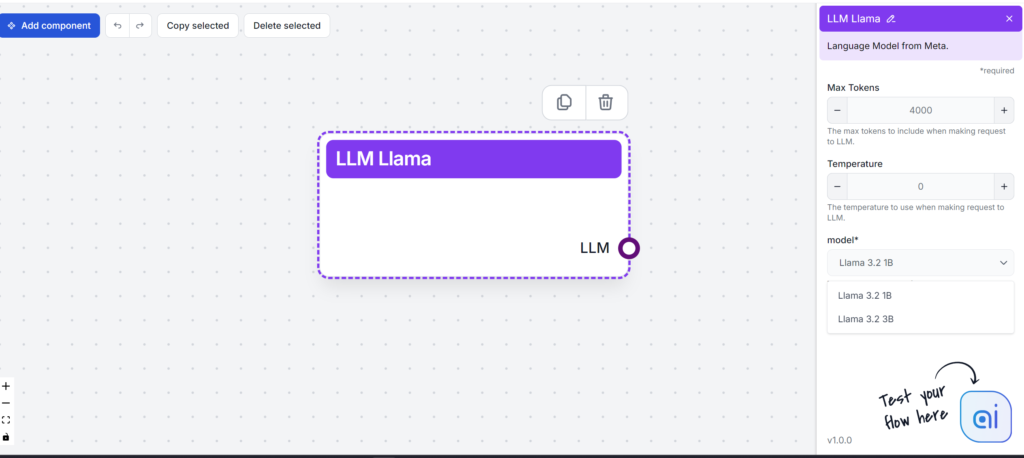

Meta Llama 3: Features and Performance Review

Llama 3, developed by Meta, represents a significant advancement in open-source large language models (LLMs) designed to facilitate various natural language processing tasks. Its Llama 3.1 update, released on July 23, 2024, drew particular interest for its powerful capabilities and versatility.

Key Features

- Parameter Variants: Available in sizes of 8 billion, 70 billion, and an impressive 405 billion parameters.

- Extended Context Length: Supports up to 128,000 tokens, enhancing performance on long and complex texts.
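A 128,000-token context window is hard to picture in words. The sketch below estimates whether a document fits, assuming the common rule of thumb of roughly four characters of English text per token; real counts depend on each model's own tokenizer, and the `reserved_for_output` budget is a hypothetical parameter added to leave room for the model's reply.

```python
import math

# Rule of thumb (an assumption, not an exact tokenizer): about 4 characters
# of English text per token.
CHARS_PER_TOKEN = 4
CONTEXT_WINDOW = 128_000  # tokens, per the figure quoted above


def estimated_tokens(text):
    # Round up so partial tokens still count.
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(text, reserved_for_output=4_000):
    # Hypothetical budget: leave room for the generated answer as well.
    return estimated_tokens(text) + reserved_for_output <= CONTEXT_WINDOW


print(fits_in_context("a" * 300_000))
print(fits_in_context("a" * 600_000))
```

By this estimate, a 300,000-character manuscript (about 75,000 tokens) fits comfortably, while a 600,000-character one (about 150,000 tokens) would not.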

Strengths

- Open-Source Accessibility: Available for free, encouraging widespread use and experimentation for research and commercial applications.

- Synthetic Data Generation: The 405 billion parameter model excels in generating synthetic data, which is beneficial for training smaller models and knowledge distillation.

- Integration Across Applications: Powers AI features in Meta’s applications, making it a practical tool for businesses looking to scale generative AI solutions.

Challenges

- Resource Intensity: Larger models may require significant computational resources, limiting accessibility for smaller organizations.

- Bias and Ethical Considerations: As with any AI model, there remains a risk of inherent biases, necessitating ongoing evaluation and refinement.

Llama 3 stands out as a robust and versatile open-source LLM, promising advancements in AI capabilities while also presenting certain challenges for users.

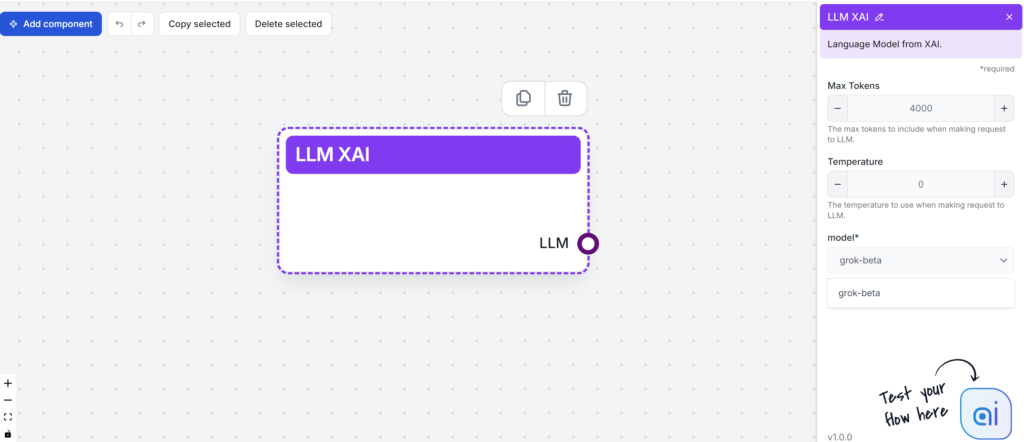

xAI Grok: Features and Performance Review

The Grok model, developed by Elon Musk’s xAI, is an AI model and chatbot designed to utilize data from X (formerly Twitter). Although not as prominent as other models in the landscape, it garners media attention due to its association with Musk and the ongoing discourse around AI. Another eye-grabbing feature is the “Fun mode,” which focuses on casual, humorous replies with fewer guidelines.

Key Features

- Data Source: Trained on content from X (formerly Twitter).

- Context Window: Capable of processing up to 128,000 tokens.

Strengths

- Integration Potential: Grok can be integrated into social media platforms, enhancing user interactions.

- User Engagement: Designed for casual conversational applications.

Challenges

- Unknown Parameters: Lack of transparency regarding its model size and architecture hampers performance assessment.

- Comparative Performance: Does not consistently outperform other models in terms of language tasks and capabilities.

In summary, while xAI Grok provides interesting features and has the advantage of media visibility, it faces significant challenges in popularity and performance within the competitive landscape of language models.

Testing The Best LLMs for Blog Content Writing

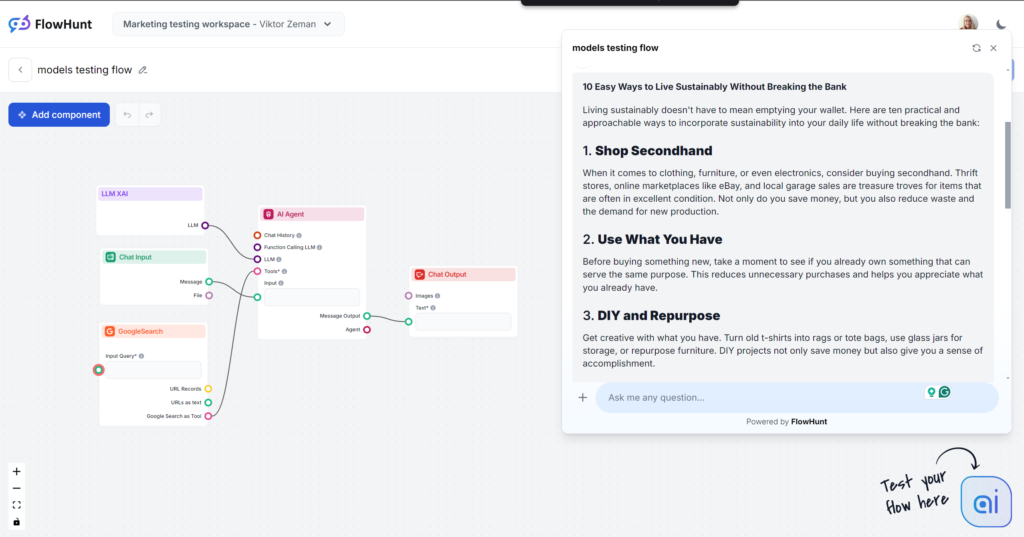

Let's jump right into the testing. We'll rank the models on a basic blog-writing task, running every test in FlowHunt and changing only the LLM.

In this task, we will focus mainly on:

- Readability

- Tone consistency

- Originality of language

- Keyword usage

Our test prompt is very simple, setting the tone and only lightly mentioning the structure. Of course, a more detailed prompt or an entire SEO outline would work much better. But for the things we’re analyzing, this will do just fine:

Write a blog post titled "10 Easy Ways to Live Sustainably Without Breaking the Bank."

The tone should be practical and approachable, with a focus on actionable tips that are realistic for busy individuals. Highlight "sustainability on a budget" as the main keyword. Include examples for everyday scenarios like grocery shopping, energy use, and personal habits. Wrap up with an encouraging call-to-action for readers to start with one tip today.

Note: The Flow is limited to producing approximately 500 words of output. If the outputs feel rushed or shallow, that is intentional.

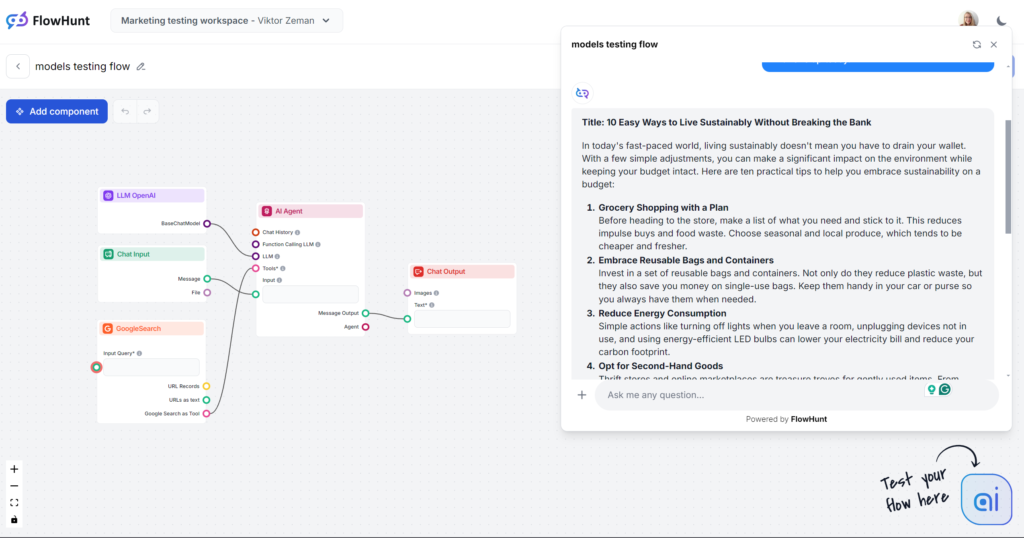

OpenAI GPT-4o

If this were a blind test, the “In today’s fast-paced world…” opening line would give it away immediately. You're likely quite familiar with this model's writing, as it's not only the most popular choice but also powers many third-party AI writing tools. GPT-4o is always a safe choice for general content, but be prepared for vagueness and wordiness.

Tone and Language

Looking past the painfully overused opening sentence, GPT-4o did exactly what we expected. It wouldn't fool anyone into thinking a human wrote it, but it's still a decently structured article that undeniably follows our prompt. The tone really is practical and approachable, immediately focusing on actionable tips instead of vague rambling.

Keyword usage

GPT-4o fared well in the keyword usage test. Not only did it use the provided main keyword, but it also worked in similar phrases and other fitting keywords.

Readability

On the Flesch Reading Ease scale, this output scores 51.2, which corresponds to a 10th-12th grade reading level (fairly difficult). About a point lower, and it would fall to the college level. With such a short output, even the keyword “sustainability” itself probably has a noticeable effect on readability. That being said, there's certainly plenty of room for improvement.
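The Flesch Reading Ease score is computed as 206.835 - 1.015 × (words per sentence) - 84.6 × (syllables per word), and higher scores mean easier text. Here is a minimal sketch, assuming a naive vowel-group syllable counter; that heuristic is an approximation, and dedicated readability tools use dictionaries, so this code will not reproduce the exact scores reported in this test.

```python
import re

# Standard interpretation bands for the Flesch Reading Ease score.
BANDS = [
    (90, "5th grade (very easy)"),
    (80, "6th grade (easy)"),
    (70, "7th grade (fairly easy)"),
    (60, "8th-9th grade (plain English)"),
    (50, "10th-12th grade (fairly difficult)"),
    (30, "college (difficult)"),
]


def count_syllables(word):
    """Rough estimate: count groups of consecutive vowels (an assumption)."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # A trailing silent 'e' usually adds no syllable ("make"), unlike "-le" ("simple").
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_reading_ease(text):
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not words or not sentences:
        raise ValueError("text must contain at least one word")
    syllables = sum(count_syllables(w) for w in words)
    return 206.835 - 1.015 * (len(words) / len(sentences)) - 84.6 * (syllables / len(words))


def grade_band(score):
    for threshold, label in BANDS:
        if score >= threshold:
            return label
    return "college graduate (very difficult)"


# Map the scores reported in this test to their reading levels.
for score in (51.2, 53.4, 61.4):
    print(score, "->", grade_band(score))
```

The formula rewards short sentences and short words, which is why a six-syllable keyword like "sustainability" can noticeably pull down the score of a short post.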

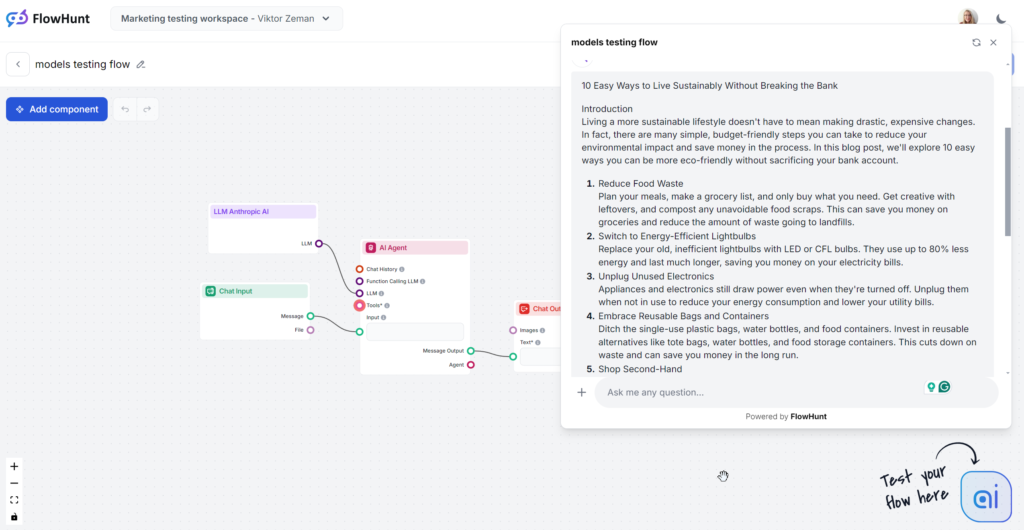

Anthropic Claude 3

The Claude output we analyzed comes from the mid-range Sonnet model, which is often said to be the best option for content. It reads well and sounds noticeably more human than GPT-4o or Llama. Claude is a great fit for clean, simple content that delivers information efficiently without being as wordy as GPT or as flashy as Grok.

Tone and Language

One thing that sets Claude apart is its simple, relatable, human-like answers. Just look at the introductory sentences: they're nothing special, but they sound much more human and relatable than GPT's or Llama's. The tone stays practical and approachable, and the post gets straight to actionable tips.

Keyword usage

Claude was the only model to largely ignore the keyword part of the prompt, using the keyword in only 1 of 3 outputs. When it did include the keyword, it placed it in the conclusion, and the usage felt somewhat forced.

Readability

Claude Sonnet scored high on the Flesch Reading Ease scale, landing in the 8th-9th grade range (plain English), just a couple of points behind Grok. While Grok shifted its whole tone and vocabulary to achieve this, Claude used vocabulary similar to GPT-4o's. So what made its readability so good? Shorter sentences, everyday words, and no vague filler.

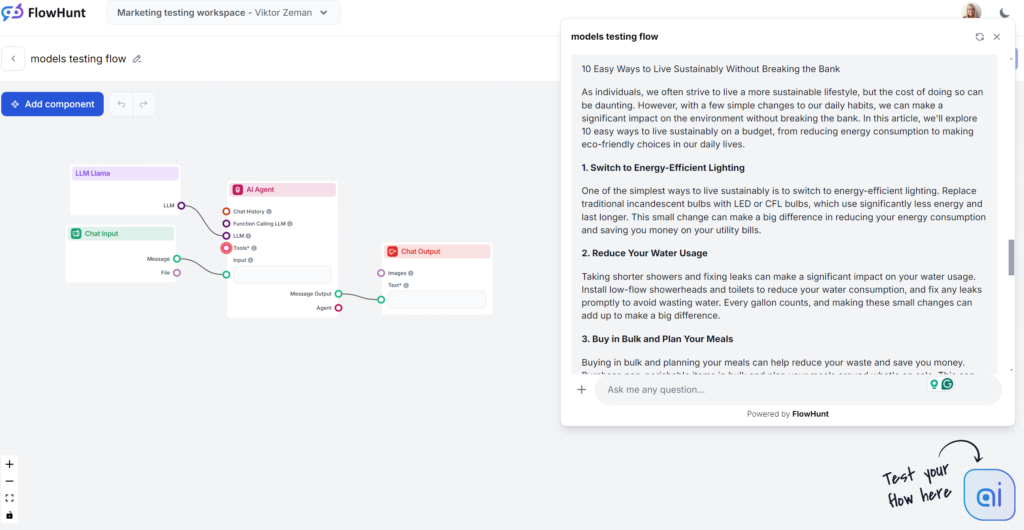

Meta Llama

Llama's strongest point was keyword usage. Its writing style, on the other hand, was uninspired and a bit wordy, though still less boring than GPT-4o's. Llama is like GPT-4o's cousin: a safe content choice with a slightly wordy and vague writing style. It's a great choice if you generally like the writing style of OpenAI models but want to skip the classic GPT phrases.

Tone and Language

Llama-generated articles read a lot like GPT-4o's. We don't find the same tell-tale phrases, but the wordiness and vagueness are comparable. That said, the tone is practical and approachable, and the post moves straight to actionable tips instead of rambling.

Keyword usage

Llama is the winner in the keyword usage test. The prompt only asked it to use the keyword, without specifying how. Llama used it more than once, including in the introduction. On top of that, it very naturally worked in similar phrases and other fitting keywords.
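The keyword checks in this test were done by reading the outputs, but the basic counts are easy to automate. A rough sketch, assuming paragraphs are separated by blank lines; `keyword_report` and the sample text are hypothetical, and the exact-phrase match will not credit the similar phrases and related keywords the models also used.

```python
import re


def keyword_report(article, keyword):
    """Hypothetical helper: count exact (case-insensitive) keyword matches and
    check whether the keyword appears in the first and last paragraphs."""
    paragraphs = [p for p in article.split("\n\n") if p.strip()]
    pattern = re.compile(re.escape(keyword), re.IGNORECASE)
    return {
        "total": len(pattern.findall(article)),
        "in_intro": bool(paragraphs) and bool(pattern.search(paragraphs[0])),
        "in_conclusion": bool(paragraphs) and bool(pattern.search(paragraphs[-1])),
    }


# Made-up three-paragraph sample for illustration.
sample = (
    "Sustainability on a budget starts with small habits.\n\n"
    "Plan meals, buy in bulk, and switch off unused devices.\n\n"
    "Pick one tip today and make sustainability on a budget part of your routine."
)
print(keyword_report(sample, "sustainability on a budget"))
```

Placement checks like `in_intro` capture the difference the test noticed between Llama, which used the keyword early, and models that only mentioned it in the conclusion.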

Readability

On the Flesch Reading Ease scale, this output scores 53.4, just slightly better than GPT-4o's 51.2, which still places it at a 10th-12th grade level (fairly difficult). As with GPT-4o, the short length means even the keyword “sustainability” likely has a noticeable effect on the score. That being said, there's certainly room for improvement.

xAI Grok

Grok was a huge surprise, especially in tone and language. With a very natural and laid-back tone, it felt like you were getting some quick tips from a close friend. If laid-back and snappy is your style of writing, Grok is definitely the choice for you.

Tone and Language

The output reads very well. The language is natural, the sentences are snappy, and Grok uses idioms well. The model stays true to its primary tone and pushes the envelope on human-like text. That said, our other tests showed that Grok's laid-back tone isn't always a good fit for B2B and SEO-driven content, where it can feel out of place.

Keyword usage

Grok used the keyword we asked for, but only in the conclusion. To be fair, our prompt asked for a single keyword without specifying anything else, so Grok passed this test too, just not as well as most other models. They placed the keyword more effectively and added other relevant keywords, while Grok focused more on the flow of the language.

Readability

With its easy-going language, Grok passed the Flesch Reading Ease test with flying colors. It scored 61.4, which falls in 8th-9th grade (plain English) territory, the optimal spot for making topics accessible to the general population. The leap in readability is almost tangible: just go over the output in the screenshot. It reads much better than the other models' output and feels very natural.

Ethical Considerations in Using LLMs

The power of LLMs hinges on the quality of training data, which can sometimes be biased or inaccurate, leading to the spread of misinformation. It is vital to fact-check and vet AI-generated content for fairness and inclusivity. When experimenting with various models, you also need to remember that each model has a different approach to input data privacy and limiting harmful output.

To guide ethical use, organizations must establish frameworks addressing data privacy, bias mitigation, and content moderation. This includes regular dialogue between AI developers, writers, and legal experts. Consider this list of ethical concerns:

- Bias in Training Data: LLMs can perpetuate existing biases.

- Fact-Checking: Human oversight is necessary to verify AI outputs.

- Misinformation Risks: AI can generate plausible-sounding falsehoods.

The choice of LLM should align with an organization's ethical content guidelines. Open-source LLMs in particular, but also proprietary models with looser guidelines, should be evaluated for potential misuse.

Limitations of Current LLM Technology

Bias, inaccuracy, and hallucinations remain major issues with AI-generated content. The built-in guidelines meant to limit these problems often make LLM output vague and low-value. Businesses often need extra training and security measures to address these issues, but spending time and resources on training is often out of reach for small businesses. An alternative is to add these capabilities to general-purpose models through third-party tools like FlowHunt.

FlowHunt allows you to give specific knowledge, internet access, and new capabilities to classic base models. This way, you can choose the right model for the task without base model limitations or countless subscriptions.

Another major issue is the complexity of these models. With billions of parameters, they can be tricky to manage, understand, and debug. FlowHunt gives you much more control than plain chat prompts ever could: you add individual capabilities as blocks and tweak them to build your own library of ready-to-go AI tools.

The Future of LLMs in Content Writing

The future of large language models (LLMs) in content writing is promising and exciting. As these models advance, they are expected to deliver greater accuracy and less bias in content generation. This means writers will be able to produce reliable, human-like text with AI.

LLMs will not only handle text but also become proficient in multimodal content creation. This includes managing both text and images, boosting creative content for diverse industries. With larger and better-filtered datasets, LLMs will craft more dependable content and refine writing styles.

But for now, LLMs can’t do that on their own, and these capabilities are divided among various companies and models, each fighting for your attention and money. FlowHunt brings them all together and lets you benefit from the capabilities within a single subscription.

Conclusion: Choosing the Right LLM for Your Needs

In conclusion, GPT-4o remains the most popular choice. It always delivers despite its many shortcomings. If you like the way GPT writes but there are only so many “In today’s fast-paced world…” introductions you can take, Meta's Llama is for you. It writes very similarly, but its choice of words is a bit fresher.

If you're looking for simple, clean, no-fluff content, try Anthropic's Claude 3. It reads well but doesn't step out of line too much. If you're looking for something bolder and laid-back, give xAI's Grok a try, but be wary of its looser guidelines and the possibility of misinformation from unvetted X data.

All of these tips for picking the best model are easier said than done until you get a hands-on feel for how these models create content. Try them all at once with a single subscription, and gain superior control over what gets generated and how with FlowHunt. Try it now!