In this blog post, we will explore how you can create chatbots and assistants that answer questions accurately, with far fewer hallucinations. Specifically, we will create a RIG (Retrieval Interleaved Generation) chatbot, an approach recently introduced in research from Google. In this approach, the LLM fact-checks its own response against a trusted source. We will adapt this approach to your own documents instead of Data Commons, the knowledge source used in the original paper.

What is RIG (Retrieval Interleaved Generation)?

Retrieval Interleaved Generation, or RIG for short, is an AI technique that interleaves retrieving information with generating an answer. With RAG (Retrieval Augmented Generation), relevant documents are retrieved once, before the model starts writing. With RIG, the model drafts its answer and marks the facts that need checking, such as statistics, and those facts are then looked up in a trusted source and corrected. Because individual claims are checked against an external source, the system can draw on knowledge beyond the model's training data and give more precise, relevant responses. The main aim of RIG is to reduce mistakes and make AI outputs more trustworthy, which makes it a useful tool for developers who want to improve the accuracy of their AI applications. RIG is therefore an alternative to RAG for generating AI-powered answers grounded in a context.

How does RIG (Retrieval Interleaved Generation) work?

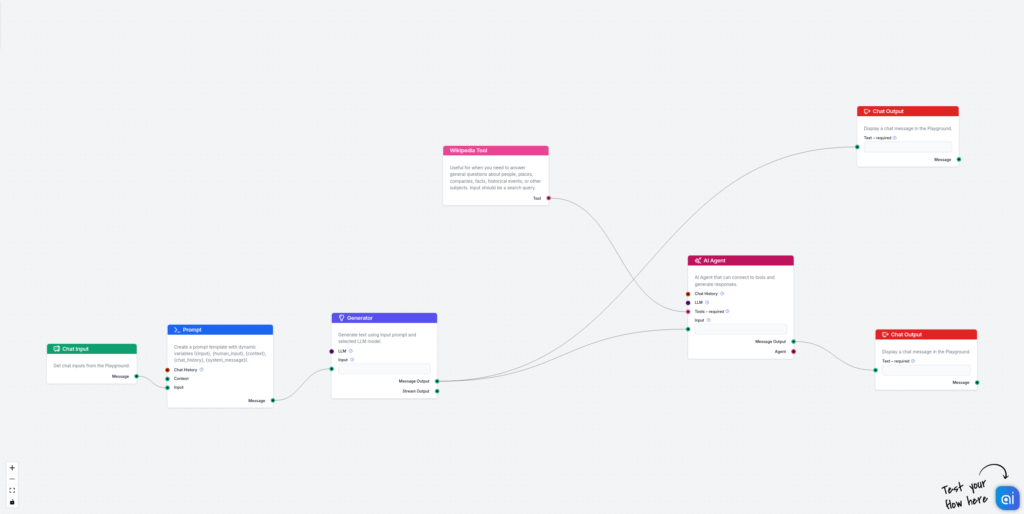

Here is how RIG works. The following stages are inspired by the original blog post, which focuses on general use cases and uses the Data Commons API. In most real use cases, however, you will want to combine a general knowledge base (e.g., Wikipedia or Data Commons) with your own data. This is how you can use the power of flows in FlowHunt to build a RIG chatbot on top of both your own knowledge base and a general knowledge base like Wikipedia.

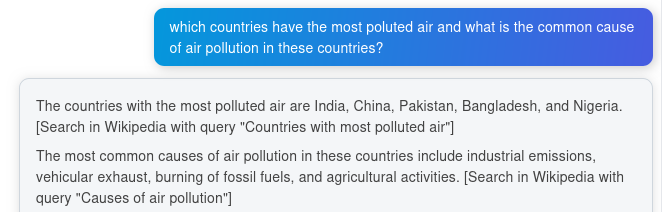

First, the user query is fed to a generator, which produces a sample answer and, after each section, a note on which source and query should be used to verify that section. At this stage, the generator may well produce a well-structured answer that is nonetheless filled with hallucinated data and statistics.

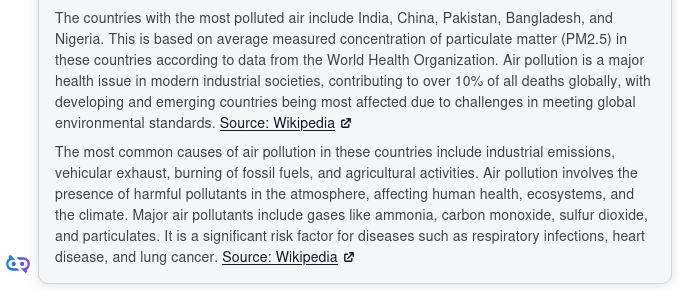

But that’s okay: in the next phase, an AI Agent receives exactly this output, looks up each section in Wikipedia using the suggested query, corrects the data in that section, and adds the source to each corresponding section.

As you can see, this method can significantly improve a chatbot's accuracy, and it is a good way to ensure that each generated section has a source and is grounded in fact 😉
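To make the two stages concrete, here is a minimal Python sketch of the draft-then-verify loop, independent of FlowHunt. `rig_answer`, `generate_draft`, `retrieve` and `refine` are hypothetical names: in a real flow, the first stage would be your LLM generator, `retrieve` the Wikipedia tool or a document retriever, and `refine` the AI Agent rewriting a section. The bracketed marker format is an assumption based on the prompt used later in this post.

```python
import re
from typing import Callable

# Assumed marker format, e.g. [Search in Wikipedia with query "metric for renewable energy"]
MARKER = re.compile(r'\[Search in Wikipedia (?:with query )?"([^"]+)"\]', re.IGNORECASE)


def rig_answer(
    question: str,
    generate_draft: Callable[[str], str],        # stage 1: LLM drafts an answer (may hallucinate)
    retrieve: Callable[[str], tuple[str, str]],  # returns (evidence, source_url) for a query
    refine: Callable[[str, str], str],           # stage 2: rewrites one section using evidence
) -> str:
    draft = generate_draft(question)
    refined_sections = []
    last_end = 0
    for marker in MARKER.finditer(draft):
        # The section to verify is the text between the previous marker and this one.
        section = draft[last_end:marker.start()].strip(" ,.")
        evidence, source = retrieve(marker.group(1))
        # Keep the source next to the section it supports, not at the end.
        refined_sections.append(f"{refine(section, evidence)} [{source}]")
        last_end = marker.end()
    # Text after the last marker has no query attached, so it is kept unverified.
    tail = draft[last_end:].strip(" ,.")
    if tail:
        refined_sections.append(tail)
    return "\n".join(refined_sections)
```

Passing each stage in as a function keeps the sketch testable with stubs; swapping `retrieve` for a search over your own documents is how the same loop would cover an internal knowledge base.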

How to Create a RIG Chatbot in FlowHunt

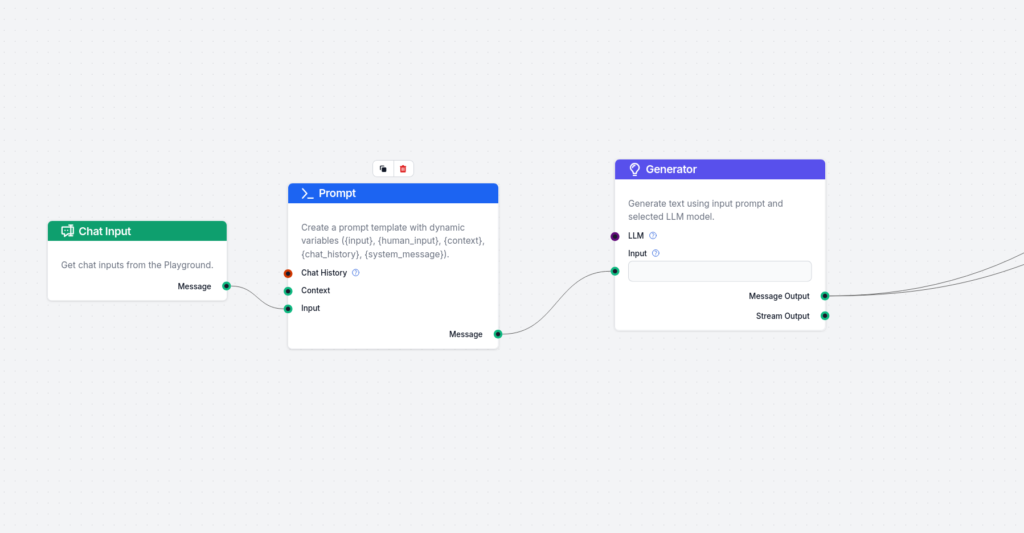

Add the first stage (dumb sample answer generator): The first part of the flow consists of a Chat Input, a prompt template, and a generator. Simply connect them together. The most important part is the prompt template. I have used the following:

You are given the user's query. Based on the user's query, generate the best possible answer with fake data or percentages. After each section of your answer, specify which source to use in order to fetch the correct data and refine that section with it. You can either choose the internal knowledge source, in case the data is specific to the user's product or service, or use Wikipedia as a general knowledge source.

---

Example Input: Which countries lead in renewable energy, what is the best metric for measuring this, and what is that metric's value for the top country?

Example output: The top countries in renewable energy are Norway, Sweden, Portugal, USA [Search in Wikipedia with query "Top Countries in renewable Energy"], the usual metric for renewable energy is capacity factor [Search in Wikipedia with query "metric for renewable energy"] and the number one country has a 20% capacity factor [Search in Wikipedia with query "biggest capacity factor"]

---

Let's begin now!

User Input: {input}

Here, we used few-shot prompting so that the generator produces its output in exactly the format we want.
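Because the few-shot example fixes the shape of the generator's output, the next stage can locate the sections to check mechanically. Below is a small Python parser for that output, a sketch that assumes the `[Search in Wikipedia with query "..."]` form from the example (and tolerates the variant without `with query`). The `[Search in Internal knowledge with query "..."]` form is a hypothetical extension: the prompt names an internal source but does not show its format. `Section` and `parse_draft` are illustrative names, not part of FlowHunt.

```python
import re
from dataclasses import dataclass

# Assumed annotation grammar, inferred from the example output:
#   [Search in Wikipedia with query "..."]
#   [Search in Internal knowledge with query "..."]   (hypothetical internal-source form)
ANNOTATION = re.compile(
    r'\[\s*search in (wikipedia|internal knowledge)\s+(?:with query\s+)?"([^"]+)"\s*\]',
    re.IGNORECASE,
)


@dataclass
class Section:
    text: str    # draft text that may contain hallucinated figures
    source: str  # "wikipedia" or "internal knowledge"
    query: str   # search query the fact-checker should run


def parse_draft(draft: str) -> list[Section]:
    sections = []
    start = 0
    for m in ANNOTATION.finditer(draft):
        # Each annotation closes the section of text that precedes it.
        text = draft[start:m.start()].strip(" ,.;")
        sections.append(Section(text, m.group(1).lower(), m.group(2)))
        start = m.end()
    # Any trailing text without an annotation is ignored in this sketch.
    return sections
```

Run on the example output above, this yields three sections with the queries "Top Countries in renewable Energy", "metric for renewable energy" and "biggest capacity factor", each tagged with the `wikipedia` source.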

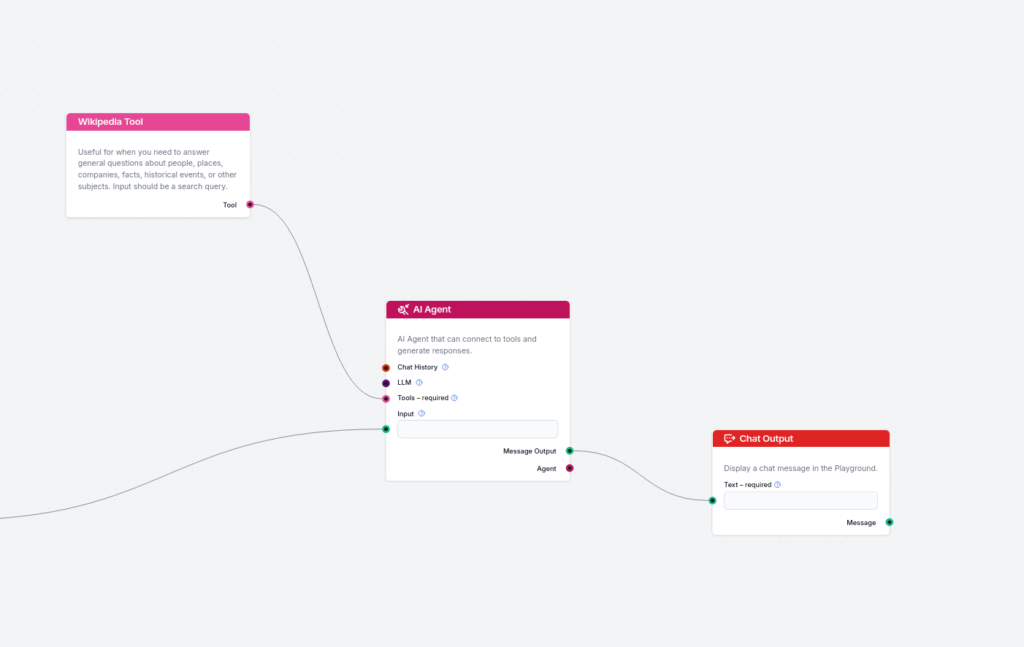

Add the fact-check part: Now we will add the second part, which fact-checks the sample answer and refines it against a real source of truth. Here we use Wikipedia and an AI Agent, since it is easy to connect Wikipedia to an AI Agent, and an agent is more flexible than a simple generator. Connect the output of the generator to the AI Agent, and connect the Wikipedia tool to the AI Agent. Here is the goal I use for the AI Agent:

You are given a sample answer to the user's question. The sample answer might include wrong data. Use the Wikipedia tool in the given sections with the specified query, and use Wikipedia's information to refine the answer. Include the Wikipedia link in each of the specified sections. FETCH DATA FROM YOUR TOOLS AND REFINE THE ANSWER IN THAT SECTION. ADD THE LINK TO THE SOURCE IN THAT PARTICULAR SECTION AND NOT AT THE END.

In the same way, you can add a Document Retriever to the AI Agent, which connects to your own custom knowledge base to retrieve documents.
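Outside FlowHunt, the lookup the AI Agent performs can be approximated with the public MediaWiki Action API (`action=query&list=search`). The Python sketch below is not how FlowHunt's Wikipedia tool is implemented: `search_url`, `page_url`, `wikipedia_lookup` and `route` are hypothetical helpers, the User-Agent string is a placeholder, and the `internal` callable stands in for whatever Document Retriever you connect to your own knowledge base.

```python
import html
import json
import re
import urllib.parse
import urllib.request
from typing import Callable

API = "https://en.wikipedia.org/w/api.php"
Lookup = Callable[[str], tuple[str, str]]  # query -> (evidence, source_url)


def search_url(query: str, limit: int = 1) -> str:
    # MediaWiki full-text search; results arrive as JSON under query.search
    params = {"action": "query", "list": "search", "srsearch": query,
              "srlimit": limit, "format": "json"}
    return f"{API}?{urllib.parse.urlencode(params)}"


def page_url(title: str) -> str:
    # Article URLs use underscores in place of spaces
    return "https://en.wikipedia.org/wiki/" + urllib.parse.quote(title.replace(" ", "_"))


def wikipedia_lookup(query: str) -> tuple[str, str]:
    # Placeholder User-Agent; Wikimedia asks API clients to identify themselves
    req = urllib.request.Request(search_url(query), headers={"User-Agent": "rig-sketch/0.1"})
    with urllib.request.urlopen(req, timeout=10) as resp:
        hits = json.load(resp)["query"]["search"]
    if not hits:
        return "", ""
    top = hits[0]
    # Snippets contain <span class="searchmatch"> highlight tags and HTML entities
    snippet = html.unescape(re.sub(r"<[^>]+>", "", top["snippet"]))
    return snippet, page_url(top["title"])


def route(source: str, query: str, internal: Lookup, wiki: Lookup = wikipedia_lookup) -> tuple[str, str]:
    # Product- or company-specific facts go to your own retriever; everything else to Wikipedia
    return internal(query) if source == "internal knowledge" else wiki(query)
```

Routing on the source named in each annotation mirrors the prompt's choice between the internal knowledge source and Wikipedia, and returning the page URL alongside the evidence is what lets the link be placed in the section it supports.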


Understanding Retrieval-Augmented Generation (RAG)

To fully appreciate RIG, it helps to first look at its predecessor, Retrieval-Augmented Generation (RAG). RAG combines a retrieval system that fetches relevant data with a model that generates coherent text: documents are retrieved for the question, and the model then answers using them as context. The shift from RAG to RIG is a big step forward. Instead of retrieving once up front, RIG interleaves retrieval with generation, checking and correcting individual claims as the answer is produced. This step-by-step refinement lets AI systems draw on large external knowledge sources while keeping their responses accurate, coherent, and relevant.
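For contrast with the RIG flow described earlier, here is plain RAG as a minimal Python sketch: retrieval happens once, from the question alone, and the retrieved text is placed in the prompt before the model generates anything. Word overlap is used only as a simple stand-in for the embedding-based search a real Document Retriever would typically use, and `generate` is a hypothetical placeholder for your LLM call.

```python
import re
from typing import Callable


def tokens(text: str) -> set[str]:
    # Lowercased alphanumeric words; a crude stand-in for embeddings
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    # Rank documents by how many query words they share (stable for ties)
    q = tokens(query)
    return sorted(docs, key=lambda d: len(q & tokens(d)), reverse=True)[:k]


def rag_answer(query: str, docs: list[str], generate: Callable[[str], str], k: int = 2) -> str:
    # RAG: retrieve first, then generate once with the context in the prompt
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    prompt = f"Answer using only this context:\n{context}\n\nQuestion: {query}"
    return generate(prompt)
```

The key difference is ordering: here nothing is checked after generation, whereas in RIG each drafted claim triggers its own retrieval.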

The Future of Retrieval Interleaved Generation

The future of Retrieval Interleaved Generation looks promising, with many research directions still open. As AI continues to grow, RIG could play a key role in machine learning and AI applications by changing how systems retrieve and generate information. Ongoing research may make RIG easier to integrate into various AI frameworks, leading to more efficient, accurate, and reliable AI systems.

In conclusion, Retrieval Interleaved Generation is a significant step forward in the quest for AI accuracy. By blending retrieval and generation, RIG can improve the factual accuracy of Large Language Models, may help with multi-step reasoning, and opens up promising possibilities in areas such as education and fact-checking. As RIG continues to evolve, it is likely to drive further innovation and become a valuable tool for building smarter, more reliable AI systems.
