OpenAI just released a new model called OpenAI o1, part of the o1 series of models. The main change in these models is the ability to think before answering a user's query. In this blog, we will dive deep into the key changes in OpenAI o1, the new paradigms these models use, and how this new model can significantly increase RAG accuracy. We will compare a simple RAG flow using OpenAI GPT-4o and the OpenAI o1 model. So let's get into it.

How is OpenAI o1 different from previous models?

Large-Scale Reinforcement Learning

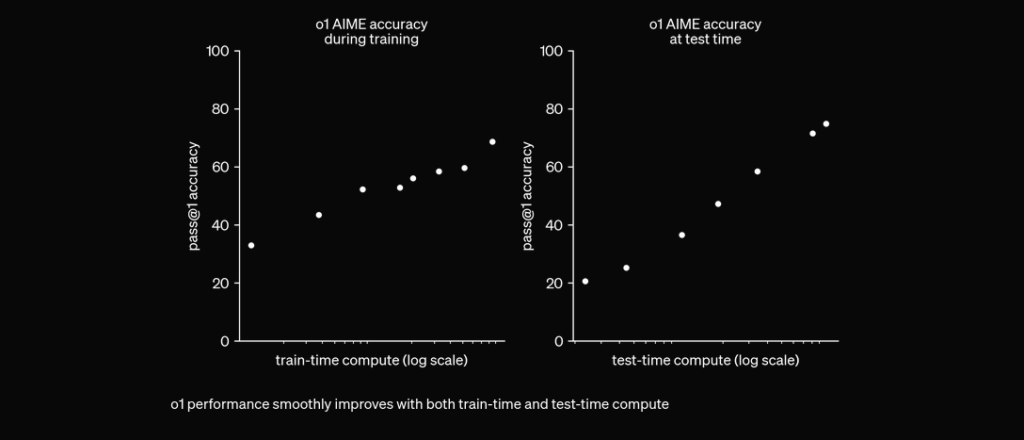

The o1 model leverages large-scale reinforcement learning algorithms during its training process. This approach enables the model to develop a robust “Chain of Thought,” allowing it to think more deeply and strategically about problems. By continuously optimizing its reasoning pathways through reinforcement learning, the o1 model significantly improves its ability to analyze and solve complex tasks efficiently.

Chain of Thought Integration

Previously, chain of thought proved to be a useful prompt engineering mechanism for making an LLM reason on its own and answer complex questions with a step-by-step plan. With o1 models, this step comes out of the box: it is integrated natively into the model at inference time, which makes it useful for mathematical and coding problem-solving tasks.
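To make the contrast concrete, here is a minimal sketch of the difference at the prompt level. The function names and the instruction wording are hypothetical, chosen only for illustration. With earlier models you would typically wrap the question in an explicit step-by-step instruction, while a model that reasons natively can be given the question as is.

```python
def build_cot_prompt(question: str) -> str:
    """Wrap a question in an explicit step-by-step instruction
    (chain of thought applied at the prompt level)."""
    return (
        "Answer the question below. Think step by step and write out your "
        "reasoning before giving the final answer.\n\n"
        f"Question: {question}\n"
        "Reasoning:"
    )


def build_plain_prompt(question: str) -> str:
    """Pass the question through unchanged, as one might for a model
    that already reasons before answering."""
    return f"Question: {question}"


if __name__ == "__main__":
    q = "A train travels 120 km at 60 km/h. How long does the trip take?"
    print(build_cot_prompt(q))
    print("---")
    print(build_plain_prompt(q))
```

Neither function calls a model; they only show where the reasoning instruction lives in each approach.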

o1 is trained with RL to “think” before responding via a private chain of thought. The longer it thinks, the better it does on reasoning tasks. This opens up a new dimension for scaling. We’re no longer bottlenecked by pretraining. We can now scale inference compute too.

— Noam Brown (@polynoamial) September 12, 2024

Superior Benchmark Performance

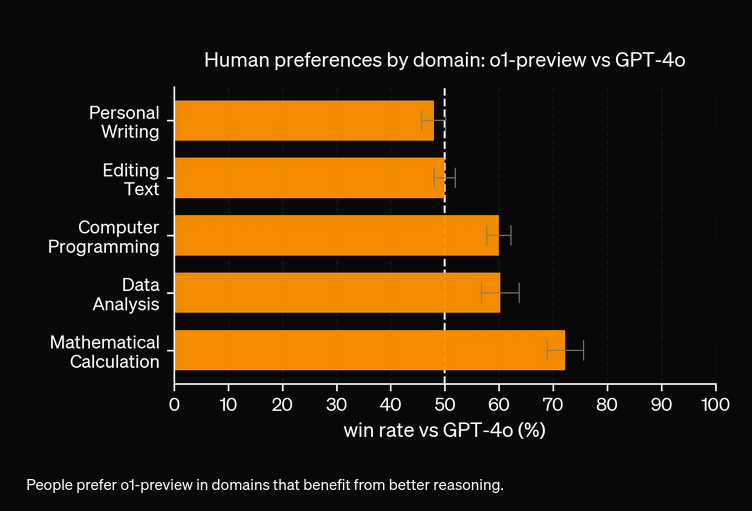

In extensive evaluations, the o1 model has demonstrated remarkable performance across various benchmarks:

- AIME (American Invitational Mathematics Examination): Solves 83% of problems correctly, a substantial improvement over GPT-4o’s 13%.

- GPQA (Expert-Level Test in Sciences): Surpasses PhD-level experts, marking the first AI model to outperform humans on this benchmark.

- MMLU (Massive Multitask Language Understanding): Outperforms GPT-4o in 54 out of 57 subcategories. On the related multimodal benchmark MMMU, o1 scores 78.2% with vision perception enabled.

- Coding Competitions: Achieves high rankings on platforms like Codeforces, outperforming 93% of human competitors.

OpenAI o1 vs OpenAI GPT-4o in a RAG Flow

To test the accuracy of OpenAI o1 and OpenAI GPT-4o, we created two identical flows that differ only in the LLM they use. We will compare the question-answering capability of the two models on two indexed sources about the OpenAI o1 technical report and see how each one answers.

First, we’ll make a simple RAG flow in FlowHunt. It consists of Chat Input, a Document Retriever that fetches the relevant documents, Prompt, Generator, and Chat Output. Notice that I have added an LLM OpenAI component, since I want to specify the model explicitly. Otherwise, it will use GPT-4o by default. Now let’s see the results:

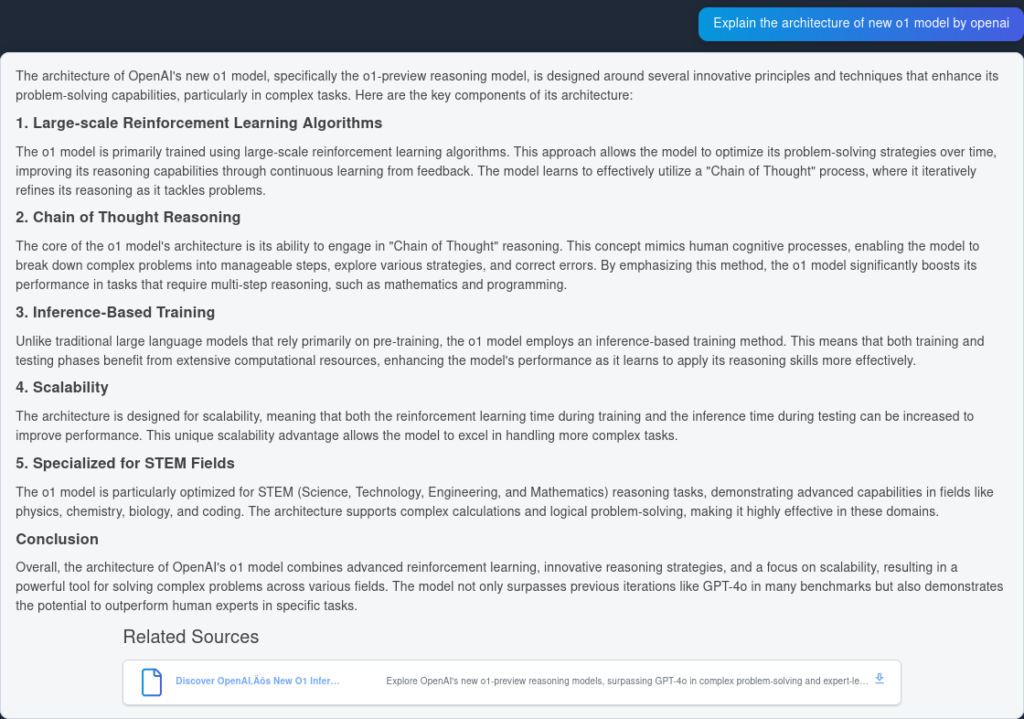

Here is the response from GPT-4o:

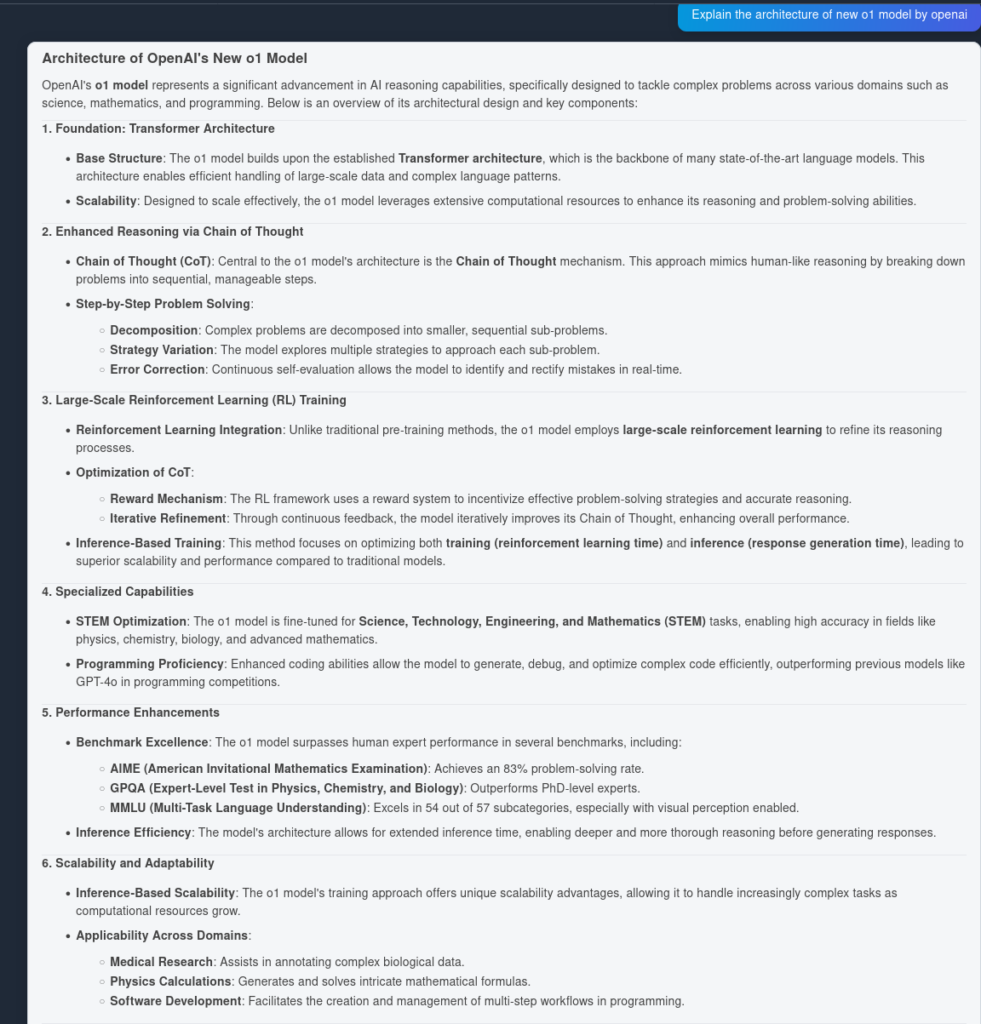

And here is the result from OpenAI o1:

As you can see, the OpenAI o1 model captured more architectural advantages from the article itself: 6 points, as opposed to the 4 points GPT-4o mentioned. In addition, the OpenAI o1 model draws logical implications from each point, enriching the response with more insight into why each architectural change is useful.
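For readers who want to see the shape of the flow used in this section outside of FlowHunt, the sketch below mirrors its stages (Chat Input, Document Retriever, Prompt, Generator, Chat Output) in plain Python. It is not FlowHunt's implementation. The documents, the word-overlap retriever, and the function names are all made up for illustration, and the generator is a pluggable callable so the same flow can be run with two different models.

```python
import re
from typing import Callable, List

# Toy stand-in for the indexed sources; these texts are illustrative only.
DOCUMENTS = [
    "o1 is trained with large-scale reinforcement learning.",
    "o1 uses a private chain of thought before responding.",
    "A RAG flow retrieves documents before generating an answer.",
]


def tokenize(text: str) -> set:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, docs: List[str], top_k: int = 2) -> List[str]:
    """Document Retriever: rank docs by word overlap with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        docs, key=lambda d: len(query_words & tokenize(d)), reverse=True
    )
    return ranked[:top_k]


def build_prompt(question: str, context: List[str]) -> str:
    """Prompt: combine the retrieved context with the user question."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Context:\n{joined}\n\nQuestion: {question}\nAnswer:"


def run_flow(question: str, llm: Callable[[str], str]) -> str:
    """Chat Input -> Retriever -> Prompt -> Generator -> Chat Output."""
    context = retrieve(question, DOCUMENTS)
    prompt = build_prompt(question, context)
    return llm(prompt)  # swap in a different model by passing another callable


if __name__ == "__main__":
    # A fake generator that echoes the prompt, so the sketch runs offline.
    print(run_flow("How is o1 trained?", lambda prompt: prompt))
```

Because the generator is just a parameter, comparing two models means calling `run_flow` twice with the same question and a different `llm` callable, which is the same idea as the two otherwise identical flows above.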

Is the OpenAI o1 model worth it?

Based on our experiments, the o1 model costs more in exchange for higher accuracy. The new model has three types of tokens: prompt tokens, completion tokens, and reasoning tokens (a newly added type), which can make it costly. In most cases, the OpenAI o1 model gives answers that seem more helpful, provided they are grounded in truth. That being said, there are some instances where the GPT-4o model outperforms the OpenAI o1 model. Some tasks simply don’t need reasoning 🤷
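A rough way to see how the extra token type affects the bill is sketched below. The prices are hypothetical placeholders, not real rates, and the sketch assumes reasoning tokens are billed at the output rate and tracked separately from the visible completion tokens. Check your provider's pricing and usage reporting before relying on numbers like these.

```python
from dataclasses import dataclass


@dataclass
class Usage:
    prompt_tokens: int          # tokens sent to the model
    completion_tokens: int      # visible answer tokens
    reasoning_tokens: int = 0   # hidden thinking tokens; 0 for a non-reasoning model


def estimate_cost(
    usage: Usage, input_price_per_1m: float, output_price_per_1m: float
) -> float:
    """Estimate the cost of one request.

    Assumption: reasoning tokens are charged like output tokens.
    """
    billed_output = usage.completion_tokens + usage.reasoning_tokens
    total = (
        usage.prompt_tokens * input_price_per_1m
        + billed_output * output_price_per_1m
    )
    return total / 1_000_000


if __name__ == "__main__":
    # Hypothetical prices per 1M tokens, for illustration only.
    INPUT_PRICE, OUTPUT_PRICE = 1.0, 2.0
    plain = Usage(prompt_tokens=1000, completion_tokens=500)
    reasoning = Usage(prompt_tokens=1000, completion_tokens=500, reasoning_tokens=2000)
    print(estimate_cost(plain, INPUT_PRICE, OUTPUT_PRICE))
    print(estimate_cost(reasoning, INPUT_PRICE, OUTPUT_PRICE))
```

Even with identical prompts and visible answers, the request that spends tokens on reasoning costs more under these assumptions, which is why tasks that do not need reasoning are often better served by the cheaper model.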

Next steps with o1 models

We are especially excited to use these models for AI Agents. o1 models can enhance the reasoning of AI Agents, which can make them well suited to solving tasks in big teams and businesses. However, the models’ reasoning still has considerable room for improvement.
