Different tasks call for different models, and FlowHunt is committed to giving you the best of AI without the need to sign up for countless subscriptions. Instead, you get dozens of text and image generation models in a single dashboard. The LLM components group text generation models by provider, allowing you to swap AI models on the fly.

What is the LLM OpenAI component?

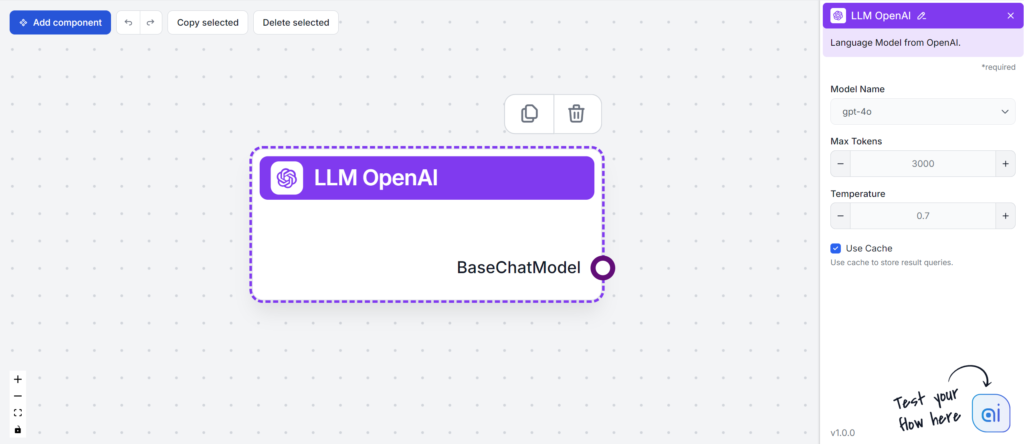

The LLM OpenAI component connects OpenAI’s GPT models to your flow. While Generators and Agents are where the actual magic happens, LLM components let you control which model is used. All components use GPT-4o by default. Connect this component if you want to change the model or gain more control over its settings.

LLM OpenAI Component Settings

Model Name

This is the model picker. Here, you’ll find all OpenAI models FlowHunt supports. OpenAI offers a range of models with different capabilities and prices. For example, the older, less advanced GPT-3.5 costs less than the newest GPT-4o, but the quality and speed of the output will suffer.

OpenAI models available in FlowHunt:

- GPT-4o – OpenAI’s latest and most popular model. A multimodal model capable of processing text, images, and audio, and of searching the web.

- GPT-4o Mini – A smaller, cost-effective version of GPT-4o that outperforms GPT-3.5 Turbo, offers a 128K context window, and costs over 60% less.

- o1 Mini – A streamlined version of the o1 model designed for complex reasoning tasks, balancing performance and efficiency.

- o1 Preview – An advanced model with enhanced reasoning capabilities that excels at complex problem-solving, particularly coding and scientific reasoning. It is available in preview form.

- gpt-4-vision-preview – A preview model that accepts both text and image inputs and supports features like JSON mode and parallel function calling, extending its multimodal capabilities.

- GPT-3.5 Turbo – A legacy GPT model, great for simple tasks without breaking the bank.

When choosing the right model for the task, consider the quality and speed the task requires. Older models are great for saving money on simple bulk tasks and chatting. If you’re generating content or searching the web, we suggest you opt for a newer, more refined model.
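The rule of thumb above can be written down as a tiny lookup. This is a hypothetical helper for illustration only: the task categories and the `pick_model` function are assumptions, not FlowHunt settings, while the model IDs are the names OpenAI’s API uses for the models listed above.

```python
# Hypothetical rule-of-thumb helper; the task categories are illustrative
# assumptions, not FlowHunt settings. Model IDs are OpenAI API names.
NEWER_MODEL = "gpt-4o"          # content generation, web search
BUDGET_MODEL = "gpt-3.5-turbo"  # simple bulk tasks and chatting
REASONING_MODEL = "o1-preview"  # complex coding and scientific reasoning

DEMANDING_TASKS = {"content_generation", "web_search"}
REASONING_TASKS = {"coding", "scientific_reasoning"}


def pick_model(task_type: str) -> str:
    """Return a model ID for a task type, preferring the cheapest adequate model."""
    if task_type in REASONING_TASKS:
        return REASONING_MODEL
    if task_type in DEMANDING_TASKS:
        return NEWER_MODEL
    # Anything else is treated as a simple task where cost matters most.
    return BUDGET_MODEL


for task in ["chat", "content_generation", "coding"]:
    print(task, "->", pick_model(task))
```

In practice you make this choice in the Model Name dropdown; the sketch only makes the trade-off explicit.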

Max Tokens

Tokens are the individual units of text the model processes and generates. Tokenization varies by model, and a single token can be a whole word, part of a word, or a single character. Models are usually priced per million tokens.
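Per-million-token pricing makes cost a simple multiplication. OpenAI typically bills input (prompt) and output (generated) tokens at different rates. The prices below are placeholder assumptions, not current OpenAI rates, and `estimate_cost` is a hypothetical helper.

```python
# Hypothetical prices for illustration only; check OpenAI's pricing page for real rates.
def estimate_cost(input_tokens: int, output_tokens: int,
                  input_price_per_million: float,
                  output_price_per_million: float) -> float:
    """Estimate the cost in USD of one request billed per million tokens."""
    input_cost = input_tokens * input_price_per_million / 1_000_000
    output_cost = output_tokens * output_price_per_million / 1_000_000
    return input_cost + output_cost


# A request with 3,000 prompt tokens and 1,000 generated tokens,
# at assumed rates of $2.50 (input) and $10.00 (output) per million tokens.
cost = estimate_cost(3_000, 1_000, 2.50, 10.00)
print(f"${cost:.4f} per request, ${cost * 10_000:.2f} for 10,000 requests")
```

Swapping in a cheaper model’s rates shows why older models pay off for simple bulk tasks.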

The Max Tokens setting limits the total number of tokens that can be processed in a single interaction or request, keeping responses within reasonable bounds. The default limit is 4,000 tokens, which is generally enough for summarizing documents or drawing on several sources to generate an answer.
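For readers curious how such a setting reaches the model, here is a minimal sketch assuming FlowHunt forwards Max Tokens and Temperature as the `max_tokens` and `temperature` fields of an OpenAI Chat Completions request; that mapping is an assumption. `build_chat_request` and `rough_token_estimate` are hypothetical helpers, and the four-characters-per-token figure is only OpenAI’s rough rule of thumb for English text.

```python
import json

DEFAULT_MAX_TOKENS = 4_000  # FlowHunt's default Max Tokens value, per the text above


def rough_token_estimate(text: str) -> int:
    """Very rough heuristic: about 4 characters per token for English text."""
    return max(1, len(text) // 4)


def build_chat_request(prompt: str, model: str = "gpt-4o",
                       max_tokens: int = DEFAULT_MAX_TOKENS,
                       temperature: float = 0.3) -> str:
    """Build a Chat Completions request body (assumed mapping of FlowHunt settings)."""
    if max_tokens <= 0:
        raise ValueError("max_tokens must be a positive integer")
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,    # caps the size of the response
        "temperature": temperature,  # see the Temperature section
    }
    return json.dumps(body)


document = "Lorem ipsum dolor sit amet. " * 200
print("Estimated prompt tokens:", rough_token_estimate(document))
print(build_chat_request("Summarize: " + document)[:80])
```

The sketch targets GPT-4o-style models; OpenAI’s o1 models expect `max_completion_tokens` instead of `max_tokens`.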

Temperature

Temperature controls the variability of answers, ranging from 0 to 1.

A low temperature such as 0.1 makes responses very much to the point, but potentially repetitive and lacking in depth.

A high temperature of 1 allows maximum creativity but risks irrelevant or even hallucinated responses.

For example, the recommended temperature for a customer service bot is between 0.2 and 0.5. This range keeps answers relevant and on script while allowing a natural level of variation.
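Why does temperature behave this way? In sampling-based language models, the model’s raw scores for candidate next tokens are divided by the temperature before being turned into probabilities. The sketch below shows that general mechanism, not OpenAI’s internal implementation; the candidate tokens and scores are made up.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw next-token scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        # Dividing by zero is undefined; implementations typically fall back
        # to always picking the highest-scoring token (greedy decoding).
        raise ValueError("temperature must be greater than 0")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens, logits, temperature, rng):
    """Draw one token according to the temperature-adjusted probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


tokens = ["Sure", "Certainly", "Absolutely"]  # hypothetical candidate tokens
logits = [2.0, 1.0, 0.5]                      # hypothetical model scores

for t in (0.2, 0.5, 1.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])

rng = random.Random(42)
print(sample_token(tokens, logits, 0.2, rng))
```

At 0.2, over 99% of the probability goes to the top token, so answers stay predictable. At 1.0, the alternatives keep a meaningful share, which adds variety along with the risk of drifting off topic.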

How To Add The LLM OpenAI To Your Flow

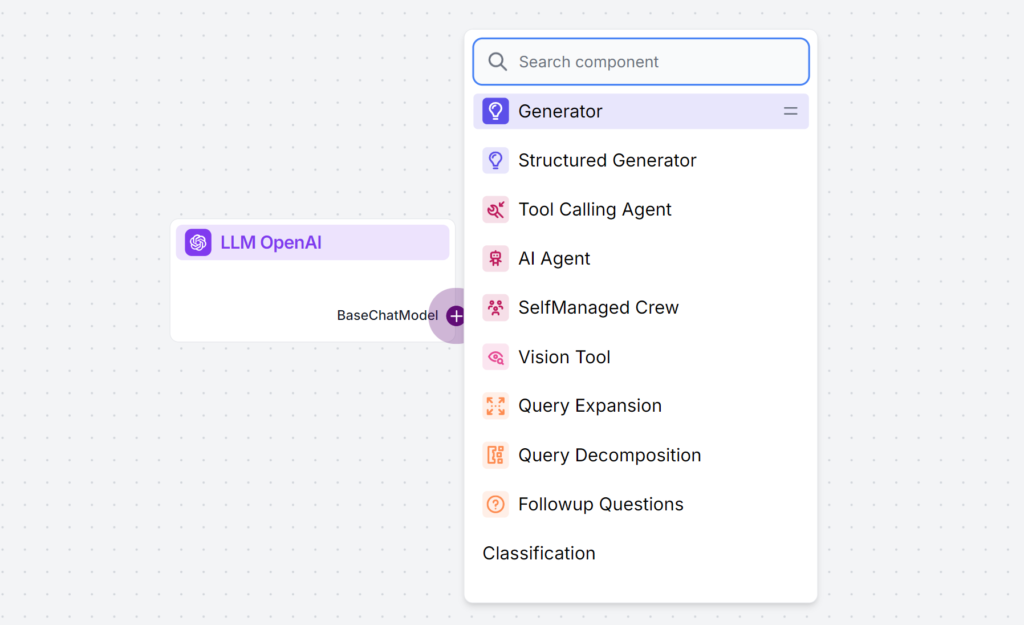

You’ll notice that all LLM components have only an output handle. Input doesn’t pass through the component, since it only represents the model; the actual generation happens in AI Agents and Generators.

The LLM handle is always purple. The LLM input handle appears on any component that uses AI to generate text or process data. Click the handle to see the options:

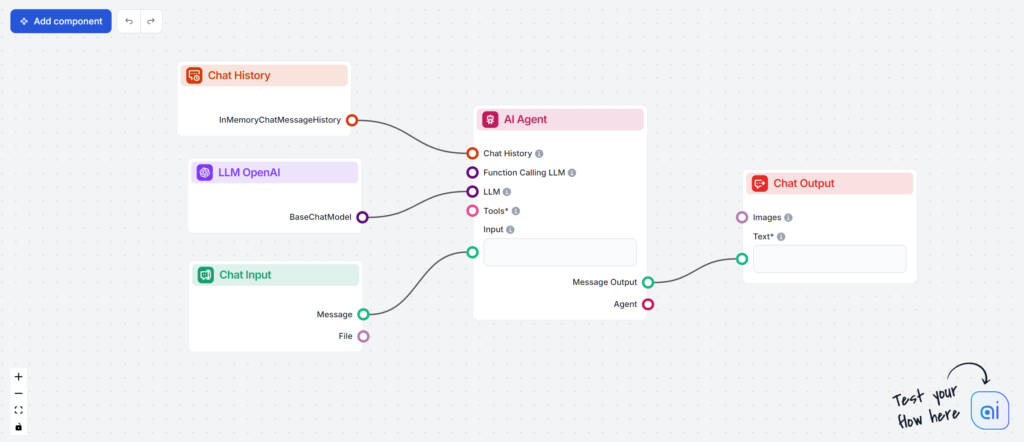

This allows you to create all sorts of tools. Let’s see the component in action. Here’s a simple Agent-powered chatbot Flow using o1 Preview to generate responses. You can think of it as a basic ChatGPT chatbot.

This simple Chatbot Flow includes:

- Chat input: Represents the message a user sends in chat.

- Chat history: Ensures the chatbot can remember and factor in past responses.

- Chat output: Represents the chatbot’s final response.

- AI Agent: An autonomous AI agent that generates responses.

- LLM OpenAI: The connection to OpenAI’s text generation models.
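The chatbot above can be modeled as a small typed graph. This is a hypothetical data model, not FlowHunt’s API: the `Component` class, handle names, and handle types such as `"llm"` and `"text"` are assumptions for illustration. It captures two points from this article: the LLM OpenAI component has only an output handle, so it can feed an AI Agent but not Chat Output, and an agent with no LLM connected falls back to a default model (GPT-4o, as the FAQ states).

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

DEFAULT_MODEL = "gpt-4o"  # assumed default when no LLM component is connected


@dataclass
class Component:
    name: str
    # Handle name -> handle type. Edges may only join handles of the same type.
    inputs: Dict[str, str] = field(default_factory=dict)
    outputs: Dict[str, str] = field(default_factory=dict)


# (source component, source handle, target component, target handle)
Edge = Tuple[str, str, str, str]

FLOW: Dict[str, Component] = {
    "chat_input": Component("Chat Input", outputs={"message": "text"}),
    "chat_history": Component("Chat History", outputs={"history": "history"}),
    # The LLM component has an output handle only; nothing flows into it.
    "llm_openai": Component("LLM OpenAI", outputs={"llm": "llm"}),
    "ai_agent": Component(
        "AI Agent",
        inputs={"input": "text", "history": "history", "llm": "llm"},
        outputs={"response": "text"},
    ),
    "chat_output": Component("Chat Output", inputs={"message": "text"}),
}

EDGES: List[Edge] = [
    ("chat_input", "message", "ai_agent", "input"),
    ("chat_history", "history", "ai_agent", "history"),
    ("llm_openai", "llm", "ai_agent", "llm"),
    ("ai_agent", "response", "chat_output", "message"),
]


def can_connect(flow: Dict[str, Component], edge: Edge) -> bool:
    """True if both handles exist and carry the same type."""
    src, src_handle, dst, dst_handle = edge
    out_type = flow[src].outputs.get(src_handle)
    in_type = flow[dst].inputs.get(dst_handle)
    return out_type is not None and out_type == in_type


def resolve_model(agent: str, edges: List[Edge], model_settings: Dict[str, str]) -> str:
    """Return the model an agent will use: the connected LLM's, else the default."""
    for src, _, dst, dst_handle in edges:
        if dst == agent and dst_handle == "llm":
            return model_settings.get(src, DEFAULT_MODEL)
    return DEFAULT_MODEL


print(all(can_connect(FLOW, e) for e in EDGES))
print(can_connect(FLOW, ("llm_openai", "llm", "chat_output", "message")))
print(resolve_model("ai_agent", EDGES, {"llm_openai": "o1-preview"}))
```

Removing the LLM edge from `EDGES` leaves the agent on the default model, which mirrors why the LLM component is optional.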

Frequently Asked Questions

What are LLMs?

Large language models are a type of AI trained to process, understand, and generate human-like text. A common example is ChatGPT, which can provide elaborate responses to almost any query.

Can I connect an LLM straight to Chat Output?

No, the LLM component is only a representation of the AI model. It changes the model the Generator will use. The default LLM in the Generator is GPT-4o.

What LLMs are available in Flows?

At the moment, only the OpenAI component is available. We plan to add more in the future.

Do I need to add an LLM to my flow?

No, Flows are a versatile feature with many use cases that don’t require an LLM. Add an LLM if you want to build a conversational chatbot that generates free-form text answers.

Does the LLM OpenAI component generate the answer?

Not really. The component only represents the model and sets the rules it follows. The Generator component connects it to the input and runs the query through the LLM to create the output.