Without a good prompt, bots tend to behave generically and often miss the mark with their answers. Prompts give the language model instructions and context, helping it understand what kind of text it should produce.

What is the Prompt component?

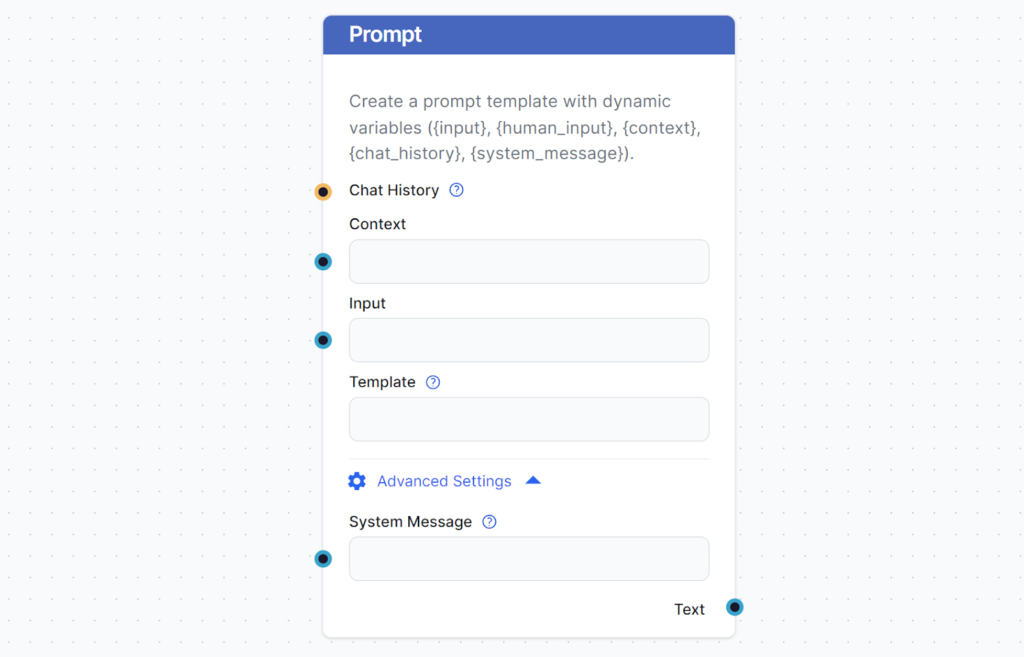

The Prompt component lets you instruct the LLM by specifying context, roles, and behaviors, which helps keep its answers appropriate and relevant.

System Message

Give your bot a personality and a role so it knows how to interpret conversations and answer appropriately. You set this up in the editable system message field: simply write who the bot is and how it should answer.

Follow this template:

“You are a {role} that {behavior}.”

For example:

“You are a helpful customer service bot that talks like a medieval knight.”
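The template is a simple fill-in-the-blanks string. As a minimal sketch of how the two blanks combine, here it is filled with Python's `str.format`. The helper name `build_system_message` is hypothetical and not part of the component; in the product you simply type the finished sentence into the field.

```python
def build_system_message(role: str, behavior: str) -> str:
    """Fill the "You are a {role} that {behavior}." template (hypothetical helper)."""
    template = "You are a {role} that {behavior}."
    return template.format(role=role, behavior=behavior)


message = build_system_message(
    role="helpful customer service bot",
    behavior="talks like a medieval knight",
)
print(message)
# prints: You are a helpful customer service bot that talks like a medieval knight.
```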

You can test your chatbot by typing “How can you help me?” If the rest of the flow is set up correctly, your chatbot should respond according to its defined role and behavior.

Template

This is an advanced, optional setting. You can create prompt templates with variables to gain full control over the chat output. For example:

As a skilled SEO, analyze the content of the URL and come up with a title up to 65 characters long.
— Content of the URL —
{input}
Task: Generate a Title similar to others using {human_input} query. Don’t change {human_input} in the new title.
NEW TITLE:
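To show what the variables do, here is a minimal sketch that fills the SEO template above using Python's `str.format`. This only illustrates placeholder substitution; the component's actual templating engine is not described in this article, and the sample values for `input` and `human_input` are made up.

```python
# The SEO template from above, one logical line per string piece.
SEO_TEMPLATE = (
    "As a skilled SEO, analyze the content of the URL and come up with "
    "a title up to 65 characters long.\n"
    "— Content of the URL —\n"
    "{input}\n"
    "Task: Generate a Title similar to others using {human_input} query. "
    "Don’t change {human_input} in the new title.\n"
    "NEW TITLE:"
)


def render(template: str, **variables: str) -> str:
    # str.format replaces every occurrence of each placeholder,
    # so {human_input} is filled in both places it appears.
    return template.format(**variables)


prompt = render(
    SEO_TEMPLATE,
    input="<text scraped from the URL>",  # made-up sample context
    human_input="prompt engineering",     # made-up sample query
)
print(prompt)
```

Note that a variable used twice is filled everywhere it appears, which is what lets the template insist that the new title keep the original query.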

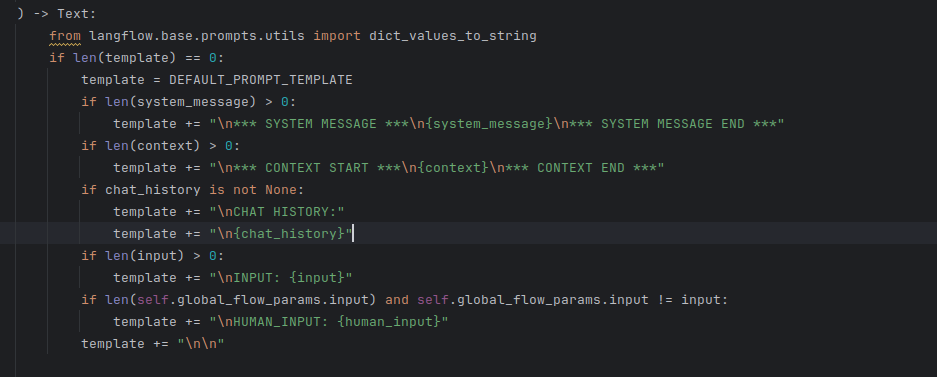

The default prompt template looks like this:

The default template mirrors the structure of the component’s settings. You can override those settings by editing the template field and using its variables. Writing your own templates gives you greater control over the output.
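The exact default template is not reproduced in this text, so the following is only a hedged sketch of the idea: a default layout that has one section per setting (system message, chat history, context, input), and a custom template that overrides it. The placeholder names `{system_message}`, `{chat_history}`, `{context}`, and `{human_input}`, and the function `build_prompt`, are assumptions for illustration.

```python
from typing import Optional

# Hypothetical default layout: one section per component setting.
DEFAULT_TEMPLATE = (
    "{system_message}\n\n"
    "Chat history:\n{chat_history}\n\n"
    "Context:\n{context}\n\n"
    "Question: {human_input}"
)


def build_prompt(
    system_message: str,
    human_input: str,
    context: str = "",
    chat_history: Optional[list[str]] = None,
    template: str = DEFAULT_TEMPLATE,
) -> str:
    """Render a prompt; pass a custom `template` to override the default layout."""
    history_text = "\n".join(chat_history) if chat_history else "(none)"
    # str.format ignores keyword arguments a template does not use,
    # so a custom template may reference only some of the variables.
    return template.format(
        system_message=system_message,
        chat_history=history_text,
        context=context or "(none)",
        human_input=human_input,
    )


default_prompt = build_prompt(
    system_message="You are a helpful customer service bot that talks like a medieval knight.",
    human_input="How can you help me?",
)
custom_prompt = build_prompt(
    system_message="You are a helpful customer service bot.",
    human_input="How can you help me?",
    template="Answer briefly: {human_input}",
)
print(custom_prompt)  # prints: Answer briefly: How can you help me?
```

The override in `custom_prompt` drops the system message entirely, which is the trade-off of a custom template: you gain full control, but anything you leave out of it no longer reaches the LLM.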

How to connect the Prompt component to your flow

The Prompt is an optional component that further refines and specifies the final output. It accepts connections from several components:

- Chat History: Connecting Chat History is not required but is often beneficial. Remembering previous messages makes future replies more relevant.

- Context: Any meaningful text output can serve as context. The most common choice is to connect the knowledge from retrievers.

- Input: Only the Chat Input component can be connected here.

This component’s output is text that can be connected to various components. Most of the time, you immediately follow up with the Generator component to connect the prompt to an LLM.
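The connection rules above can be expressed as a small check. This is a hypothetical sketch, not the product's validation logic: the port names (`chat_history`, `context`, `input`) and component identifiers (`ChatInput`, `URLRetriever`) are made up for illustration.

```python
from dataclasses import dataclass

# Hypothetical port names for the Prompt component, mirroring the list above.
PORTS = {"chat_history", "context", "input"}


@dataclass
class Connection:
    source: str  # upstream component type, e.g. "ChatInput" (hypothetical identifier)
    port: str    # which Prompt port the edge plugs into


def check_prompt_connections(connections: list[Connection]) -> list[str]:
    """Return human-readable problems; an empty list means the wiring looks fine."""
    problems = []
    for conn in connections:
        if conn.port not in PORTS:
            problems.append(f"unknown port: {conn.port}")
        elif conn.port == "input" and conn.source != "ChatInput":
            # Only the Chat Input component may feed the input port.
            problems.append(f"{conn.source} cannot be connected to input")
    # chat_history is optional and context accepts any text output,
    # so neither needs an extra check here.
    return problems


ok = check_prompt_connections([
    Connection("ChatInput", "input"),
    Connection("URLRetriever", "context"),
])
bad = check_prompt_connections([Connection("URLRetriever", "input")])
print(ok)   # prints: []
print(bad)  # prints: ['URLRetriever cannot be connected to input']
```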

Example

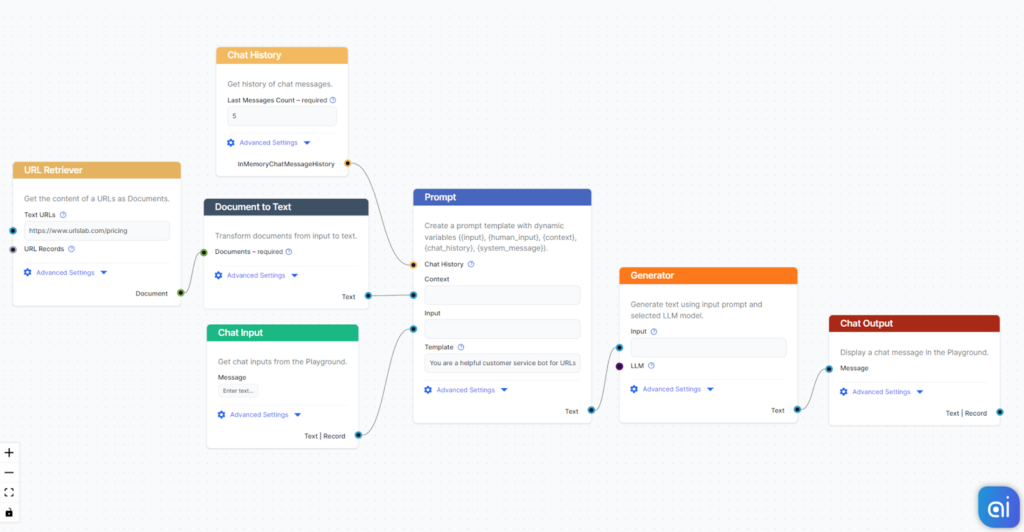

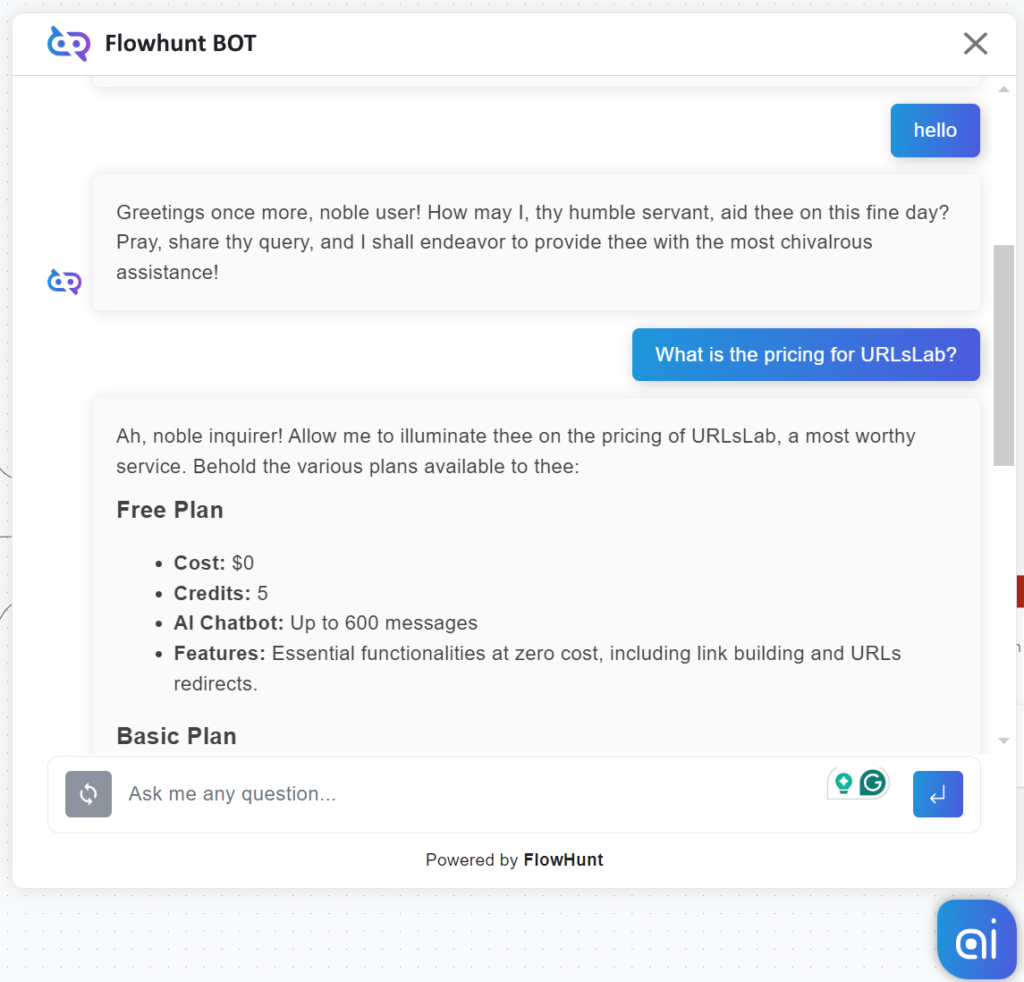

Let’s create a very simple bot. We’ll expand on the medieval knight bot example from earlier. While it talks funny, its main mission is to be a helpful customer service bot, and we want it to provide relevant information.

Let’s ask our bot a typical customer service question. We’ll ask about the pricing of URLsLab. To get a successful answer, we need to:

- Give it context: For the purposes of this example, let’s use the URL retriever component to give it a page with all the necessary information.

- Connect input: Input is always the human message from the Chat Input component.

- Chat History: It’s optional, but let’s connect it for this particular case.

- System message: We’ll keep the system message “You are a helpful customer service bot that talks like a medieval knight.” Prompts can be much more elaborate than this; see our prompts library for inspiration.

- Add Generator: We want the bot to have conversational abilities, so we connect the Generator. The Prompt serves as the Generator’s input.
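The steps above can be sketched end to end with stand-in functions. Everything here is hypothetical: `url_retriever` returns a placeholder instead of fetching a page, `generator` returns a dummy reply instead of calling an LLM, and the URL is an example.com placeholder rather than the real pricing page.

```python
# Stand-ins for the components in the example flow (all hypothetical).
def url_retriever(url: str) -> str:
    # A real retriever would fetch and extract the page; this stub returns a placeholder.
    return f"<text of {url}>"


def prompt_component(system_message: str, context: str, human_input: str,
                     chat_history: list[str]) -> str:
    # Combine system message, chat history, context, and input into one text output.
    history = "\n".join(chat_history) or "(none)"
    return (f"{system_message}\n\nChat history:\n{history}\n\n"
            f"Context:\n{context}\n\nQuestion: {human_input}")


def generator(prompt: str) -> str:
    # Placeholder for the Generator component, which would send the prompt to an LLM.
    return f"[LLM reply to a {len(prompt)}-character prompt]"


SYSTEM_MESSAGE = "You are a helpful customer service bot that talks like a medieval knight."
history: list[str] = []

question = "How much does URLsLab cost?"                # human message from Chat Input
context = url_retriever("https://example.com/pricing")  # placeholder URL
prompt = prompt_component(SYSTEM_MESSAGE, context, question, history)
reply = generator(prompt)

# Chat History: remember this turn so the next prompt can include it.
history += [f"Human: {question}", f"AI: {reply}"]
print(prompt)
print(reply)
```

Keeping the Prompt as a pure function of its inputs mirrors the flow: retriever, chat input, and history feed the Prompt, and only its text output reaches the Generator.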

The resulting flow will look something like this:

It’s time to test the knowledge of our medieval knight bot. The URL we gave it points to the URLsLab pricing page, so let’s ask about pricing:

Our bot now answers basic queries in pompous, old-timey language. More importantly, notice how it sticks to its central role as a helpful customer service bot and draws on the information from the specified URL.

Frequently Asked Questions

What is the Prompt component?

The Prompt component gives the bot instructions and context, ensuring it replies in the desired way.

Do I always need to include Prompt in my flows?

No. The component is optional, though including it is a great idea for many use cases.

What is the system message?

While it may sound intimidating, this setting is very intuitive. It’s an editable text field where you set the bot’s personality and role. Simply fill in the blanks: “You are a {role} that {behavior}.” For example: “You are a helpful customer service bot that talks like a medieval knight.”
