Some user questions are complex enough to stop even a seasoned customer service professional in their tracks. The key is to break such a question into smaller ones that must be answered before tackling the big question at hand. That’s what the Task Decomposition component does for AI.

What is the Task Decomposition component?

Task Decomposition helps the AI understand complex queries by breaking them into smaller subqueries, so the bot can give more detailed and focused answers. Without it, the chatbot may answer vaguely or incompletely.
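To make the idea concrete, here is a minimal sketch of prompt-based decomposition in Python. It is not the component's actual implementation: the prompt wording, the `decompose` function, and the `stub_llm` stand-in (which returns a canned reply instead of calling a real model) are all assumptions made for illustration.

```python
import re
from typing import Callable, List

# Hypothetical prompt; the wording used by the real component is not documented here.
PROMPT_TEMPLATE = (
    "Break the user question below into the smallest set of standalone "
    "subqueries needed to answer it. Return one subquery per line.\n\n"
    "Question: {query}"
)

# Matches a leading list marker such as "-", "*", "1." or "2)".
LIST_MARKER = re.compile(r"^\s*(?:[-*]|\d+[.)])\s*")


def decompose(query: str, llm: Callable[[str], str]) -> List[str]:
    """Ask the model for subqueries and parse one per non-empty line."""
    raw = llm(PROMPT_TEMPLATE.format(query=query))
    subqueries = []
    for line in raw.splitlines():
        cleaned = LIST_MARKER.sub("", line).strip()
        if cleaned:
            subqueries.append(cleaned)
    return subqueries


def stub_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; returns a canned reply."""
    return (
        "1. What is the refund policy for annual plans?\n"
        "2. How do I downgrade to the monthly plan?\n"
        "3. Will my data be kept after downgrading?\n"
    )


question = (
    "I want a refund on my annual plan, then switch to monthly, "
    "but will I lose my data?"
)
for sub in decompose(question, stub_llm):
    print(sub)
```

Each printed line is a question the bot can answer on its own, which is what makes the final combined answer more focused.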

How to connect the Task Decomposition to your Flow

Connect the Task Decomposition right after the chat input. That way, all subsequent components can use the decomposed query.

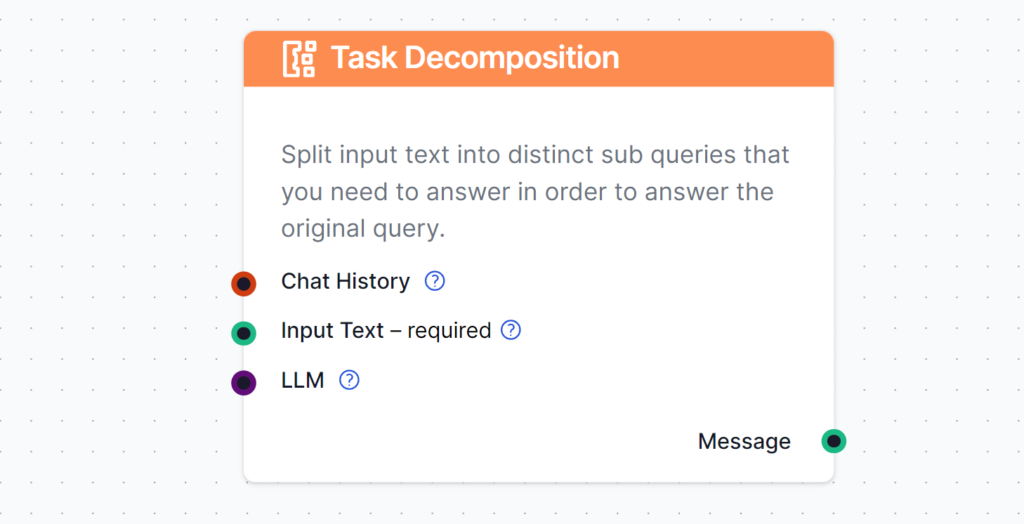

Input

- Chat history (optional): Previous messages that give context to the query.

- Input Text (required): The human message that gets decomposed.

- LLM (optional): Connect an LLM component to change the model used for Task Decomposition. By default, the component uses GPT-4o.

TIP: Task Decomposition works just as well with older LLMs. Switching to a less advanced model cuts costs and makes the chatbot faster.
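The three inputs above can be pictured as one required field and two optional ones. The sketch below is only an illustration under assumptions: the `DecompositionInputs` class, its field names, the prompt layout, and the `"gpt-4o"` default identifier are hypothetical and do not reflect the component's internal code.

```python
from dataclasses import dataclass, field
from typing import List, Optional

DEFAULT_MODEL = "gpt-4o"  # assumed identifier for the default model


@dataclass
class DecompositionInputs:
    input_text: str                                         # required
    chat_history: List[str] = field(default_factory=list)   # optional
    model: Optional[str] = None                             # optional override

    def resolved_model(self) -> str:
        """Use the connected model if one is given, else the default."""
        return self.model or DEFAULT_MODEL

    def build_prompt(self) -> str:
        """Combine optional history and the required message into one prompt."""
        if not self.input_text.strip():
            raise ValueError("input_text is required")
        parts = []
        if self.chat_history:
            parts.append("Conversation so far:\n" + "\n".join(self.chat_history))
        parts.append("Message to decompose:\n" + self.input_text.strip())
        return "\n\n".join(parts)


inputs = DecompositionInputs(
    input_text="Can I upgrade it and keep the discount?",
    chat_history=["User: I'm on the Starter plan.", "Bot: Got it."],
)
print(inputs.resolved_model())
print(inputs.build_prompt())
```

Note how the chat history is what lets "it" in the message resolve to the Starter plan; without history, the same message would be ambiguous.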

Output

The output is a set of simplified subqueries derived from the original query. It can be passed to any component that accepts Input or Context.
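As a rough illustration of how subqueries might be handed to a downstream component as text, the sketch below joins them into a single numbered block. The exact output format of the component is not specified here, so the list-of-strings shape and the `as_context` helper are assumptions.

```python
from typing import List


def as_context(subqueries: List[str]) -> str:
    """Join subqueries into one numbered text block (hypothetical format)."""
    return "\n".join(f"{i}. {q}" for i, q in enumerate(subqueries, start=1))


subqueries = [
    "What is the refund policy for annual plans?",
    "How do I downgrade to the monthly plan?",
]
print(as_context(subqueries))
```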

Frequently Asked Questions

What is the Task Decomposition component?

The Task Decomposition component breaks complex and compound queries into simple subqueries that are easier to address. This way, the bot can provide more detailed and focused answers.

What happens if I don’t use the Task Decomposition?

Task Decomposition isn’t necessary for all Flows. It is mainly useful for customer service bots and other cases where complex input calls for a step-by-step approach. Using Task Decomposition helps ensure detailed and highly relevant answers. Without it, the bot may resort to vague answers.

What’s the difference between Query Expansion and Task Decomposition?

Both help the bot understand the query better. Task Decomposition takes complex or compound queries and breaks them down into smaller executable steps. Query Expansion, on the other hand, amends incomplete or faulty queries so that they are clear and complete.