Because it learns from large data sets and picks up the nuances of language, AI handles translation well. Unlike earlier rule-based systems, it also takes context into account, so its translations fit the surrounding text more closely.

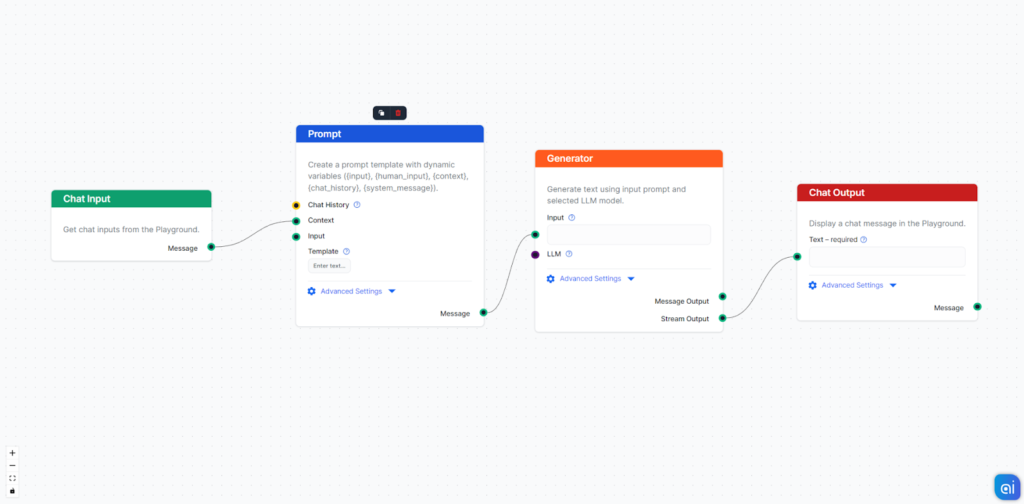

This is the simplest possible translation flow. You send a message containing the text you want to translate and receive the translation as the answer. You don’t need to state the task in your message, because the Prompt already specifies it.

The Flow uses the following Prompt template:

“You are a professional translator.

Your task is to translate INPUT TEXT into English.

Keep the same formatting of input text in the translated text.

TRANSLATE into English:

{input}”

Feel free to experiment with the Prompt, adding more instructions or even changing the translation language.
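One way to experiment with the translation language is to turn it into a second placeholder. The sketch below is plain Python and not the Flow's own implementation: the `{language}` placeholder and the `build_prompt` helper are assumptions introduced for illustration, and only `{input}` comes from the template above.

```python
# Hypothetical variant of the Prompt template with a configurable target language.
# Only the {input} placeholder exists in the original template; {language} is an
# assumption added for this example.
PROMPT_TEMPLATE = (
    "You are a professional translator.\n\n"
    "Your task is to translate INPUT TEXT into {language}.\n\n"
    "Keep the same formatting of input text in the translated text.\n\n"
    "TRANSLATE into {language}:\n\n"
    "{input}"
)


def build_prompt(text: str, language: str = "English") -> str:
    """Fill the template with the target language and the user's text."""
    # str.replace is used instead of str.format so that curly braces inside
    # the user's text are passed through untouched. The user's text is
    # inserted last, so it is never scanned for placeholders.
    prompt = PROMPT_TEMPLATE.replace("{language}", language)
    return prompt.replace("{input}", text)


if __name__ == "__main__":
    print(build_prompt("Bonjour tout le monde", language="German"))
```

Keeping the language as a parameter means the rest of the instructions stay identical across languages, so any difference in output can be traced to that one change.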

Components breakdown

- Chat Input: This is the message you send in the chat. It’s the starting point of any flow.

- Prompt: Passes detailed instructions, roles, and behaviors to the AI.

- Generator: Connects the AI model that generates the text output. By default, it uses ChatGPT-4.

- Chat Output: Represents the chatbot’s answer in the chat.
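The way these four components hand data to one another can be sketched as a small pipeline. This is a rough illustration under stated assumptions, not how the product is built: `run_flow` and `echo_generator` are hypothetical names, and the generator is a stand-in function rather than a real model call.

```python
from typing import Callable

# The Prompt template from this page, with its {input} placeholder.
TEMPLATE = (
    "You are a professional translator.\n\n"
    "Your task is to translate INPUT TEXT into English.\n\n"
    "Keep the same formatting of input text in the translated text.\n\n"
    "TRANSLATE into English:\n\n"
    "{input}"
)


def run_flow(chat_input: str, generator: Callable[[str], str]) -> str:
    """Chat Input -> Prompt -> Generator -> Chat Output."""
    # Prompt: wrap the user's message in the fixed instructions.
    prompt = TEMPLATE.replace("{input}", chat_input)
    # Generator: any callable that maps a prompt string to generated text.
    answer = generator(prompt)
    # Chat Output: the text that would be shown back to the user.
    return answer.strip()


def echo_generator(prompt: str) -> str:
    """Stand-in generator (assumption): returns the text after the last
    blank line of the prompt instead of calling a model."""
    return prompt.rsplit("\n\n", 1)[-1]


if __name__ == "__main__":
    print(run_flow("Hola, ¿qué tal?", echo_generator))
```

Because the generator is passed in as a plain callable, swapping in a different model only means passing a different function; the other three steps stay the same.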

Next steps

There are several ways you can improve this flow:

- Change the Prompt template to reflect your exact needs.

- Connect an LLM component to the Generator to pick a different model.

- Connect Chat History to let previous messages give context to later translations.

- Add Retrievers to provide context and ensure translation accuracy for niche topics.

- Add Splitters to help the bot efficiently answer complicated queries.
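To make the Chat History idea more concrete, here is a minimal sketch of how earlier messages could be folded into the prompt so that later translations stay consistent. It assumes a simple "last few turns" strategy; the `HistoryPrompt` class, its method names, and the wording of the context section are all hypothetical and do not describe the Chat History component's actual behavior.

```python
from collections import deque


class HistoryPrompt:
    """Builds a translation prompt that includes recent turns as context."""

    def __init__(self, max_turns: int = 3) -> None:
        # A bounded deque drops the oldest turn automatically once full.
        self.turns = deque(maxlen=max_turns)

    def record(self, source: str, translation: str) -> None:
        """Store one finished exchange (original text and its translation)."""
        self.turns.append((source, translation))

    def build(self, text: str) -> str:
        """Return the prompt for `text`, preceded by any stored turns."""
        lines = ["You are a professional translator.", ""]
        if self.turns:
            lines.append("Earlier messages and their translations, for context:")
            for source, translation in self.turns:
                lines.append(f"- {source} => {translation}")
            lines.append("")
        lines += ["TRANSLATE into English:", "", text]
        return "\n".join(lines)


if __name__ == "__main__":
    history = HistoryPrompt(max_turns=2)
    history.record("Der Vertrag", "The contract")
    print(history.build("Er wurde gestern unterschrieben."))
```

Capping the number of stored turns keeps the prompt short; a larger cap gives the model more context at the cost of a longer prompt.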