Document reranking is the process of reordering retrieved documents based on their relevance to the user’s query. After an initial retrieval step, reranking refines the results by evaluating each document’s relevance more precisely, ensuring that the most pertinent documents are prioritized.

What Is Retrieval-Augmented Generation (RAG)?

Retrieval-Augmented Generation (RAG) is an advanced framework that combines the capabilities of Large Language Models (LLMs) with information retrieval systems. In RAG, when a user submits a query, the system retrieves relevant documents from a vast knowledge base and feeds this information into the LLM to generate informed and contextually accurate responses. This approach enhances the accuracy and relevance of AI-generated content by grounding it in factual data.

Understanding Query Expansion

What Is Query Expansion?

Definition

Query expansion is a technique used in information retrieval to enhance the effectiveness of search queries. It involves augmenting the original query with additional terms or phrases that are semantically related. The primary goal is to bridge the gap between the user’s intent and the language used in relevant documents, thereby improving the retrieval of pertinent information.

How It Works

In practice, query expansion can be achieved through various methods:

- Synonym Expansion: Incorporating synonyms of the query terms to cover different expressions of the same concept.

- Related Terms: Adding terms that are contextually related but not direct synonyms.

- LLM-Based Expansion: Using Large Language Models to generate expanded queries by predicting words or phrases that are relevant to the original query.

By expanding the query, the retrieval system can cast a wider net, capturing documents that might have been missed due to variations in terminology or phrasing.
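
As a rough illustration of synonym expansion, the sketch below appends known synonyms to a query. The `SYNONYMS` table and the `expand_with_synonyms` helper are hypothetical names invented for this example; a real system would more likely draw on a thesaurus, a domain glossary, or an LLM.

```python
# Hypothetical, hand-written synonym table used only for illustration.
SYNONYMS = {
    "fix": ["repair", "troubleshoot"],
    "gadget": ["device", "appliance"],
}


def expand_with_synonyms(query: str, synonyms: dict[str, list[str]]) -> str:
    """Append known synonyms of each query term to the original query."""
    terms = query.lower().split()
    expanded = list(terms)
    for term in terms:
        for synonym in synonyms.get(term, []):
            if synonym not in expanded:  # avoid duplicate terms
                expanded.append(synonym)
    return " ".join(expanded)


print(expand_with_synonyms("fix my gadget", SYNONYMS))
```

Keeping the original terms first means the expansion only adds vocabulary and never replaces what the user typed.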

Why Is Query Expansion Important in RAG Systems?

Improving Recall

Recall refers to the ability of the retrieval system to find all relevant documents. Query expansion enhances recall by:

- Retrieving documents that use different terms to describe the same concept.

- Capturing documents that cover related subtopics or broader aspects of the query.

Addressing Query Ambiguity

Users often submit short or ambiguous queries. Query expansion helps in:

- Clarifying the user’s intent by considering multiple interpretations.

- Providing a more comprehensive search by including various aspects of the topic.

Enhancing Document Matching

By including additional relevant terms, the system increases the likelihood of matching the query with documents that might use different vocabulary, thus improving the overall effectiveness of the retrieval process.

Methods of Query Expansion

1. Pseudo-Relevance Feedback (PRF)

What Is PRF?

Pseudo-Relevance Feedback is an automatic query expansion method where the system assumes that the top-ranked documents from an initial search are relevant. It extracts significant terms from these documents to refine the original query.

How PRF Works

- Initial Query Execution: The user’s original query is executed, and top documents are retrieved.

- Term Extraction: Key terms from these documents are identified based on frequency or significance.

- Query Refinement: The original query is expanded with these key terms.

- Second Retrieval: The expanded query is used to perform a new search, ideally retrieving more relevant documents.
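
The four PRF steps above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the toy `CORPUS`, the `STOPWORDS` set, and the term-overlap `search` function are invented stand-ins for a real index and retriever, and raw term frequency stands in for a proper term-weighting scheme.

```python
from collections import Counter

# Toy corpus and stopword list, invented purely for illustration.
CORPUS = [
    "revenue growth came from marketing campaigns and pricing",
    "marketing campaigns lifted revenue and customer retention",
    "the office moved to a new building downtown",
]
STOPWORDS = {"the", "and", "from", "to", "a", "came", "new"}


def search(query_terms: list[str], corpus: list[str], top_n: int) -> list[str]:
    """Stand-in retriever: rank documents by the number of query terms they contain."""
    scored = [(sum(t in doc.split() for t in query_terms), doc) for doc in corpus]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_n]]


def prf_expand(query: str, corpus: list[str], top_n: int = 2, num_terms: int = 2) -> list[str]:
    query_terms = query.lower().split()
    # 1. Initial query execution: treat the top-ranked documents as relevant.
    feedback_docs = search(query_terms, corpus, top_n)
    # 2. Term extraction: count new, non-stopword terms in those documents.
    counts = Counter(
        term
        for doc in feedback_docs
        for term in doc.split()
        if term not in STOPWORDS and term not in query_terms
    )
    # 3. Query refinement: append the most frequent new terms.
    return query_terms + [term for term, _ in counts.most_common(num_terms)]


expanded_terms = prf_expand("revenue", CORPUS)
# 4. Second retrieval with the expanded query.
print(expanded_terms)
print(search(expanded_terms, CORPUS, top_n=3))
```

Because the feedback documents are assumed rather than confirmed to be relevant, a poor first retrieval would feed misleading terms into step 3, which is the drawback noted below.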

Benefits and Drawbacks

- Benefits: Improves recall without requiring user intervention.

- Drawbacks: If the initial results contain irrelevant documents, the expansion may include misleading terms, reducing precision.

2. LLM-Based Query Expansion

Leveraging Large Language Models

With advancements in AI, LLMs like GPT-3 and GPT-4 can generate sophisticated query expansions by understanding context and semantics.

How LLM-Based Expansion Works

- Hypothetical Answer Generation: The LLM generates a hypothetical answer to the original query.

- Contextual Expansion: The answer provides additional context and related terms.

- Combined Query: The original query and the LLM’s output are combined to form an expanded query.

Example

Original Query: “What were the most important factors that contributed to increases in revenue?”

LLM-Generated Answer:

“In the fiscal year, several key factors contributed to the significant increase in the company’s revenue, including successful marketing campaigns, product diversification, customer satisfaction initiatives, strategic pricing, and investments in technology.”

Expanded Query:

“Original Query: What were the most important factors that contributed to increases in revenue?

Hypothetical Answer: [LLM-Generated Answer]”
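
A minimal sketch of how such an expanded query might be assembled follows. The `build_expanded_query` helper and the `fake_llm` function are hypothetical; `fake_llm` returns a canned string so the example runs without any model, and in practice it would be replaced by a call to whichever LLM client the system uses.

```python
from typing import Callable


def build_expanded_query(query: str, generate_answer: Callable[[str], str]) -> str:
    """Combine the original query with an LLM-written hypothetical answer."""
    hypothetical_answer = generate_answer(query)
    return (
        f"Original Query: {query}\n\n"
        f"Hypothetical Answer: {hypothetical_answer}"
    )


def fake_llm(query: str) -> str:
    # Stand-in for a real LLM call; returns a canned answer for the demo.
    return "Revenue rose due to marketing campaigns and strategic pricing."


expanded = build_expanded_query("What drove the increase in revenue?", fake_llm)
print(expanded)
```

Keeping the original query in the combined text anchors the expansion to what the user actually asked, even if the hypothetical answer drifts.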

Advantages

- Deep Understanding: Captures nuanced relationships and concepts.

- Customization: Tailors the expansion to the specific domain or context.

Challenges

- Computational Resources: May require significant processing power.

- Over-Expansion: Risk of adding irrelevant or too many terms.

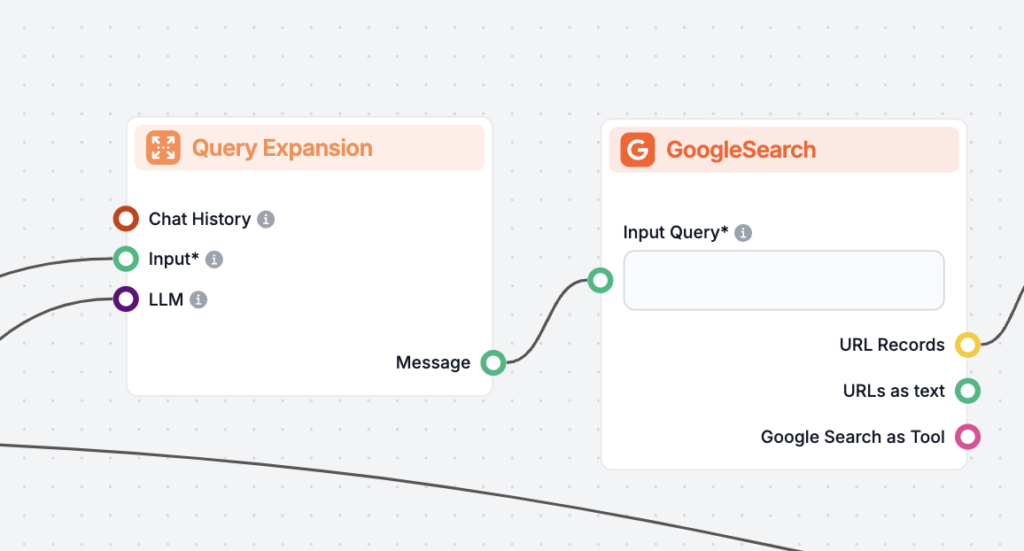

Implementing Query Expansion in RAG Systems

Step-by-Step Process

- User Query Input: The system receives the user’s original query.

- LLM-Based Expansion:

  - The system prompts the LLM to generate a hypothetical answer or related queries.

  - Example Prompt: “Provide a detailed answer or related queries for: [User’s Query]”

- Combine Queries:

  - The original query and the expanded content are combined.

  - This ensures that the expanded query remains relevant to the user’s intent.

- Use in Retrieval:

  - The expanded query is used to retrieve documents from the knowledge base.

  - This can be done using keyword search, semantic search, or a combination.

Benefits in RAG Systems

- Enhanced Retrieval: More relevant documents are retrieved, providing better context for the LLM.

- Improved User Experience: Users receive more accurate and informative responses.

Understanding Document Reranking

Why Reranking Is Necessary

- Initial Retrieval Limitations: Initial retrieval methods may rely on broad measures of similarity, which might not capture nuanced relevance.

- Overcoming Noise: Query expansion may introduce less relevant documents; reranking filters these out.

- Optimizing Context for LLMs: Providing the most relevant documents enhances the quality of the LLM’s generated responses.

Methods for Document Reranking

1. Cross-Encoder Models

Overview

Cross-encoders are neural network models that take a pair of inputs (the query and a document) and output a relevance score. Unlike bi-encoders, which encode query and document separately, cross-encoders process them jointly, allowing for richer interaction between the two.

How Cross-Encoders Work

- Input Pairing: Each document is paired with the query.

- Joint Encoding: The model encodes the pair together, capturing interactions.

- Scoring: Outputs a relevance score for each document.

- Ranking: Documents are sorted based on these scores.
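
The pairing, scoring, and ranking steps above can be sketched independently of any particular model. In this illustration the `rerank` helper and `toy_scorer` are hypothetical names, and `toy_scorer` is a simple word-overlap function rather than a cross-encoder, used only so the example runs offline. With the sentence-transformers library, a `CrossEncoder` model’s `predict` method could plausibly be passed as `score_pairs` instead.

```python
from typing import Callable, Sequence


def rerank(
    query: str,
    documents: Sequence[str],
    score_pairs: Callable[[list[tuple[str, str]]], Sequence[float]],
) -> list[tuple[str, float]]:
    """Pair each document with the query, score the pairs, and sort by score."""
    pairs = [(query, doc) for doc in documents]  # input pairing
    scores = score_pairs(pairs)                  # one relevance score per pair
    ranked = sorted(zip(documents, scores), key=lambda item: item[1], reverse=True)
    return [(doc, float(score)) for doc, score in ranked]


def toy_scorer(pairs: list[tuple[str, str]]) -> list[float]:
    # NOT a cross-encoder: a word-overlap stand-in so the sketch runs offline.
    return [
        float(len(set(q.lower().split()) & set(d.lower().split())))
        for q, d in pairs
    ]


docs = ["pricing strategy and revenue", "office relocation notice", "revenue report"]
for doc, score in rerank("revenue pricing", docs, toy_scorer):
    print(f"{score:.1f}  {doc}")
```

Sorting on the score alone (via `key=`) avoids comparing documents with each other when two scores tie.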

Advantages

- High Precision: Provides more accurate relevance assessments.

- Contextual Understanding: Captures complex relationships between query and document.

Challenges

- Computationally Intensive: Requires significant processing power, especially for large document sets.

2. ColBERT (Late Interaction Models)

What Is ColBERT?

ColBERT (Contextualized Late Interaction over BERT) is a retrieval model designed to balance efficiency and effectiveness. It uses a late interaction mechanism that allows for detailed comparison between query and document tokens without heavy computational costs.

How ColBERT Works

- Token-Level Encoding: Separately encodes query and document tokens using BERT.

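- Late Interaction: During scoring, each query token embedding is matched to its most similar document token embedding (the MaxSim operation), and these maximum similarities are summed to give the document’s score.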

- Efficiency: Enables pre-computation of document embeddings.
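
The late interaction score can be illustrated with a small, dependency-free sketch. The two-dimensional token vectors below are made up for the example; a real ColBERT model would produce higher-dimensional embeddings from BERT, and the function names here are hypothetical.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def maxsim_score(query_vecs: list[list[float]], doc_vecs: list[list[float]]) -> float:
    """Sum, over query tokens, of the best similarity to any document token."""
    return sum(max(cosine(q, d) for d in doc_vecs) for q in query_vecs)


# Made-up token embeddings; a real model would compute these.
query_tokens = [[1.0, 0.0], [0.0, 1.0]]
doc_a = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # has a close match for both query tokens
doc_b = [[1.0, 0.0], [-1.0, 0.0]]             # matches only the first query token

print(maxsim_score(query_tokens, doc_a))
print(maxsim_score(query_tokens, doc_b))
```

Since `doc_vecs` does not depend on the query, document token embeddings can be computed once and stored, leaving only the cheap similarity step for query time.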

Advantages

- Efficient Scoring: Faster than full cross-encoders.

- Effective Retrieval: Maintains high retrieval quality.

Use Cases

- Suitable for large-scale retrieval where computational resources are limited.

3. FlashRank

Overview

FlashRank is a lightweight and fast reranking library that uses state-of-the-art cross-encoders. It’s designed to integrate easily into existing pipelines and improve reranking performance with minimal overhead.

Features

- Ease of Use: Simple API for quick integration.

- Speed: Optimized for rapid reranking.

- Accuracy: Employs effective models for high-quality reranking.

Example Usage

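```python
from flashrank import Ranker, RerankRequest

query = 'What were the most important factors that contributed to increases in revenue?'

# Candidate passages from the initial retrieval step (illustrative placeholders)
documents = [
    {"id": 1, "text": "Marketing campaigns and strategic pricing drove revenue growth."},
    {"id": 2, "text": "The company relocated its headquarters during the fiscal year."},
]

ranker = Ranker(model_name="ms-marco-MiniLM-L-12-v2")
rerank_request = RerankRequest(query=query, passages=documents)
results = ranker.rerank(rerank_request)  # passages with relevance scores, best first
```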

Benefits

- Simplifies Reranking: Abstracts the complexities of model handling.

- Optimizes Performance: Balances speed and accuracy effectively.

Implementing Document Reranking in RAG Systems

Process

- Initial Retrieval:

  - Use the expanded query to retrieve a set of candidate documents.

- Reranking:

  - Apply a reranking model (e.g., Cross-Encoder, ColBERT) to assess the relevance of each document.

- Selection:

  - Select the top-ranked documents to use as context for the LLM.

Considerations

- Computational Resources: Reranking can be resource-intensive; balance is needed between performance and cost.

- Model Selection: Choose models that suit the application’s requirements in terms of accuracy and efficiency.

- Integration: Ensure that reranking fits seamlessly into the existing pipeline.

Combining Query Expansion and Document Reranking in RAG

Synergy Between Query Expansion and Reranking

Complementary Techniques

- Query Expansion broadens the search scope, retrieving more documents.

- Document Reranking refines these results, focusing on the most relevant ones.

Benefits of Combining

- Enhanced Recall and Precision: Together, they improve both the quantity and quality of retrieved documents.

- Robust Retrieval: Addresses the limitations of each method when used alone.

- Improved LLM Output: Provides better context, leading to more accurate and informative responses.

How They Work Together

- User Query Input:

  - The original query is received.

- Query Expansion:

  - The query is expanded using methods like LLM-based expansion.

  - This results in a more comprehensive search query.

- Initial Retrieval:

  - The expanded query is used to retrieve a broad set of documents.

- Document Reranking:

  - Reranking models evaluate and reorder the documents based on relevance to the original query.

- Context Provision:

  - The top-ranked documents are provided to the LLM as context.

- Response Generation:

  - The LLM generates a response informed by the most relevant documents.

Practical Implementation Steps

Example Workflow

- Query Expansion with LLM:

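```python
# llm, vector_db, and top_k are placeholders for your own LLM client,
# vector store, and cutoff.
def expand_query(query):
    prompt = f"Provide additional related queries for: '{query}'"
    # Assumes llm.generate returns a list of related query strings here
    expanded_queries = llm.generate(prompt)
    expanded_query = ' '.join([query] + expanded_queries)
    return expanded_query
```

- Initial Retrieval:

```python
expanded_query = expand_query(query)
documents = vector_db.retrieve_documents(expanded_query)
```

- Document Reranking:

```python
from sentence_transformers import CrossEncoder

cross_encoder = CrossEncoder('cross-encoder/ms-marco-MiniLM-L-6-v2')
# Score each document against the original query, not the expanded one
pairs = [[query, doc.text] for doc in documents]
scores = cross_encoder.predict(pairs)
# Sort on the score only, so tied scores never fall back to comparing documents
ranked = sorted(zip(scores, documents), key=lambda pair: pair[0], reverse=True)
ranked_docs = [doc for _, doc in ranked]
```

- Selecting Top Documents:

```python
top_documents = ranked_docs[:top_k]
```

- Generating Response with LLM:

```python
context = '\n'.join([doc.text for doc in top_documents])
prompt = f"Answer the following question using the context provided:\n\nQuestion: {query}\n\nContext:\n{context}"
response = llm.generate(prompt)
```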

Monitoring and Optimization

- Performance Metrics: Regularly measure retrieval effectiveness using metrics such as precision@k and recall@k, along with rank-aware measures like MRR or nDCG.

- Feedback Loops: Incorporate user feedback to improve query expansion and reranking strategies.

- Resource Management: Optimize computational resources, possibly by caching results or limiting the number of reranked documents.
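
To make the metrics above concrete, here is a small sketch of precision@k, recall@k, and reciprocal rank for a single query. The document IDs and relevance judgments are invented for the example; in practice they would come from a labeled evaluation set, and the per-query values would be averaged across many queries.

```python
def precision_at_k(ranked_ids: list[str], relevant_ids: set[str], k: int) -> float:
    """Fraction of the top-k results that are relevant."""
    if k <= 0:
        return 0.0
    hits = sum(doc_id in relevant_ids for doc_id in ranked_ids[:k])
    return hits / k


def recall_at_k(ranked_ids: list[str], relevant_ids: set[str], k: int) -> float:
    """Fraction of all relevant documents that appear in the top-k results."""
    if not relevant_ids:
        return 0.0
    hits = sum(doc_id in relevant_ids for doc_id in ranked_ids[:k])
    return hits / len(relevant_ids)


def reciprocal_rank(ranked_ids: list[str], relevant_ids: set[str]) -> float:
    """1 / rank of the first relevant result, or 0.0 if none is retrieved."""
    for rank, doc_id in enumerate(ranked_ids, start=1):
        if doc_id in relevant_ids:
            return 1.0 / rank
    return 0.0


# Invented ranking and relevance labels for one query.
ranked = ["d3", "d1", "d7", "d2"]
relevant = {"d1", "d2"}

print(precision_at_k(ranked, relevant, k=3))
print(recall_at_k(ranked, relevant, k=3))
print(reciprocal_rank(ranked, relevant))
```

Comparing these numbers before and after enabling expansion or reranking is one simple way to check whether each stage actually helps.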

Use Cases and Examples

Example 1: Enhancing AI Chatbots for Customer Support

Scenario

A company uses an AI chatbot to handle customer queries about their products and services. Customers often ask questions in various ways, using different terminologies or phrases.

Challenges

- Varying customer language and terminology.

- Need for accurate and prompt responses to maintain customer satisfaction.

Implementation

- Query Expansion:

  - The chatbot expands customer queries to include synonyms and related terms.

  - For example, if a customer asks, “How can I fix my gadget?”, the query is expanded to include terms like “repair device”, “troubleshoot appliance”, etc.

- Document Reranking:

  - Retrieved help articles and FAQs are reranked to prioritize the most relevant solutions.

  - Cross-encoders assess the relevance of each document to the customer’s specific issue.

Benefits

- Improved accuracy and relevance of responses.

- Enhanced customer satisfaction and reduced support resolution times.

Example 2: Optimizing AI-Powered Research Tools

Scenario

Researchers use an AI assistant to find relevant academic papers, data, and insights for their work.

Challenges

- Complex queries with specialized terminology.

- Large volumes of academic literature to sift through.

Implementation

- Query Expansion:

  - The assistant uses LLMs to expand queries with related concepts and synonyms.

  - A query like “quantum entanglement applications” is expanded to include “uses of quantum entanglement”, “quantum computing entanglement”, etc.

- Document Reranking:

  - Academic papers are reranked based on relevance to the refined query.

  - Models like ColBERT efficiently handle the large document set.

Benefits

- Researchers find the most relevant papers quickly.

- Saves time and accelerates the research process.

Example 3: AI Automation in Legal Document Retrieval

Scenario

Law firms use AI systems to retrieve legal documents, case laws, and statutes relevant to specific cases.

Challenges

- Legal queries often involve complex language and require high precision.

- Need to ensure that no pertinent documents are missed.

Implementation

- Query Expansion:

  - Expands queries using legal synonyms and related terms.

  - A query like “breach of contract remedies” is expanded to include “consequences of contract violation”, “legal actions for contract breach”, etc.

- Document Reranking:

  - Legal documents are reranked to prioritize the most relevant and authoritative sources.

  - Cross-encoders evaluate the precise relevance to the legal query.

Benefits

- Lawyers access the most pertinent legal documents efficiently.

- Enhances the quality of legal research and case preparation.

Implementation Considerations

Challenges and Mitigation Strategies

Over-Expansion in Query Expansion

- Challenge: Including too many or irrelevant terms can dilute the query, leading to less relevant results.

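- Mitigation:

  - Limit the number of expanded terms.

  - Score candidate expansions, for example by embedding similarity to the original query or, where the LLM exposes them, token probabilities, and keep only the highest-scoring ones.

  - Implement filtering mechanisms to exclude irrelevant terms.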
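
The mitigations above can be combined in one small filter. In this sketch the candidate terms and their relevance scores are invented, and `select_expansion_terms`, `max_terms`, and `min_score` are hypothetical names; where the scores come from (embedding similarity, model probabilities, or something else) is left open.

```python
def select_expansion_terms(
    query: str,
    candidates: list[tuple[str, float]],
    max_terms: int = 3,
    min_score: float = 0.5,
) -> list[str]:
    """Keep at most `max_terms` new terms whose score is at least `min_score`."""
    query_terms = set(query.lower().split())
    seen: set[str] = set()
    kept: list[str] = []
    # Consider the highest-scoring candidates first.
    for term, score in sorted(candidates, key=lambda c: c[1], reverse=True):
        term = term.lower()
        if score < min_score or term in query_terms or term in seen:
            continue  # drop weak, redundant, or duplicate terms
        seen.add(term)
        kept.append(term)
        if len(kept) == max_terms:
            break
    return kept


# Invented candidates with made-up relevance scores.
candidates = [
    ("repair", 0.9), ("device", 0.8), ("weather", 0.1),
    ("fix", 0.95), ("troubleshoot", 0.7), ("appliance", 0.6),
]
print(select_expansion_terms("fix gadget", candidates))
```

Both thresholds are tuning knobs: a lower `min_score` or higher `max_terms` favors recall, while stricter values protect precision.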

Computational Resources for Reranking

- Challenge: Reranking large sets of documents can be computationally intensive.

- Mitigation:

  - Limit the number of documents to rerank (e.g., top 100 instead of top 1000).

  - Use efficient models like MiniLM or optimized versions of cross-encoders.

  - Employ parallel processing or distributed computing frameworks.
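
Limiting the reranked set can be as simple as scoring only the head of the candidate list. The sketch below assumes candidates arrive already ordered by the initial retriever; `rerank_head` and `length_scorer` are hypothetical names, and `length_scorer` is a placeholder for a real reranking model.

```python
from typing import Callable, Sequence


def rerank_head(
    candidates: list[str],
    score_fn: Callable[[list[str]], Sequence[float]],
    rerank_limit: int = 100,
) -> list[str]:
    """Rerank only the first `rerank_limit` candidates; keep the rest in place."""
    head, tail = candidates[:rerank_limit], candidates[rerank_limit:]
    scores = score_fn(head)  # the expensive call sees at most rerank_limit docs
    ranked = sorted(zip(head, scores), key=lambda pair: pair[1], reverse=True)
    return [doc for doc, _ in ranked] + tail


def length_scorer(docs: list[str]) -> list[float]:
    # Placeholder scorer for the demo; a real system would call a reranking model.
    return [float(len(doc)) for doc in docs]


candidates = ["aa", "a", "aaa", "aaaa"]
print(rerank_head(candidates, length_scorer, rerank_limit=3))
```

The cost of the scoring call then depends on `rerank_limit` rather than on how many documents the retriever returned.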

Quality Control of Expanded Queries and Reranked Documents

- Challenge: Ensuring that the expansions and reranked documents genuinely improve retrieval effectiveness.

- Mitigation:

  - Continuously evaluate retrieval performance using appropriate metrics.

  - Incorporate user feedback to refine expansion and reranking strategies.

  - Use domain-specific models or fine-tune models on relevant data.

Practical Tips for Implementation

- Model Selection:

  - Choose models that balance accuracy and efficiency.

  - Consider domain-specific models for specialized applications.

- Parameter Tuning:

  - Adjust the number of expanded terms, documents retrieved, and documents reranked based on performance metrics.

- Caching and Reuse:

  - Cache expanded queries and reranking scores where possible to reduce repeated computations.

- Monitoring and Logging:

  - Implement robust logging to monitor the performance and identify bottlenecks.

  - Use analytics to understand usage patterns and areas for improvement.

- Ethical Considerations:

  - Be mindful of biases in LLMs and retrieval systems.

  - Ensure that expansions and reranking do not inadvertently introduce inappropriate content.
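
For the caching tip above, Python’s standard `functools.lru_cache` is one low-effort option for repeated identical queries. In this sketch `expensive_expand` is a hypothetical placeholder for a slow LLM-based expansion call, and the call counter exists only to show that the second lookup is served from the cache.

```python
from functools import lru_cache

call_count = 0


def expensive_expand(query: str) -> str:
    """Placeholder for a slow LLM-based expansion call."""
    global call_count
    call_count += 1
    return f"{query} (expanded)"


@lru_cache(maxsize=1024)
def cached_expand(query: str) -> str:
    # Identical query strings are answered from memory after the first call.
    return expensive_expand(query)


cached_expand("reset password")
cached_expand("reset password")  # served from the cache
print(call_count)
print(cached_expand.cache_info())
```

An in-process cache like this only helps within one running process and only for exact string matches; a shared cache or query normalization would be a separate design decision.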

Connection to AI, AI Automation, and Chatbots

Enhancing AI Systems with Query Expansion and Reranking

Improved Understanding and Responsiveness

- Chatbots and Virtual Assistants:

  - Better understand user intent through query expansion.

  - Provide more accurate and helpful responses by utilizing reranked documents.

AI Automation in Various Domains

- Customer Support Automation:

  - Automate responses to common queries with higher accuracy.

  - Reduce the workload on human support agents.

- Content Recommendation Systems:

  - Deliver more relevant content by understanding user preferences more deeply.

- Knowledge Management:

  - Assist organizations in organizing and accessing knowledge bases efficiently.

Benefits to AI Automation and Chatbots

- Enhanced User Experience:

  - Users receive timely and accurate information.

  - Increases user trust and engagement with AI systems.

- Operational Efficiency:

  - Reduces the need for manual intervention.

  - Streamlines processes in customer service, research, and other areas.

- Scalability:

  - Systems can handle a larger volume of queries without sacrificing quality.

Future Implications

- Continuous Learning:

  - AI systems can learn from interactions, improving query expansion and reranking over time.

- Personalization:

  - Tailor responses based on individual user behavior and preferences.

- Integration with Other Technologies:

  - Combining RAG with other AI advancements, such as multimodal inputs, to further enhance capabilities.

Research on Document Reranking with Query Expansion in RAG

- ARAGOG: Advanced RAG Output Grading

Published: 2024-04-01

Authors: Matouš Eibich, Shivay Nagpal, Alexander Fred-Ojala

This paper explores the integration of external knowledge into Large Language Model (LLM) outputs through Retrieval-Augmented Generation (RAG). The study addresses a gap in experimental comparisons of RAG methods. It evaluates various techniques and finds that Hypothetical Document Embedding (HyDE) and LLM reranking enhance retrieval precision. In contrast, approaches like Maximal Marginal Relevance and Multi-query methods did not outperform a baseline system. Sentence Window Retrieval proved effective for retrieval precision. The research highlights the potential of the Document Summary Index as a competent approach. Further information is accessible on their GitHub repository.

- Don’t Forget to Connect! Improving RAG with Graph-based Reranking

Published: 2024-05-28

Authors: Jialin Dong, Bahare Fatemi, Bryan Perozzi, Lin F. Yang, Anton Tsitsulin

This study investigates the use of graph-based reranking in RAG systems to improve the performance of LLM responses. It introduces G-RAG, a reranker utilizing graph neural networks to connect documents and enhance semantic information. The paper demonstrates that G-RAG outperforms existing approaches with a smaller computational footprint and emphasizes the importance of reranking even with advanced LLMs.

- RAG-Fusion: A New Take on Retrieval-Augmented Generation

Published: 2024-02-21

Author: Zackary Rackauckas

The research evaluates RAG-Fusion, which combines RAG with reciprocal rank fusion (RRF): it generates multiple queries, reranks the retrieved results using reciprocal rank scores, and fuses the documents and their scores. This method provides accurate and comprehensive answers by contextualizing the original query from different perspectives. However, some answers may drift off topic when the generated queries are not sufficiently relevant to the original query. The author presents the work as progress for AI and NLP applications across multiple industries.
