Model interpretability refers to the ability to understand, explain, and trust the predictions and decisions made by machine learning models. It is critical in AI applications that drive decisions, such as healthcare, finance, and autonomous systems, because it bridges the gap between complex computational models and human comprehension.

What is Model Interpretability?

Model interpretability is the degree to which a human can consistently predict the model’s results and understand the cause of a prediction. It involves understanding the relationship between input features and the outcomes produced by the model, allowing stakeholders to comprehend the reasons behind specific predictions. This understanding is crucial in building trust, ensuring compliance with regulations, and guiding decision-making processes. Lipton (2016) and Doshi-Velez & Kim (2017) frame interpretability as a way to evaluate models and obtain information from them that the training objective alone cannot convey.

Global vs. Local Interpretability

Model interpretability can be categorized into two primary types:

- Global Interpretability: This provides an overall understanding of how a model operates, giving insight into its general decision-making process. It involves understanding the model’s structure, its parameters, and the relationships it captures from the dataset. This type of interpretability is crucial for evaluating the model’s behavior across a broad range of inputs.

- Local Interpretability: This focuses on explaining individual predictions, offering insights into why a model made a particular decision for a specific instance. Local interpretability helps in understanding the model’s behavior in particular scenarios and is essential for debugging and refining models. Methods like LIME and SHAP are often used here: LIME fits a simple surrogate model to the black box’s behavior around a specific instance, while SHAP attributes that instance’s prediction to its individual features.
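
To make the distinction concrete, the sketch below computes exact Shapley-style attributions for a toy model in plain Python. The `model` function, the feature names, the data, and the use of column means as a baseline are all illustrative assumptions; the SHAP library itself relies on more elaborate estimators and background data. The attributions for a single row act as a local explanation, while averaging their absolute values over all rows gives a rough global ranking.

```python
from itertools import combinations
from math import factorial


def model(x):
    """Hypothetical black-box score with an income/age interaction."""
    income, debt, age = x
    return 2.0 * income - 1.5 * debt + 0.5 * income * age


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one instance.

    Features left out of a coalition are set to their baseline value
    (a simplifying assumption; other conventions exist).
    """
    n = len(x)

    def value(coalition):
        mixed = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return predict(mixed)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                members = set(subset)
                phi[i] += weight * (value(members | {i}) - value(members))
    return phi


# Made-up rows of (income, debt, age), already scaled
data = [
    [1.0, 0.5, 0.2],
    [2.0, 1.0, 0.4],
    [3.0, 0.0, 0.6],
    [0.0, 2.5, 0.8],
]
baseline = [sum(col) / len(data) for col in zip(*data)]

# Local: why did the model score this one row the way it did?
local_phi = shapley_values(model, data[0], baseline)

# Global: which features matter most on average across all rows?
all_phi = [shapley_values(model, row, baseline) for row in data]
global_importance = [
    sum(abs(phi[i]) for phi in all_phi) / len(all_phi) for i in range(3)
]

print("local attributions:", local_phi)
print("global importance:", global_importance)
```

The local attributions sum to `model(data[0]) - model(baseline)`. This additivity is what lets each number be read as one feature’s share of the prediction.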

Importance of Model Interpretability

Trust and Transparency

Interpretable models are more likely to be trusted by users and stakeholders. Transparency in how a model arrives at its decisions is crucial, especially in sectors like healthcare or finance, where decisions can have significant ethical and legal implications. Interpretability facilitates understanding and debugging, ensuring that models can be trusted and relied upon in critical decision-making processes.

Safety and Regulation Compliance

In high-stakes domains such as medical diagnostics or autonomous driving, interpretability is necessary to ensure safety and meet regulatory standards. For example, the European Union’s General Data Protection Regulation (GDPR) restricts solely automated decisions that significantly affect individuals and requires that they receive meaningful information about the logic involved. This is often described as a “right to explanation”, although the exact scope of that right is debated. Model interpretability helps institutions adhere to such regulations by providing clear explanations of algorithmic outputs.

Bias Detection and Mitigation

Interpretability is vital for identifying and mitigating bias in machine learning models. Models trained on biased data can inadvertently learn and propagate societal biases. By understanding the decision-making process, practitioners can identify biased features and adjust the models accordingly, thus promoting fairness and equality in AI systems.

Debugging and Model Improvement

Interpretable models facilitate the debugging process by allowing data scientists to understand and rectify errors in predictions. This understanding can lead to model improvements and enhancements, ensuring better performance and accuracy. Interpretability aids in uncovering the underlying reasons for model errors or unexpected behavior, thereby guiding further model development.

Methods for Achieving Interpretability

Several techniques and approaches can be employed to enhance model interpretability, falling into two main categories: intrinsic and post-hoc methods.

Intrinsic Interpretability

This involves using models that are inherently interpretable due to their simplicity and transparency. Examples include:

- Linear Regression: Each coefficient states how much the prediction changes for a one-unit change in the corresponding feature with the other features held fixed, making the model easy to understand and analyze.

- Decision Trees: Provide a visual and logical representation of decisions, making them easy to interpret and communicate to stakeholders.

- Rule-Based Models: Use a set of rules to make decisions, which can be directly analyzed and understood, offering clear insights into the decision-making process.
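
As a minimal sketch of what “inherently interpretable” means in practice, the following rule-based classifier returns the rule that fired together with its decision. The rules, thresholds, and field names are hypothetical and chosen only for illustration; they do not come from any real credit policy.

```python
# Hypothetical credit rules, checked in order; the first match wins.
RULES = [
    ("debt_ratio > 0.6", lambda a: a["debt_ratio"] > 0.6, "deny"),
    ("missed_payments >= 3", lambda a: a["missed_payments"] >= 3, "deny"),
    ("income >= 40000", lambda a: a["income"] >= 40000, "approve"),
]
DEFAULT_OUTCOME = "manual review"


def decide(applicant):
    """Return (outcome, reason) so every decision carries its explanation."""
    for description, condition, outcome in RULES:
        if condition(applicant):
            return outcome, description
    return DEFAULT_OUTCOME, "no rule matched"


applicant = {"debt_ratio": 0.3, "missed_payments": 0, "income": 52000}
outcome, reason = decide(applicant)
print(f"{outcome} because {reason}")
```

Because the model is the rule list itself, no separate explanation step is needed: the reason returned is exactly the logic that produced the outcome.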

Post-hoc Interpretability

These methods are applied to complex models after training to make their behavior more interpretable:

- LIME (Local Interpretable Model-agnostic Explanations): Provides local explanations by approximating the model’s predictions with interpretable models around the instance of interest, helping to understand specific predictions.

- SHAP (SHapley Additive exPlanations): Attributes each prediction to its input features using Shapley values from cooperative game theory, so that the per-feature contributions add up to the difference between the prediction and a baseline output, thus providing a unified measure of feature importance.

- Partial Dependence Plots (PDPs): Visualize the relationship between a feature and the predicted outcome, marginalizing over other features, allowing for an understanding of feature effects.

- Saliency Maps: Highlight the areas in input data that most influence the predictions, commonly used in image processing to understand model focus.
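
Of the methods above, a partial dependence curve is the simplest to compute by hand. The sketch below assumes a hypothetical fitted `model` and a tiny made-up dataset: for each grid value it overwrites one feature in every row and averages the predictions, which is the marginalization step described in the PDP bullet.

```python
def model(x):
    """Hypothetical fitted model: linear in x[0], quadratic in x[1]."""
    return 3.0 * x[0] + x[1] ** 2


def partial_dependence(predict, data, feature, grid):
    """Average prediction when `feature` is forced to each grid value."""
    curve = []
    for value in grid:
        total = 0.0
        for row in data:
            modified = list(row)       # copy so the dataset is untouched
            modified[feature] = value  # overwrite the feature of interest
            total += predict(modified)
        curve.append(total / len(data))
    return curve


# Made-up rows of (x0, x1)
data = [[0.5, 1.0], [1.5, 2.0], [2.5, 3.0]]
grid = [0.0, 1.0, 2.0]

curve = partial_dependence(model, data, feature=0, grid=grid)
for value, avg in zip(grid, curve):
    print(f"x0 = {value:.1f} -> average prediction {avg:.3f}")
```

For this toy model the curve rises by 3 per unit of `x0`, recovering the linear effect. On real data the curve can mislead when the chosen feature is strongly correlated with others, because the overwritten rows may describe combinations that never occur.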

Use Cases of Model Interpretability

Healthcare

In medical diagnostics, interpretability is crucial for validating AI predictions and ensuring that they align with clinical knowledge. Models used in diagnosing diseases or recommending treatment plans need to be interpretable to gain the trust of healthcare professionals and patients, facilitating better healthcare outcomes.

Finance

Financial institutions use machine learning for credit scoring, fraud detection, and risk assessment. Interpretability ensures compliance with regulations and helps in understanding financial decisions, making it easier to justify them to stakeholders and regulators. This is critical for maintaining trust and transparency in financial operations.

Autonomous Systems

In autonomous vehicles and robotics, interpretability is important for safety and reliability. Understanding the decision-making process of AI systems helps in predicting their behavior in real-world scenarios and ensures they operate within ethical and legal boundaries, which is essential for public safety and trust.

AI Automation and Chatbots

In AI automation and chatbots, interpretability helps in refining conversational models and ensuring they provide relevant and accurate responses. It aids in understanding the logic behind chatbot interactions and improving user satisfaction, thereby enhancing the overall user experience.

Challenges and Limitations

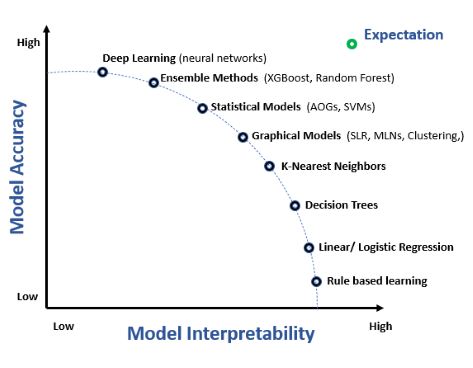

Trade-off Between Interpretability and Accuracy

There is often a trade-off between model interpretability and accuracy. Complex models like deep neural networks may offer higher accuracy but are less interpretable. Achieving a balance between the two is a significant challenge in model development, requiring careful consideration of application needs and stakeholder requirements.

Domain-Specific Interpretability

The level of interpretability required can vary significantly across different domains and applications. Models need to be tailored to the specific needs and requirements of the domain to provide meaningful and actionable insights. This involves understanding the domain-specific challenges and designing models that address these effectively.

Evaluation of Interpretability

Measuring interpretability is challenging as it is subjective and context-dependent. While some models may be interpretable to domain experts, they may not be understandable to laypersons. Developing standardized metrics for evaluating interpretability remains an ongoing research area, critical for advancing the field and ensuring the deployment of interpretable models.

Research on Model Interpretability

Model interpretability is a critical focus in machine learning because it enables people to understand and trust predictive models, particularly in fields like precision medicine and automated decision systems. The following studies explore this area:

- Hybrid Predictive Model: When an Interpretable Model Collaborates with a Black-box Model

Authors: Tong Wang, Qihang Lin (Published: 2019-05-10)

This paper introduces a framework for creating a Hybrid Predictive Model (HPM) that combines the strengths of interpretable models and black-box models. An interpretable model substitutes for the black-box model on the parts of the data where the black box’s extra predictive power is unnecessary, enhancing transparency with minimal accuracy loss. The authors propose an objective function that weighs predictive accuracy, interpretability, and model transparency. The study demonstrates the hybrid model’s effectiveness in balancing transparency and predictive performance, especially in structured and text data scenarios.

- Machine Learning Model Interpretability for Precision Medicine

Authors: Gajendra Jung Katuwal, Robert Chen (Published: 2016-10-28)

This research highlights the importance of interpretability in machine learning models for precision medicine. It uses the Model-Agnostic Explanations algorithm to make complex models, like random forests, interpretable. The study applied this approach to the MIMIC-II dataset, predicting ICU mortality with 80% balanced accuracy and elucidating individual feature impacts, crucial for medical decision-making.

- The Definitions of Interpretability and Learning of Interpretable Models

Authors: Weishen Pan, Changshui Zhang (Published: 2021-05-29)

The paper proposes a new mathematical definition of interpretability in machine learning models. It defines interpretability in terms of human recognition systems and introduces a framework for training models that are fully human-interpretable. The study showed that such models not only provide transparent decision-making processes but are also more robust against adversarial attacks.
