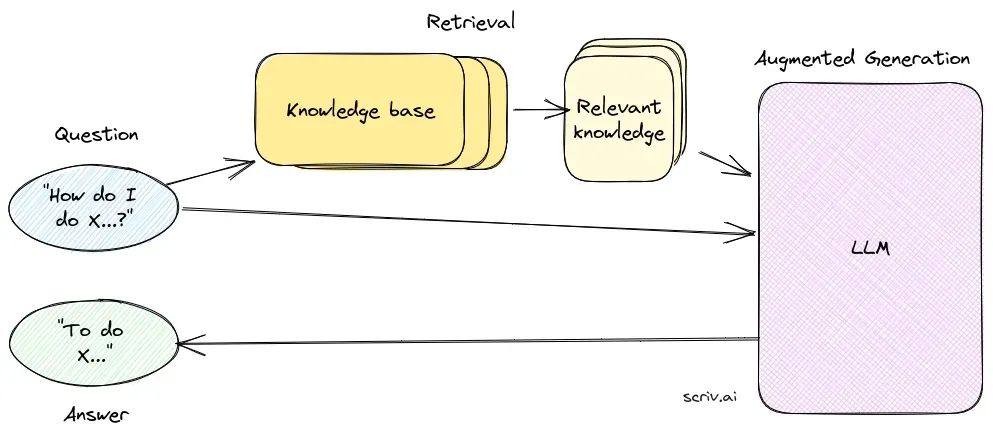

Question Answering with Retrieval-Augmented Generation (RAG) is an innovative method that combines the strengths of information retrieval and natural language generation. This hybrid approach enhances the capabilities of large language models (LLMs) by supplementing their responses with relevant, up-to-date information retrieved from external data sources. Unlike traditional methods that rely solely on pre-trained models, RAG dynamically integrates external data, allowing systems to provide more accurate and contextually relevant answers, particularly in domains requiring the latest information or specialized knowledge.

RAG improves LLM output by ensuring that answers draw not only on what the model learned during training but also on sources retrieved at query time. This matters most for question-answering tasks in fields where information changes frequently.

Core Components of RAG

1. Retrieval Component

The retrieval component is responsible for sourcing relevant information from vast datasets, typically stored in a vector database. This component employs semantic search techniques to identify and extract text segments or documents that are highly relevant to the user’s query.

- Vector Database: A specialized database that stores vector representations of documents. These embeddings facilitate efficient search and retrieval by matching the semantic meaning of the user’s query with relevant text segments.

- Semantic Search: Utilizes vector embeddings to find documents based on semantic similarities rather than simple keyword matching, improving the relevance and accuracy of retrieved information.
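As a minimal sketch of semantic search, the snippet below ranks stored vectors by cosine similarity to a query vector. The three-dimensional vectors and document IDs are hand-made placeholders (assumptions for illustration); a real system would obtain embeddings from an embedding model and delegate the search to a vector database.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec, index, k=2):
    """Return the IDs of the k stored vectors most similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), doc_id)
              for doc_id, vec in index.items()]
    scored.sort(reverse=True)
    return [doc_id for _, doc_id in scored[:k]]


# Hypothetical toy index: document ID -> embedding vector.
index = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "api-reference": [0.0, 0.1, 0.9],
}
query_vec = [0.8, 0.2, 0.0]  # stands in for an embedded user query

print(top_k(query_vec, index, k=2))
```

Because the ranking depends on vector direction rather than shared keywords, a query can match a document that uses different wording, provided the embedding model places them close together.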

2. Generation Component

The generation component, usually an LLM such as GPT-3, synthesizes an answer by combining the user’s original query with the retrieved context. (Encoder-only models such as BERT are better suited to producing embeddings for retrieval than to generating text.) This component is responsible for producing coherent and contextually appropriate responses.

- Language Models (LLMs): Trained to generate text based on input prompts, LLMs in RAG systems use retrieved documents as context to enhance the quality and relevance of generated answers.
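One common way to hand retrieved documents to the model is to place them in the prompt ahead of the question. The sketch below shows that idea; the function name `build_prompt` and the instruction wording are illustrative assumptions, not a prescribed template.

```python
def build_prompt(question, passages):
    """Combine retrieved passages and the user's question into one prompt."""
    # Number the passages so the model (and the reader) can refer to them.
    context = "\n\n".join(
        f"[{i}] {passage}" for i, passage in enumerate(passages, start=1)
    )
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


prompt = build_prompt(
    "How long does shipping take?",
    ["Standard shipping takes three to five business days.",
     "Express shipping is available at checkout."],
)
print(prompt)
```

The resulting string would then be sent to whichever LLM the system uses.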

Workflow of a RAG System

- Document Preparation: The system begins by loading a large corpus of documents, converting them into a format suitable for analysis. This often involves splitting documents into smaller, manageable chunks.

- Vector Embedding: Each document chunk is converted into a vector representation using embeddings generated by language models. These vectors are stored in a vector database to facilitate efficient retrieval.

- Query Processing: Upon receiving a user’s query, the system converts the query into a vector and performs a similarity search against the vector database to identify relevant document chunks.

- Contextual Answer Generation: The retrieved document chunks are combined with the user’s query and fed into the LLM, which generates a final, contextually enriched answer.

- Output: The system outputs an answer that is both accurate and relevant to the query, enriched with contextually appropriate information.
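The workflow above can be sketched end to end in a few functions. This is a toy illustration under stated assumptions: `embed` uses bag-of-words counts instead of a learned embedding model, the index is a plain Python list instead of a vector database, and `generate` is a placeholder where an LLM call would go. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def chunk_text(text, max_words=40):
    """Document preparation: split text into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words])
            for i in range(0, len(words), max_words)]


def embed(text):
    """Toy embedding (assumption): a sparse word-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[term] * b[term] for term in a if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(documents, max_words=40):
    """Vector embedding: store each chunk alongside its vector."""
    return [(chunk, embed(chunk))
            for doc in documents
            for chunk in chunk_text(doc, max_words)]


def retrieve(query, index, k=2):
    """Query processing: embed the query and return the k most similar chunks."""
    query_vec = embed(query)
    ranked = sorted(index, key=lambda item: cosine(query_vec, item[1]),
                    reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


def generate(prompt):
    """Placeholder (assumption): a real system would call an LLM here."""
    return f"(LLM answer based on a {len(prompt)}-character prompt)"


def answer(query, index, k=2):
    """Contextual answer generation: retrieved chunks plus query go to the LLM."""
    passages = retrieve(query, index, k)
    prompt = ("Context:\n" + "\n".join(passages)
              + f"\n\nQuestion: {query}\nAnswer:")
    return generate(prompt), passages


documents = [
    "Refunds are issued within 14 days of purchase when the item is unused.",
    "Standard shipping takes three to five business days within the country.",
]
index = build_index(documents)
reply, sources = answer("How long does shipping take?", index, k=1)
print(sources)
print(reply)
```

Returning the source passages together with the answer makes it easier to check what the generated text was grounded in.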

Advantages of RAG

- Improved Accuracy: By retrieving relevant context, RAG reduces the risk of generating incorrect or outdated answers, a common issue with standalone LLMs.

- Dynamic Content: RAG systems can integrate the latest information from updated knowledge bases without retraining the model, making them well suited to domains that require current data.

- Enhanced Relevance: The retrieval process grounds generated answers in the specific context of the query, improving response quality and relevance.

Use Cases

- Chatbots and Virtual Assistants: RAG-powered systems enhance chatbots and virtual assistants by providing accurate and context-aware responses, improving user interaction and satisfaction.

- Customer Support: In customer support applications, RAG systems can retrieve relevant policy documents or product information to provide precise answers to user queries.

- Content Creation: RAG models can generate documents and reports by integrating retrieved information, making them useful for automated content generation tasks.

- Educational Tools: In education, RAG systems can power learning assistants that provide explanations and summaries based on the latest educational content.

Technical Implementation

Implementing a RAG system involves several technical steps:

- Vector Storage and Retrieval: Use a managed vector database such as Pinecone, or a similarity-search library such as FAISS, to store and retrieve document embeddings efficiently.

- Language Model Integration: Integrate a hosted LLM such as GPT-3 through its provider's API, or run open or custom models with frameworks like HuggingFace Transformers, to handle generation.

- Pipeline Configuration: Set up a pipeline that manages the flow from document retrieval to answer generation, ensuring smooth integration of all components.
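One way to keep these pieces loosely coupled is to have the pipeline depend only on two callables, one for retrieval and one for generation, so that the vector store or model can be swapped without touching the flow. The sketch below assumes this design; `RagPipeline`, `fake_retrieve`, and `fake_generate` are hypothetical names, and the stubs stand in for a real vector-store query and a real LLM call.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class RagPipeline:
    """Wires a retriever and a generator together behind one run() call."""
    retrieve: Callable[[str, int], List[str]]  # e.g. wraps a vector-store query
    generate: Callable[[str], str]             # e.g. wraps an LLM API call
    top_k: int = 3

    def run(self, question: str) -> str:
        passages = self.retrieve(question, self.top_k)
        prompt = ("Context:\n" + "\n".join(passages)
                  + f"\n\nQuestion: {question}\nAnswer:")
        return self.generate(prompt)


corpus = [
    "Refunds are issued within 14 days of purchase.",
    "Standard shipping takes three to five business days.",
]


def fake_retrieve(question: str, k: int) -> List[str]:
    """Stub retriever (assumption): returns the first k corpus entries."""
    return corpus[:k]


def fake_generate(prompt: str) -> str:
    """Stub generator (assumption): echoes the first context passage."""
    return prompt.splitlines()[1]


pipeline = RagPipeline(retrieve=fake_retrieve, generate=fake_generate, top_k=1)
print(pipeline.run("What is the refund window?"))
```

Stubs like these also make the pipeline testable before any external service is connected.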

Challenges and Considerations

- Cost and Resource Management: RAG systems can be resource-intensive, requiring optimization to manage computational costs effectively.

- Factual Accuracy: Ensuring that retrieved information is accurate and up-to-date is crucial to prevent the generation of misleading answers.

- Complexity in Setup: The initial setup of RAG systems can be complex, involving multiple components that need careful integration and optimization.
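One simple lever for cost control is capping how much retrieved text is sent to the model. The sketch below keeps passages, in ranked order, until a budget is reached. It approximates token counts by whitespace-separated words, which is an assumption for illustration; a real system would count tokens with the model's own tokenizer.

```python
def fit_to_budget(passages, max_tokens):
    """Keep passages in ranked order until the approximate budget is used up."""
    selected, used = [], 0
    for passage in passages:
        cost = len(passage.split())  # rough proxy for the token count
        if used + cost > max_tokens:
            break
        selected.append(passage)
        used += cost
    return selected


ranked_passages = ["a b c", "d e", "f g h i"]
print(fit_to_budget(ranked_passages, max_tokens=5))
```

Stopping at the first passage that does not fit keeps the highest-ranked context and avoids skipping ahead to lower-ranked passages.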

Research on Question Answering with Retrieval-Augmented Generation (RAG)

Recent research has explored the efficacy and optimization of RAG in various contexts.

- In Defense of RAG in the Era of Long-Context Language Models: This paper argues for the continued relevance of RAG despite the emergence of long-context language models, which integrate longer text sequences into their processing. The authors propose an Order-Preserve Retrieval-Augmented Generation (OP-RAG) mechanism that optimizes RAG’s performance in handling long-context question-answering tasks. They demonstrate through experiments that OP-RAG can achieve high answer quality with fewer tokens compared to long-context models.

- CLAPNQ: Cohesive Long-form Answers from Passages in Natural Questions for RAG systems: This study introduces ClapNQ, a benchmark dataset designed for evaluating RAG systems in generating cohesive long-form answers. The dataset focuses on answers that are grounded in specific passages, without hallucinations, and encourages RAG models to adapt to concise and cohesive answer formats. The authors provide baseline experiments that reveal potential areas for improvement in RAG systems.

- Optimizing Retrieval-Augmented Generation with Elasticsearch for Enhanced Question-Answering Systems: The research integrates Elasticsearch into the RAG framework to boost the efficiency and accuracy of question-answering systems. Using the Stanford Question Answering Dataset (SQuAD) version 2.0, the study compares various retrieval methods and highlights the advantages of the ES-RAG scheme in terms of retrieval efficiency and accuracy, outperforming other methods by 0.51 percentage points. The paper suggests further exploration of the interaction between Elasticsearch and language models to enhance system responses.
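The summary of OP-RAG above does not spell out the mechanism. One reading of "order-preserving", assumed here rather than taken from the text, is that the top-scoring chunks are selected by relevance but then presented to the model in the order they appear in the source document. The sketch below illustrates that reading only; the function name and the tuple layout are hypothetical.

```python
def order_preserving_top_k(scored_chunks, k):
    """Pick the k highest-scoring chunks, then restore document order.

    scored_chunks: list of (position_in_document, relevance_score, text).
    """
    top = sorted(scored_chunks, key=lambda chunk: chunk[1], reverse=True)[:k]
    return [text for _, _, text in sorted(top, key=lambda chunk: chunk[0])]


chunks = [
    (0, 0.20, "intro"),
    (1, 0.90, "method"),
    (2, 0.10, "aside"),
    (3, 0.95, "results"),
]
print(order_preserving_top_k(chunks, k=2))
```

A relevance-ordered retriever would return "results" before "method" here; restoring document order keeps the passages in the sequence the original text used.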
