Transformers are a neural network architecture that has reshaped artificial intelligence (AI), particularly natural language processing (NLP). Introduced in the 2017 paper “Attention Is All You Need” by Vaswani et al., transformers have quickly become a foundational element of AI models because they map input sequences to output sequences efficiently and in parallel.

Key Features

- Transformer Architecture: Unlike recurrent neural networks (RNNs), which read a sequence one step at a time, and convolutional neural networks (CNNs), which see only a local window of the input at each layer, transformers rely on a mechanism known as self-attention. Every position can attend to every other position within a single layer, so the whole sequence is processed at once rather than step by step.

- Parallel Processing: Because no position has to wait for the result of the previous one, computation across the sequence can run in parallel during training. This speeds up training considerably and makes very large models practical. It is a major departure from RNNs, whose processing is inherently sequential and therefore slower.

- Attention Mechanism: Central to the transformer’s design, the attention mechanism lets the model weigh the importance of every part of the input when computing the representation of each position. Because any two positions are connected directly, long-range dependencies are captured more effectively than in models that pass information along step by step.

Components of Transformer Architecture

Input Embeddings

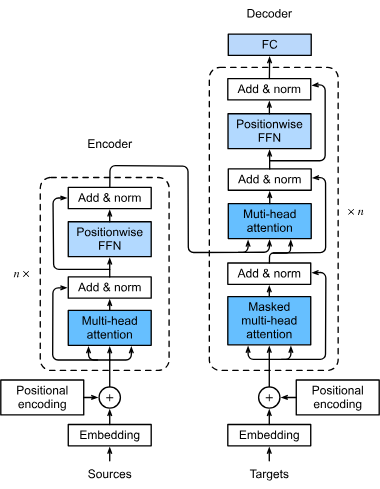

The first step in a transformer model’s processing pipeline involves converting words or tokens in an input sequence into numerical vectors, known as embeddings. These embeddings capture semantic meanings and are crucial for the model to understand the relationships between tokens. This transformation is essential as it allows the model to process text data in a mathematical form.
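The lookup described above can be sketched as a table with one row per token. This is a minimal illustration, not a real tokenizer: the vocabulary, the embedding width `d_model`, and the randomly initialized table are all assumptions made for the example (in a trained model the rows are learned).

```python
import numpy as np

# Hypothetical toy vocabulary; real models use learned subword tokenizers.
vocab = {"<unk>": 0, "the": 1, "cat": 2, "sat": 3}
d_model = 4  # embedding width, chosen only for illustration

rng = np.random.default_rng(0)
# One row per vocabulary entry; in training these rows are learned parameters.
embedding_table = rng.normal(size=(len(vocab), d_model))

def embed(tokens):
    """Map a list of string tokens to a (len(tokens), d_model) array."""
    ids = [vocab.get(tok, vocab["<unk>"]) for tok in tokens]
    return embedding_table[ids]

vectors = embed(["the", "cat", "sat"])
print(vectors.shape)  # (3, 4)
```

Tokens outside the vocabulary fall back to the `<unk>` row, which is one common way to handle unknown words in a sketch like this.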

Positional Encoding

Self-attention by itself is order-agnostic: it treats the input as a set of tokens, so shuffling the tokens would simply shuffle the outputs. Positional encoding is therefore used to inject information about the position of each token in the sequence. This is vital for tasks like language translation, where meaning depends on word order.
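One way to inject position information is the fixed sinusoidal scheme from the original paper, sketched below. The text above does not say which scheme is meant, so treat this as one possible choice; learned position embeddings are a common alternative. The function name and the sizes are illustrative.

```python
import numpy as np

def sinusoidal_positions(seq_len, d_model):
    """Return a (seq_len, d_model) array of sinusoidal position encodings.

    Assumes d_model is even so sine and cosine columns pair up.
    """
    positions = np.arange(seq_len)[:, None]          # (seq_len, 1)
    even_dims = np.arange(0, d_model, 2)[None, :]    # (1, d_model / 2)
    angles = positions / np.power(10000.0, even_dims / d_model)
    encoding = np.zeros((seq_len, d_model))
    encoding[:, 0::2] = np.sin(angles)  # even dimensions
    encoding[:, 1::2] = np.cos(angles)  # odd dimensions
    return encoding

pe = sinusoidal_positions(seq_len=5, d_model=8)
print(pe.shape)  # (5, 8)
```

The encoding is simply added to the token embeddings, so each row of the input carries both what the token is and where it sits.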

Multi-Head Attention

The multi-head attention mechanism lets the model attend to different parts of the input sequence at the same time. It runs several attention operations, called heads, in parallel, each with its own learned projections of the input, and then combines their outputs. Different heads can therefore capture different relationships and dependencies in the data.
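A minimal sketch of multi-head attention follows, assuming the scaled dot-product form from the original paper. The weight matrices are random stand-ins for learned parameters, and the sizes (`d_model = 8`, two heads) are chosen only to keep the example small.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads):
    seq_len, d_model = x.shape
    d_head = d_model // num_heads  # assumes d_model is divisible by num_heads

    def split_heads(t):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return t.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split_heads(x @ w_q), split_heads(x @ w_k), split_heads(x @ w_v)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)  # (heads, seq, seq)
    weights = softmax(scores, axis=-1)                    # one attention map per head
    heads = weights @ v                                   # (heads, seq, d_head)
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ w_o, weights

rng = np.random.default_rng(0)
seq_len, d_model, num_heads = 4, 8, 2
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v, w_o = (rng.normal(size=(d_model, d_model)) for _ in range(4))
out, weights = multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads)
print(out.shape, weights.shape)  # (4, 8) (2, 4, 4)
```

Each head produces its own 4 x 4 attention map, which is what allows different heads to specialize in different relationships.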

Encoder-Decoder Structure

The original transformer follows an encoder-decoder architecture, although some later models, such as BERT and GPT, keep only the encoder or only the decoder:

- Encoder: Processes the input sequence and generates a representation that captures its essential features.

- Decoder: Takes this representation and generates the output sequence, often in a different domain or language. This structure is particularly effective in tasks such as language translation.

Feedforward Neural Networks

After the attention mechanism, the data passes through a feedforward neural network that is applied to each position independently. It applies non-linear transformations that help the model learn complex patterns and refine the representation produced by attention.
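As a rough sketch, the feedforward step can be written as two linear layers with a non-linearity in between. The ReLU activation and the hidden width `d_ff` are assumptions for illustration; the weights are random stand-ins for learned parameters.

```python
import numpy as np

def feed_forward(x, w1, b1, w2, b2):
    """Apply the same two-layer network to every row (position) of x."""
    hidden = np.maximum(0.0, x @ w1 + b1)  # ReLU non-linearity
    return hidden @ w2 + b2

rng = np.random.default_rng(0)
d_model, d_ff = 8, 32  # illustrative sizes
x = rng.normal(size=(4, d_model))
w1, b1 = rng.normal(size=(d_model, d_ff)), np.zeros(d_ff)
w2, b2 = rng.normal(size=(d_ff, d_model)), np.zeros(d_model)

y = feed_forward(x, w1, b1, w2, b2)
print(y.shape)  # (4, 8)
```

Because the network acts on each row separately, running it on one position alone gives the same result as that position's row in the full output.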

Layer Normalization and Residual Connections

These techniques are incorporated to stabilize and speed up the training process. Layer normalization rescales each token’s activations to zero mean and unit variance across the feature dimension, followed by a learned scale and shift, which keeps values in a stable range from layer to layer. Residual connections add a sublayer’s input to its output, giving gradients a direct path through the network so that they are less likely to vanish in deep stacks.
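The two techniques can be sketched together as an "add and normalize" step. This assumes the post-norm ordering of the original paper (normalize after adding the residual); many later models normalize before the sublayer instead. The `sublayer` argument is a placeholder for attention or the feedforward network.

```python
import numpy as np

def layer_norm(x, gamma, beta, eps=1e-5):
    """Normalize each row to zero mean and unit variance, then scale and shift."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return gamma * (x - mean) / np.sqrt(var + eps) + beta

def add_and_norm(x, sublayer, gamma, beta):
    """Residual connection around a sublayer, followed by layer normalization."""
    return layer_norm(x + sublayer(x), gamma, beta)

rng = np.random.default_rng(0)
d_model = 8
x = rng.normal(loc=3.0, scale=10.0, size=(4, d_model))
gamma, beta = np.ones(d_model), np.zeros(d_model)  # learned in a real model

normed = layer_norm(x, gamma, beta)
out = add_and_norm(x, lambda t: 0.5 * t, gamma, beta)  # toy sublayer for illustration
print(out.shape)  # (4, 8)
```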

How Transformers Work

Transformers operate on sequences of data, such as the words in a sentence or other sequential information. They apply self-attention to determine how relevant each part of the sequence is to every other part, enabling the model to focus on the elements that matter most for the output.

Self-Attention Mechanism

In self-attention, every token in the sequence is compared with every other token to compute attention scores. The scores are normalized into weights that indicate how much each token should draw on the others, and each token’s new representation is the corresponding weighted combination. This is pivotal for understanding context and meaning in language tasks.
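The comparison of every token with every other token can be sketched as scaled dot-product attention, the form used in the original paper. Learned query, key, and value projections are left out here so that the score matrix is easy to inspect; passing the same array for all three arguments is a simplification for illustration.

```python
import numpy as np

def scaled_dot_product_attention(q, k, v):
    """Return the attended values and the (seq_len, seq_len) weight matrix."""
    d_k = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)                       # every token vs. every token
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)  # softmax over each row
    return weights @ v, weights

# Three identical tokens: no token is more relevant than another,
# so every row of the weight matrix is uniform.
x = np.ones((3, 4))
out, weights = scaled_dot_product_attention(x, x, x)
print(weights)
```

Row i of `weights` shows how much token i draws on each token in the sequence, and each row sums to 1.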

Transformer Blocks

These are the building blocks of a transformer model. Each block combines a self-attention layer and a feedforward layer, with a residual connection and layer normalization around each. Multiple blocks are stacked to form deep models capable of capturing intricate patterns in data. This modular design allows transformers to scale with the complexity of the task.
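A stack of blocks can be sketched as below. This is a simplified single-head version with random weights, assuming residual connections and layer normalization around each sublayer as in the original paper; the helper names and sizes are hypothetical.

```python
import numpy as np

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def layer_norm(x, eps=1e-5):
    # Learned scale and shift are omitted to keep the sketch short.
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def self_attention(x, p):
    q, k, v = x @ p["w_q"], x @ p["w_k"], x @ p["w_v"]
    weights = softmax(q @ k.T / np.sqrt(q.shape[-1]))
    return (weights @ v) @ p["w_o"]

def feed_forward(x, p):
    return np.maximum(0.0, x @ p["w_1"]) @ p["w_2"]

def transformer_block(x, p):
    x = layer_norm(x + self_attention(x, p))  # attention sublayer
    x = layer_norm(x + feed_forward(x, p))    # feedforward sublayer
    return x

def init_params(rng, d_model, d_ff):
    shapes = {"w_q": (d_model, d_model), "w_k": (d_model, d_model),
              "w_v": (d_model, d_model), "w_o": (d_model, d_model),
              "w_1": (d_model, d_ff), "w_2": (d_ff, d_model)}
    return {name: rng.normal(scale=0.1, size=shape) for name, shape in shapes.items()}

rng = np.random.default_rng(0)
d_model, d_ff, num_blocks = 8, 32, 3
stack = [init_params(rng, d_model, d_ff) for _ in range(num_blocks)]

x = rng.normal(size=(5, d_model))
h = x
for params in stack:
    h = transformer_block(h, params)  # output shape matches input shape
print(h.shape)  # (5, 8)
```

Because every block maps a `(seq_len, d_model)` array to an array of the same shape, blocks can be stacked to any depth without changing the surrounding code.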

Advantages Over Other Models

Efficiency and Scalability

Transformers train more efficiently than RNNs because they process entire sequences at once rather than token by token, which maps well onto parallel hardware such as GPUs. This efficiency enables scaling up to very large models, such as GPT-3, which has 175 billion parameters, and to training on vast amounts of data. The trade-off is that the cost of self-attention grows quadratically with sequence length.

Handling Long-Range Dependencies

Recurrent models struggle with long-range dependencies because information from early tokens must pass through every intermediate step before it reaches later ones. Transformers overcome this limitation through self-attention, which connects any two positions directly regardless of how far apart they are. This makes them particularly effective for tasks that require understanding context over long text sequences.

Versatility in Applications

While initially designed for NLP tasks, transformers have been adapted for various applications, including computer vision, protein folding, and even time-series forecasting. This versatility showcases the broad applicability of transformers across different domains.

Use Cases of Transformers

Natural Language Processing

Transformers have significantly improved the performance of NLP tasks such as translation, summarization, and sentiment analysis. Models like BERT and GPT are prominent examples that leverage transformer architecture to understand and generate human-like text, setting new benchmarks in NLP.

Machine Translation

In machine translation, transformers excel by understanding the context of words within a sentence, allowing for more accurate translations compared to previous methods. Their ability to process entire sentences at once enables more coherent and contextually accurate translations.

Protein Structure Analysis

Transformers can model the sequences of amino acids in proteins, aiding in the prediction of protein structures, which is crucial for drug discovery and understanding biological processes. This application underscores the potential of transformers in scientific research.

Time-Series Forecasting

By adapting the transformer architecture, it is possible to predict future values in time-series data, such as electricity demand forecasting, by analyzing past sequences. This opens new possibilities for transformers in fields like finance and resource management.
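Before a sequence model can forecast, the series has to be cut into past-window and future-target pairs. The sketch below shows only that data preparation step, under assumed names (`make_windows`, `context_len`, `horizon`) and with made-up demand values; the forecasting model itself is not shown.

```python
import numpy as np

def make_windows(series, context_len, horizon):
    """Slice a 1-D series into (past window, future target) training pairs."""
    inputs, targets = [], []
    for start in range(len(series) - context_len - horizon + 1):
        end = start + context_len
        inputs.append(series[start:end])           # what the model sees
        targets.append(series[end:end + horizon])  # what it should predict
    return np.array(inputs), np.array(targets)

# Made-up hourly demand values, for illustration only.
demand = np.array([10.0, 12.0, 13.0, 15.0, 14.0, 16.0, 18.0])
x, y = make_windows(demand, context_len=4, horizon=1)
print(x.shape, y.shape)  # (3, 4) (3, 1)
```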

Types of Transformer Models

Bidirectional Encoder Representations from Transformers (BERT)

BERT models are designed to understand the context of a word by looking at the words on both its left and its right, making them highly effective for tasks that require understanding word relationships in a sentence. This bidirectional approach allows BERT to capture context more effectively than unidirectional models, which see only the preceding words.

Generative Pre-trained Transformers (GPT)

GPT models are autoregressive, generating text by predicting the next word in a sequence based on the preceding words. They are widely used in applications like text completion and dialogue generation, demonstrating their capability to produce human-like text.
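The autoregressive loop can be sketched with a toy stand-in for the model. Here `toy_model` is a hypothetical fixed score table that looks only at the last token, which is a deliberate simplification; a real GPT model conditions on all preceding tokens and is usually sampled from rather than decoded greedily.

```python
import numpy as np

vocab = ["<eos>", "the", "cat", "sat", "down"]

# Hypothetical stand-in for a trained model: row i holds the scores
# for whichever token follows vocab[i].
next_token_scores = np.array([
    [5, 0, 0, 0, 0],  # after <eos>
    [0, 0, 5, 0, 0],  # after "the"  -> "cat"
    [0, 0, 0, 5, 0],  # after "cat"  -> "sat"
    [0, 0, 0, 0, 5],  # after "sat"  -> "down"
    [5, 0, 0, 0, 0],  # after "down" -> <eos>
])

def toy_model(token_ids):
    """Return scores over the vocabulary for the next token."""
    return next_token_scores[token_ids[-1]]

def generate(prompt_ids, max_new_tokens):
    """Greedy decoding: repeatedly append the highest-scoring next token."""
    ids = list(prompt_ids)
    for _ in range(max_new_tokens):
        next_id = int(np.argmax(toy_model(ids)))
        ids.append(next_id)  # the new token becomes part of the context
        if vocab[next_id] == "<eos>":
            break
    return ids

ids = generate([vocab.index("the")], max_new_tokens=10)
print(" ".join(vocab[i] for i in ids))  # the cat sat down <eos>
```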

Vision Transformers

Initially developed for NLP, transformers have been adapted for computer vision tasks. Vision transformers split an image into fixed-size patches and treat the patches as a sequence of tokens, allowing them to apply transformer techniques to visual inputs. This adaptation has led to advancements in image recognition and processing.
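One common way to turn an image into a sequence is to cut it into non-overlapping square patches and flatten each patch into a vector. The sketch below assumes that scheme; the function name, image size, and patch size are illustrative, and the linear projection that usually follows is omitted.

```python
import numpy as np

def image_to_patches(image, patch_size):
    """Split an (H, W, C) image into a sequence of flattened square patches."""
    h, w, c = image.shape
    assert h % patch_size == 0 and w % patch_size == 0, "image must tile evenly"
    rows, cols = h // patch_size, w // patch_size
    patches = image.reshape(rows, patch_size, cols, patch_size, c)
    patches = patches.transpose(0, 2, 1, 3, 4)  # group pixels by patch
    return patches.reshape(rows * cols, patch_size * patch_size * c)

image = np.arange(8 * 8 * 3, dtype=float).reshape(8, 8, 3)  # dummy 8x8 RGB image
patches = image_to_patches(image, patch_size=4)
print(patches.shape)  # (4, 48)
```

Each of the four rows is one 4 x 4 patch, ordered left to right and top to bottom, and plays the role that a token embedding plays in text.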

Challenges and Future Directions

Computational Demands

Training large transformer models requires substantial computational resources, often involving vast datasets and powerful hardware like GPUs. This presents a challenge in terms of cost and accessibility for many organizations.

Ethical Considerations

As transformers become more prevalent, issues such as bias in AI models and ethical use of AI-generated content are becoming increasingly important. Researchers are working on methods to mitigate these issues and ensure responsible AI development, highlighting the need for ethical frameworks in AI research.

Expanding Applications

The versatility of transformers continues to open new avenues for research and application, from enhancing AI-driven chatbots to improving data analysis in fields like healthcare and finance. The future of transformers holds exciting possibilities for innovation across various industries.

In conclusion, transformers represent a significant advancement in AI technology for processing sequential data. Their attention-based architecture and parallel efficiency have set a new standard in the field. Whether it’s language understanding, scientific research, or visual data processing, transformers continue to redefine what’s possible in artificial intelligence.

Research on Transformers in AI

Transformers have revolutionized the field of artificial intelligence, particularly in natural language processing and understanding. The paper “AI Thinking: A framework for rethinking artificial intelligence in practice” by Denis Newman-Griffis (published in 2024) explores a novel conceptual framework called AI Thinking. This framework models key decisions and considerations involved in AI use across disciplinary perspectives, addressing competencies in motivating AI use, formulating AI methods, and situating AI in sociotechnical contexts. It aims to bridge divides between academic disciplines and reshape the future of AI in practice.

Another significant contribution is seen in “Artificial intelligence and the transformation of higher education institutions” by Evangelos Katsamakas et al. (published in 2024), which uses a complex systems approach to map the causal feedback mechanisms of AI transformation in higher education institutions (HEIs). The study discusses the forces driving AI transformation and its impact on value creation, emphasizing the need for HEIs to adapt to AI technology advances while managing academic integrity and employment changes.

In the realm of software development, the paper “Can Artificial Intelligence Transform DevOps?” by Mamdouh Alenezi and colleagues (published in 2022) examines the intersection of AI and DevOps. The study highlights how AI can enhance the functionality of DevOps processes, facilitating efficient software delivery. It underscores the practical implications for software developers and businesses in leveraging AI to transform DevOps practices.
