What is OpenAI Whisper?

OpenAI Whisper is an automatic speech recognition (ASR) system developed by OpenAI. Designed to transcribe spoken language into written text, Whisper uses deep learning and a large, diverse training dataset to achieve high accuracy across a range of speech-processing tasks. Released in September 2022, Whisper has become a widely used tool in the field of AI, offering robust performance in multilingual speech recognition, language identification, and speech translation.

Understanding OpenAI Whisper

Is Whisper a Model or a System?

OpenAI Whisper can be considered both a model and a system, depending on the context.

- As a model, Whisper comprises neural network architectures specifically designed for ASR tasks. It includes several models of varying sizes, ranging from 39 million to 1.55 billion parameters. Larger models offer better accuracy but require more computational resources.

- As a system, Whisper encompasses not only the model architecture but also the entire infrastructure and processes surrounding it. This includes the training data, pre-processing methods, and the integration of various tasks it can perform, such as language identification and translation.

Core Capabilities of Whisper

Whisper’s primary function is to transcribe speech into text output. It excels in:

- Multilingual Speech Recognition: Supports 99 languages, making it a powerful tool for global applications.

- Speech Translation: Capable of translating speech from any supported language into English text.

- Language Identification: Automatically detects the language spoken without prior specification.

- Robustness to Accents and Background Noise: Trained on diverse data, Whisper handles varied accents and noisy environments effectively.

How Does OpenAI Whisper Work?

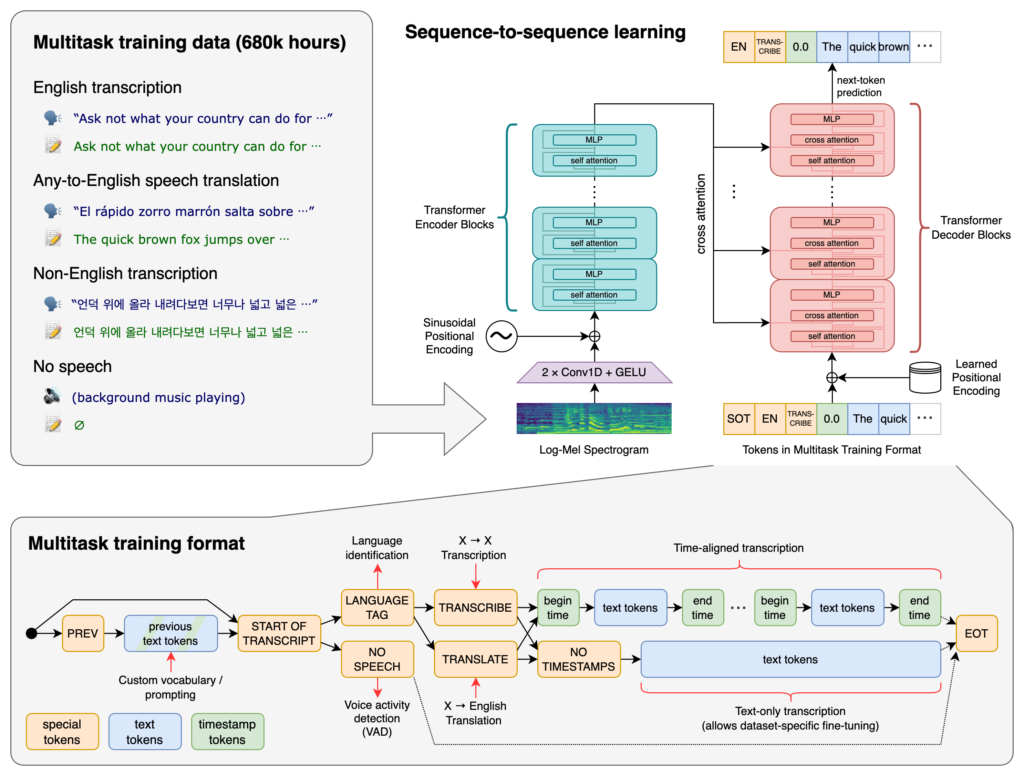

Transformer Architecture

At the heart of Whisper lies the Transformer architecture, specifically an encoder-decoder model. Transformers are neural networks that excel in processing sequential data and understanding context over long sequences. Introduced in the “Attention is All You Need” paper in 2017, Transformers have become foundational in many NLP tasks.

Whisper’s process involves:

- Audio Preprocessing: Input audio is segmented into 30-second chunks and converted into a log-Mel spectrogram, capturing the frequency and intensity of the audio signals over time.

- Encoder: Processes the spectrogram to generate a numerical representation of the audio.

- Decoder: Utilizes a language model to predict the sequence of text tokens (words or subwords) corresponding to the audio input.

- Use of Special Tokens: Incorporates special tokens to handle tasks like language identification, timestamps, and task-specific directives (e.g., transcribe or translate).
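The preprocessing step above can be made concrete with a little arithmetic. The sketch below assumes the constants used in the open-source implementation (16 kHz sampling, a hop of 160 samples between spectrogram frames, 30-second windows). These values are not stated in this article, and the helper name `chunk_plan` is purely illustrative.

```python
import math

# Assumed constants, taken from the open-source Whisper code rather than this article.
SAMPLE_RATE = 16_000   # samples per second after resampling
CHUNK_SECONDS = 30     # length of each window fed to the encoder
HOP_LENGTH = 160       # samples between successive spectrogram frames


def chunk_plan(duration_seconds: float) -> dict:
    """Describe how audio of a given length maps onto 30-second windows."""
    samples_per_chunk = SAMPLE_RATE * CHUNK_SECONDS
    frames_per_chunk = samples_per_chunk // HOP_LENGTH
    total_samples = int(duration_seconds * SAMPLE_RATE)
    n_chunks = max(1, math.ceil(total_samples / samples_per_chunk))
    return {
        "samples_per_chunk": samples_per_chunk,
        "frames_per_chunk": frames_per_chunk,
        "chunks": n_chunks,
        # The final window is padded up to the full 30 seconds.
        "padding_samples": n_chunks * samples_per_chunk - total_samples,
    }


plan = chunk_plan(95.0)  # a 95-second recording
print(plan)
```

This is an idealized view with fixed, non-overlapping windows. In practice the library's transcribe function may advance through the audio based on predicted timestamps, so windows do not always start at exact 30-second boundaries.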

Training on Multilingual Multitask Supervised Data

Whisper was trained on a massive dataset of 680,000 hours of supervised data collected from the web. This includes:

- Multilingual Data: Approximately 117,000 hours of the data cover 96 languages other than English, enhancing the model’s ability to generalize across languages.

- Diverse Acoustic Conditions: The dataset contains audio from various domains and environments, ensuring robustness to different accents, dialects, and background noises.

- Multitask Learning: By training on multiple tasks simultaneously (transcription, translation, language identification), Whisper learns shared representations that improve overall performance.
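One way to picture the multitask setup is as a short prefix of special tokens that tells the decoder which language and task to handle. The sketch below builds such a prefix as plain strings. The token spellings follow the open-source tokenizer as far as I am aware, while the function `build_prompt` is hypothetical: the real library assembles this sequence internally.

```python
from typing import List


def build_prompt(language: str, task: str = "transcribe", timestamps: bool = True) -> List[str]:
    """Return the special-token prefix that tells the decoder what to do."""
    if task not in ("transcribe", "translate"):
        raise ValueError(f"unknown task: {task!r}")
    tokens = ["<|startoftranscript|>", f"<|{language}|>", f"<|{task}|>"]
    if not timestamps:
        # Ask the decoder to emit text only, without timestamp tokens.
        tokens.append("<|notimestamps|>")
    return tokens


print(build_prompt("fr", task="translate", timestamps=False))
```

Because every task shares the same decoder and differs only in this prefix, a single model can switch between transcription and translation without separate heads.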

Applications and Use Cases

Transcription Services

- Virtual Meetings and Note-Taking: Automate transcription in platforms catering to general audio and specific industries like education, healthcare, journalism, and legal services.

- Content Creation: Generate transcripts for podcasts, videos, and live streams to enhance accessibility and provide text references.

Language Translation

- Global Communication: Translate speech in one language to English text, facilitating cross-lingual communication.

- Language Learning Tools: Assist learners in understanding pronunciation and meaning in different languages.

AI Automation and Chatbots

- Voice-Enabled Chatbots: Integrate Whisper into chatbots to allow voice interactions, enhancing user experience.

- AI Assistants: Develop assistants that can understand and process spoken commands in various languages.

Accessibility Enhancements

- Closed Captioning: Generate captions for video content, aiding those with hearing impairments.

- Assistive Technologies: Enable devices to transcribe and translate speech for users needing language support.

Call Centers and Customer Support

- Real-Time Transcription: Provide agents with real-time transcripts of customer calls for better service.

- Sentiment Analysis: Analyze transcribed text to gauge customer sentiment and improve interactions.

Advantages of OpenAI Whisper

Multilingual Support

With coverage of 99 languages, Whisper stands out in its ability to handle diverse linguistic inputs. This multilingual capacity makes it suitable for global applications and services targeting international audiences.

High Accuracy and Robustness

Trained on extensive supervised data, Whisper achieves high accuracy rates in transcription tasks. Its robustness to different accents, dialects, and background noises makes it reliable in various real-world scenarios.

Versatility in Tasks

Beyond transcription, Whisper can perform:

- Language Identification: Detects the spoken language without prior input.

- Speech Translation: Translates speech from one language to English text.

- Timestamp Generation: Provides phrase-level timestamps in transcriptions.

Open-Source Availability

Released as open-source software, Whisper allows developers to:

- Customize and Fine-Tune: Adjust the model for specific tasks or domains.

- Integrate into Applications: Embed Whisper into products and services under its permissive MIT license.

- Contribute to the Community: Enhance the model and share improvements.

Limitations and Considerations

Computational Requirements

- Resource Intensive: Larger models require significant computational power and memory (up to 10 GB VRAM for the largest model).

- Processing Time: Transcription speed may vary, with larger models processing slower than smaller ones.

Prone to Hallucinations

- Inaccurate Transcriptions: Whisper may sometimes produce text that wasn’t spoken, known as hallucinations. This is more likely in certain languages or with poor audio quality.

Limited Support for Non-English Languages

- Data Bias: A significant portion of the training data is in English, potentially affecting accuracy in less-represented languages.

- Fine-Tuning Needed: Additional training may be required to improve performance in specific languages or dialects.

Input Limitations

- Audio Length: Whisper processes audio in 30-second chunks, which may complicate transcribing longer continuous audio.

- File Size Restrictions: The open-source model may have limitations on input file sizes and formats.

OpenAI Whisper in AI Automation and Chatbots

Enhancing User Interactions

By integrating Whisper into chatbots and AI assistants, developers can enable:

- Voice Commands: Allowing users to interact using speech instead of text.

- Multilingual Support: Catering to users who prefer or require different languages.

- Improved Accessibility: Assisting users with disabilities or those who are unable to use traditional input methods.

Streamlining Workflows

- Automated Transcriptions: Reducing manual effort in note-taking and record-keeping.

- Data Analysis: Converting spoken content into text for analysis, monitoring, and insights.

Examples in Practice

- Virtual Meeting Bots: Tools that join online meetings to transcribe discussions in real-time.

- Customer Service Bots: Systems that understand and respond to spoken requests, improving customer experience.

- Educational Platforms: Applications that transcribe lectures or provide translations for students.

Alternatives to OpenAI Whisper

Open-Source Alternatives

- Mozilla DeepSpeech: An open-source ASR engine allowing custom model training.

- Kaldi: A toolkit widely used in both research and industry for speech recognition.

- Wav2vec: Meta AI’s system for self-supervised speech processing.

Commercial APIs

- Google Cloud Speech-to-Text: Offers speech recognition with comprehensive language support.

- Microsoft Azure AI Speech: Provides speech services with customization options.

- AWS Transcribe: Amazon’s speech recognition service with features like custom vocabulary.

Specialized Providers

- Gladia: Offers a hybrid and enhanced Whisper architecture with additional capabilities.

- AssemblyAI: Provides speech-to-text APIs with features like content moderation.

- Deepgram: Offers real-time transcription with custom model training options.

Factors to Consider When Choosing Whisper

Accuracy and Speed

- Trade-Offs: Larger models offer higher accuracy but require more resources and are slower.

- Testing: Assess performance with real-world data relevant to your application.

Volume of Audio

- Scalability: Consider hardware and infrastructure needs for processing large volumes.

- Batch Processing: Implement methods to handle large datasets efficiently.

Advanced Features

- Additional Functionalities: Evaluate if features like live transcription or speaker diarization are needed.

- Customization: Determine the effort required to implement additional features.

Language Support

- Target Languages: Verify the model’s performance in the languages relevant to your application.

- Fine-Tuning: Plan for potential additional training for less-represented languages.

Expertise and Resources

- Technical Expertise: Ensure your team has the skills to implement and adapt the model.

- Infrastructure: Evaluate the hardware requirements and hosting capabilities.

Cost Considerations

- Open-Source vs. Commercial: Balance the initial cost savings of open-source with potential long-term expenses in maintenance and scaling.

- Total Cost of Ownership: Consider hardware, development time, and ongoing support costs.

How is Whisper Used in Python?

Whisper is implemented as a Python library, allowing seamless integration into Python-based projects. Using Whisper in Python involves setting up the appropriate environment, installing necessary dependencies, and utilizing the library’s functions to transcribe or translate audio files.

Setting Up Whisper in Python

Before using Whisper, you need to prepare your development environment by installing Python, PyTorch, FFmpeg, and the Whisper library itself.

Prerequisites

- Python: Version 3.8 to 3.11 is recommended.

- PyTorch: A deep learning framework required to run the Whisper model.

- FFmpeg: A command-line tool for handling audio and video files.

- Whisper Library: The Python package provided by OpenAI.

Step 1: Install Python and PyTorch

If you don’t have Python installed, download it from the official website. To install PyTorch, you can use pip:

pip install torch

Alternatively, visit the PyTorch website for specific installation instructions based on your operating system and Python version.

Step 2: Install FFmpeg

Whisper requires FFmpeg to process audio files. Install FFmpeg using the appropriate package manager for your operating system.

Ubuntu/Debian:

sudo apt update && sudo apt install ffmpeg

macOS (with Homebrew):

brew install ffmpeg

Windows (with Chocolatey):

choco install ffmpeg

Step 3: Install the Whisper Library

Install the Whisper Python package using pip:

pip install -U openai-whisper

To install the latest version directly from the GitHub repository:

pip install git+https://github.com/openai/whisper.git

Note for Windows Users

Ensure that Developer Mode is enabled:

- Go to Settings.

- Navigate to Privacy & Security > For Developers.

- Turn on Developer Mode.

Available Models and Specifications

Whisper offers several models that vary in size and capabilities. The models range from tiny to large, each balancing speed and accuracy differently.

| Size | Parameters | English-only Model | Multilingual Model | Required VRAM | Relative Speed |
|---|---|---|---|---|---|
| tiny | 39 M | tiny.en | tiny | ~1 GB | ~32x |
| base | 74 M | base.en | base | ~1 GB | ~16x |
| small | 244 M | small.en | small | ~2 GB | ~6x |
| medium | 769 M | medium.en | medium | ~5 GB | ~2x |
| large | 1550 M | N/A | large | ~10 GB | 1x |

Choosing the Right Model

- English-only Models (.en): Optimized for English transcription, offering improved performance for English audio.

- Multilingual Models: Capable of transcribing multiple languages, suitable for global applications.

- Model Size: Larger models provide higher accuracy but require more computational resources. Select a model that fits your hardware capabilities and performance requirements.
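As a rough illustration of matching a model to your hardware, the sketch below picks the largest model whose approximate VRAM figure from the table above fits a given budget. The helper `pick_model` and its parameters are hypothetical, and the VRAM numbers are the article's approximations, so treat the result as a starting point rather than a guarantee.

```python
# (name, approximate VRAM in GB) copied from the table above, smallest first.
MODEL_VRAM_GB = [("tiny", 1), ("base", 1), ("small", 2), ("medium", 5), ("large", 10)]


def pick_model(available_vram_gb: float, english_only: bool = False) -> str:
    """Return the largest model whose approximate VRAM need fits the budget."""
    fitting = [name for name, need in MODEL_VRAM_GB if need <= available_vram_gb]
    if not fitting:
        raise ValueError("No model in the table fits the given VRAM budget")
    name = fitting[-1]
    # The table lists no English-only variant for the large model.
    if english_only and name != "large":
        name += ".en"
    return name


print(pick_model(6))                     # medium
print(pick_model(2, english_only=True))  # small.en
```

The returned name can be passed to whisper.load_model(). It is still worth testing the chosen model on representative audio, since the smallest model that meets your accuracy needs will also be the fastest.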

Using Whisper in Python

After setting up your environment and installing the necessary components, you can start using Whisper in your Python projects.

Importing the Library and Loading a Model

Begin by importing the Whisper library and loading a model:

import whisper

# Load the desired model

model = whisper.load_model("base")

Replace "base" with the model name that suits your application.

Transcribing Audio Files

Whisper provides a straightforward transcribe function to convert audio files into text.

Example: Transcribing an English Audio File

# Transcribe the audio file

result = model.transcribe("path/to/english_audio.mp3")

# Print the transcription

print(result["text"])

Explanation

- model.transcribe(): Processes the audio file and outputs a dictionary containing the transcription and other metadata.

- result["text"]: Accesses the transcribed text from the result.
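Besides "text", the dictionary returned by model.transcribe() also carries a "segments" list with start and end times, and a "language" entry. The sketch below formats such segments for display. It runs on a hand-written stand-in dictionary so that it works without a model, and the helper name `format_segments` is an illustrative assumption.

```python
def format_segments(result: dict) -> str:
    """Render each segment of a transcription result as '[start -> end] text'."""
    lines = []
    for seg in result.get("segments", []):
        lines.append(f"[{seg['start']:.2f}s -> {seg['end']:.2f}s] {seg['text'].strip()}")
    return "\n".join(lines)


# Hand-written stand-in for the dictionary returned by model.transcribe().
example_result = {
    "text": " Hello there. How are you?",
    "language": "en",
    "segments": [
        {"start": 0.0, "end": 1.4, "text": " Hello there."},
        {"start": 1.4, "end": 2.9, "text": " How are you?"},
    ],
}
print(format_segments(example_result))
```

With a real model, the same helper can be called as format_segments(model.transcribe("path/to/english_audio.mp3")).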

Translating Audio to English

Whisper can translate audio from various languages into English.

Example: Translating Spanish Audio to English

# Transcribe and translate Spanish audio to English

result = model.transcribe("path/to/spanish_audio.mp3", task="translate")

# Print the translated text

print(result["text"])

Explanation

task="translate": Instructs the model to translate the audio into English rather than transcribe it verbatim.

Specifying the Language

While Whisper can automatically detect the language, specifying it can improve accuracy and speed.

Example: Transcribing French Audio

# Transcribe French audio by specifying the language

result = model.transcribe("path/to/french_audio.wav", language="fr")

# Print the transcription

print(result["text"])

Detecting the Language of Audio

Whisper can identify the language spoken in an audio file using the detect_language method.

Example: Language Detection

# Load and preprocess the audio

audio = whisper.load_audio("path/to/unknown_language_audio.mp3")

audio = whisper.pad_or_trim(audio)

# Convert to log-Mel spectrogram

mel = whisper.log_mel_spectrogram(audio).to(model.device)

# Detect language

_, probs = model.detect_language(mel)

language = max(probs, key=probs.get)

print(f"Detected language: {language}")

Explanation

- whisper.load_audio(): Loads the audio file.

- whisper.pad_or_trim(): Adjusts the audio length to fit the model’s input requirements.

- whisper.log_mel_spectrogram(): Converts audio to the format expected by the model.

- model.detect_language(): Returns probabilities for each language, identifying the most likely language spoken.
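Taking only the single most likely language hides how confident the model is. A minimal sketch for inspecting the top candidates is shown below. It operates on a made-up probability dictionary of the same shape as the one returned by detect_language, and the helper name `top_languages` is hypothetical.

```python
def top_languages(probs: dict, k: int = 3) -> list:
    """Return the k most probable (language_code, probability) pairs."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    return ranked[:k]


# Made-up probabilities standing in for the dict returned by detect_language().
example_probs = {"en": 0.03, "es": 0.91, "pt": 0.05, "it": 0.01}
for code, p in top_languages(example_probs):
    print(f"{code}: {p:.0%}")
```

If the top two probabilities are close, it may be safer to ask the user for the language or to pass it explicitly to transcribe.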

Advanced Usage and Customization

For more control over the transcription process, you can use lower-level functions and customize decoding options.

Using the decode Function

The decode function allows you to specify options such as language, task, and whether to include timestamps.

Example: Custom Decoding Options

# Set decoding options

options = whisper.DecodingOptions(language="de", without_timestamps=True)

# Decode the audio

result = whisper.decode(model, mel, options)

# Print the recognized text

print(result.text)
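One detail worth checking when calling the lower-level decode function is numeric precision. As far as I am aware, DecodingOptions has an fp16 field that defaults to half precision, which commonly fails on CPU. The sketch below builds the keyword arguments based on the device string. The helper `decoding_kwargs` is hypothetical, and the CPU behavior is an assumption about typical PyTorch builds.

```python
from typing import Optional


def decoding_kwargs(device: str, language: Optional[str] = None) -> dict:
    """Build keyword arguments for whisper.DecodingOptions for a given device."""
    kwargs = {
        "without_timestamps": True,
        # Assumption: half precision is only reliable on a CUDA GPU,
        # so fall back to float32 everywhere else.
        "fp16": device.startswith("cuda"),
    }
    if language is not None:
        kwargs["language"] = language
    return kwargs


print(decoding_kwargs("cpu", language="de"))
# Intended use (requires the whisper package and a loaded model):
#   options = whisper.DecodingOptions(**decoding_kwargs(str(model.device), "de"))
```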

Processing Live Audio Input

You can integrate Whisper to transcribe live audio input from a microphone.

Example: Transcribing Live Microphone Input

import whisper

import sounddevice as sd

# Load the model

model = whisper.load_model("base")

# Record audio from the microphone

duration = 5 # seconds

fs = 16000 # Sampling rate

print("Recording...")

audio = sd.rec(int(duration * fs), samplerate=fs, channels=1, dtype='float32')

sd.wait() # Wait until recording is finished

print("Recording finished.")

# Convert the NumPy array to a format compatible with Whisper

audio = audio.flatten()

# Transcribe the audio

result = model.transcribe(audio)

# Print the transcription

print(result["text"])

Explanation

- sounddevice: A library used to capture audio from the microphone.

- Recording Parameters: Adjust duration and fs to control the recording length and sample rate.

- Audio Conversion: Flatten the audio array to match the expected input format.

Handling Different Audio Formats

Whisper supports various audio file formats. Ensure the files are correctly loaded and processed.

Example: Transcribing a WAV File

result = model.transcribe("path/to/audio_file.wav")

print(result["text"])

Use Cases in AI Automation and Chatbots

Whisper’s capabilities open up numerous possibilities in AI automation and chatbot development.

Voice-Enabled Chatbots

By integrating Whisper with chatbot platforms, you can create chatbots that understand and respond to voice inputs.

Workflow:

- User Speaks: The user provides input through speech.

- Speech Recognition: Whisper transcribes the speech to text.

- Processing: The chatbot processes the text input and generates a response.

- Response Delivery: The response is delivered back to the user, either as text or synthesized speech.

Example:

import whisper

import pyttsx3 # For speech synthesis

# Initialize text-to-speech engine

tts_engine = pyttsx3.init()

# Load Whisper model

model = whisper.load_model("base")

# Capture audio input (as shown in previous examples)

# ...

# Transcribe audio input

result = model.transcribe("path/to/audio_input.wav")

user_input = result["text"]

# Process user input with chatbot logic (placeholder)

response_text = "Here is the response to your query."

# Synthesize speech from response

tts_engine.say(response_text)

tts_engine.runAndWait()

Transcription Services

Whisper can be employed to develop transcription services for:

- Meeting Notes: Automatically transcribe meetings and generate summaries.

- Lecture Captions: Provide real-time captions for educational content.

- Media Subtitles: Generate subtitles for videos and podcasts.

Language Learning Applications

Develop tools that assist language learners by transcribing and translating speech, helping them improve pronunciation and comprehension.

Features:

- Pronunciation Feedback: Compare the learner’s speech with correct pronunciations.

- Vocabulary Building: Transcribe and highlight new words.

- Translation Practice: Translate spoken phrases to and from the target language.

Voice-Controlled Automation Systems

Implement voice commands for controlling systems and devices.

Applications:

- Smart Home Devices: Control lights, thermostats, and appliances using voice commands.

- Accessibility Tools: Assist users with disabilities by providing voice interfaces.

- Automated Assistants: Facilitate hands-free operation in industrial or medical settings.

Performance Considerations

When integrating Whisper into applications, consider the trade-offs between model size, accuracy, and computational requirements.

Model Size and Accuracy

- Larger Models (medium, large): Offer higher accuracy but require more VRAM and are slower.

- Smaller Models (tiny, base): Faster and require less memory but may have reduced accuracy, especially with complex or noisy inputs.

Hardware Requirements

Ensure your hardware meets the VRAM requirements for the chosen model:

- GPUs: Utilize GPUs for faster inference times.

- CPUs: Whisper can run on CPUs but with significantly longer processing times.

Inference Speed

- Relative Speed: Indicates how much faster a model is compared to the large model.

- Batch Processing: Process multiple audio files in a batch to optimize resource usage.
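A simple form of batch processing is to load the model once and reuse it for every file in a folder, as sketched below. The helper names and the extension list are illustrative assumptions. Files are processed one after another, so this saves model-loading time rather than running several files through the GPU at once.

```python
from pathlib import Path

AUDIO_EXTENSIONS = {".mp3", ".wav", ".m4a", ".flac"}  # illustrative, not exhaustive


def find_audio_files(folder: str) -> list:
    """Return audio files in a folder (non-recursive), sorted by name."""
    return sorted(
        p for p in Path(folder).iterdir()
        if p.is_file() and p.suffix.lower() in AUDIO_EXTENSIONS
    )


def transcribe_folder(folder: str, model_name: str = "base") -> dict:
    """Transcribe every audio file in a folder, loading the model only once."""
    import whisper  # imported lazily so find_audio_files works without it

    model = whisper.load_model(model_name)
    return {p.name: model.transcribe(str(p))["text"] for p in find_audio_files(folder)}
```

Calling transcribe_folder("path/to/recordings") would return a dictionary mapping each file name to its transcript.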

Optimization Tips

- GPU Utilization: Move the model to GPU if available: model.to('cuda')

- Audio Length: Trim or segment long audio files to reduce processing time.

- Language Specification: Specify the language to improve speed and accuracy.

Error Handling and Best Practices

Implement robust error handling to ensure your application handles unexpected situations gracefully.

Common Errors

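- FileNotFoundError: A required file is missing. With Whisper this is most often the ffmpeg executable not being on the PATH; a missing audio file is usually reported by ffmpeg as a RuntimeError instead.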

- RuntimeError: Insufficient memory or hardware limitations.

- ValueError: Incorrect parameter values or unsupported audio formats.

Handling Exceptions

Use try-except blocks to catch and handle exceptions.

Example:

try:

    result = model.transcribe("path/to/audio.wav")

except FileNotFoundError:

    print("File not found (audio file or the ffmpeg executable).")

except RuntimeError as e:

    print(f"Runtime error: {e}")

except Exception as e:

    print(f"An unexpected error occurred: {e}")

Logging and Monitoring

- Logging: Keep logs of transcriptions and errors for debugging and analytics.

- Monitoring: Monitor resource usage to prevent crashes due to memory overflows.
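The logging advice above can be sketched with the standard logging module. The wrapper below records how long each transcription takes and logs the full traceback on failure. The function name `transcribe_with_logging` and the logger name are illustrative assumptions, and it works with any object that exposes a transcribe method.

```python
import logging
import time

logger = logging.getLogger("transcription")


def transcribe_with_logging(model, path: str, **options):
    """Call model.transcribe(), logging the duration on success and the error on failure."""
    started = time.perf_counter()
    try:
        result = model.transcribe(path, **options)
    except Exception:
        # logger.exception records the message together with the traceback.
        logger.exception("Transcription failed for %s", path)
        return None
    elapsed = time.perf_counter() - started
    logger.info("Transcribed %s in %.1fs (%d characters)", path, elapsed, len(result["text"]))
    return result
```

To see the messages, configure logging once at startup, for example with logging.basicConfig(level=logging.INFO).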

Examples and Use Cases in Detail

Example 1: Transcribing a Podcast Episode

Transcribe a podcast episode to create a textual version for accessibility or content repurposing.

import whisper

# Load the model

model = whisper.load_model("medium")

# Transcribe the podcast

result = model.transcribe("path/to/podcast_episode.mp3")

# Save the transcript

with open("podcast_transcript.txt", "w", encoding="utf-8") as f:

    f.write(result["text"])

Example 2: Creating Subtitles for a Video

Generate subtitles for a video file.

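import whisper

def srt_time(seconds):

    # Format seconds as HH:MM:SS,mmm, the timestamp layout SRT expects

    ms = int(round(seconds * 1000))

    hours, ms = divmod(ms, 3_600_000)

    minutes, ms = divmod(ms, 60_000)

    secs, ms = divmod(ms, 1000)

    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"

# Load the model

model = whisper.load_model("small")

# Transcribe the video

result = model.transcribe("path/to/video_file.mp4")

# Save the subtitles in SRT format

with open("video_subtitles.srt", "w", encoding="utf-8") as f:

    for index, segment in enumerate(result["segments"], start=1):

        # Each SRT entry is: sequence number, time range, text, blank line

        start = srt_time(segment["start"])

        end = srt_time(segment["end"])

        text = segment["text"].strip()

        f.write(f"{index}\n{start} --> {end}\n{text}\n\n")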

Example 3: Real-Time Translation Assistant

Develop an application that listens to speech in one language and provides real-time translation into English.

import whisper

import sounddevice as sd

# Load the model

model = whisper.load_model("base")

def real_time_translation(duration=5):

    fs = 16000 # Sampling rate

    print("Listening...")

    audio = sd.rec(int(duration * fs), samplerate=fs, channels=1, dtype='float32')

    sd.wait()

    print("Processing...")

    # Flatten the audio array

    audio = audio.flatten()

    # Transcribe and translate the audio

    result = model.transcribe(audio, task="translate")

    # Print the translation

    print("Translation:")

    print(result["text"])

# Run the translation

real_time_translation()

Example 4: Voice Command Recognition for Automation

Implement voice commands to control an application or device.

import whisper

# Load the model

model = whisper.load_model("tiny")

# List of recognized commands

commands = {

    "turn on the lights": "lights_on",

    "turn off the lights": "lights_off",

    "play music": "play_music",

    "stop music": "stop_music"

}

# Transcribe voice command

result = model.transcribe("path/to/voice_command.wav")

# Whisper output usually has a leading space and punctuation, so normalize it

command_text = result["text"].lower().strip(" .,!?")

# Match command

action = commands.get(command_text, None)

if action:

    print(f"Executing action: {action}")

    # Execute the corresponding action

    # For example, send a signal to the smart home system

else:

    print("Command not recognized.")
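Exact dictionary lookup is brittle, because a transcript rarely matches a command string character for character. One possible refinement, sketched below with the standard library's difflib, is to normalize the transcript and accept the closest known command above a similarity cutoff. The helper names and the 0.8 cutoff are illustrative assumptions that would need tuning on real recordings.

```python
import difflib
import string

COMMANDS = {
    "turn on the lights": "lights_on",
    "turn off the lights": "lights_off",
    "play music": "play_music",
    "stop music": "stop_music",
}


def normalize(text: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace."""
    cleaned = text.lower().translate(str.maketrans("", "", string.punctuation))
    return " ".join(cleaned.split())


def match_command(transcript: str, cutoff: float = 0.8):
    """Return the action for the closest known command, or None if nothing is close."""
    candidates = difflib.get_close_matches(
        normalize(transcript), list(COMMANDS), n=1, cutoff=cutoff
    )
    return COMMANDS[candidates[0]] if candidates else None


print(match_command(" Turn on the light."))   # lights_on
print(match_command(" What's the weather?"))  # None
```

Fuzzy matching trades precision for tolerance: similar commands such as "turn on" and "turn off" can be confused, so for actions with real-world consequences a stricter cutoff or an explicit confirmation step is advisable.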

Integrating Whisper with AI Chatbots

Whisper can enhance chatbots by enabling voice interaction, making them more accessible and user-friendly.

Steps to Integrate Whisper with a Chatbot

- Capture Voice Input: Use a microphone to record the user’s speech.

- Transcribe Speech: Use Whisper to convert speech to text.

- Process Input: Pass the text to the chatbot’s natural language processing (NLP) engine.

- Generate Response: The chatbot formulates a response.

- Text-to-Speech Conversion: Convert the chatbot’s text response back into speech using a text-to-speech (TTS) engine.

- Play Audio Response: Output the audio response to the user.

Example: Simple Voice Chatbot Implementation

import whisper

import pyttsx3

import speech_recognition as sr

# Initialize recognition and TTS engines

recognizer = sr.Recognizer()

tts_engine = pyttsx3.init()

# Load Whisper model

model = whisper.load_model("base")

def voice_chatbot():

    with sr.Microphone() as source:

        print("Please say something...")

        audio_data = recognizer.listen(source)

    print("Recognizing...")

    # Save the audio to a WAV file

    with open("user_input.wav", "wb") as f:

        f.write(audio_data.get_wav_data())

    # Transcribe the audio using Whisper

    result = model.transcribe("user_input.wav")

    user_input = result["text"]

    print(f"User said: {user_input}")

    # Process input with chatbot logic (placeholder)

    response = f"You said: {user_input}. How can I assist you further?"

    # Vocalize the response

    tts_engine.say(response)

    tts_engine.runAndWait()

# Run the chatbot

voice_chatbot()

Explanation

- Speech Recognition: Captures the user’s speech via the microphone.

- Whisper Transcription: Converts the audio input to text.

- Chatbot Logic: Placeholder logic echoes the user’s input.

- Text-to-Speech: Converts the chatbot’s response back to speech.

Best Practices for Using Whisper

- Specify Language When Possible: Improves accuracy and reduces processing time.

- Use Appropriate Model Size: Balance between resource constraints and required accuracy.

- Optimize Audio Quality: Clear audio inputs yield better transcription results.

- Handle Exceptions: Anticipate and manage potential errors gracefully.

- Keep Dependencies Updated: Ensure that all libraries and tools are up-to-date.

Research on OpenAI Whisper

OpenAI Whisper is a significant advancement in automatic speech recognition (ASR) technology. Several scientific studies have explored its applications and enhancements across different languages and dialects.

- Whispering in Norwegian: Navigating Orthographic and Dialectic Challenges: This study introduces NB-Whisper, a version of OpenAI’s Whisper fine-tuned for Norwegian ASR. It demonstrates significant improvements in converting spoken Norwegian into text and in translating other languages into Norwegian. The research highlights improvements from a Word Error Rate (WER) of 10.4 to 6.6 on the Fleurs Dataset and from 6.8 to 2.2 on the NST dataset. This showcases the potential of fine-tuning Whisper for specific linguistic challenges.

- Self-Supervised Models in Automatic Whispered Speech Recognition: This paper addresses the challenge of recognizing whispered speech using a novel approach with the self-supervised WavLM model. It highlights the limitations of conventional ASR systems, including OpenAI Whisper, in dealing with whispered speech due to its unique acoustic properties. The study achieved a reduced WER of 9.22% with WavLM, compared to Whisper’s 18.8%, emphasizing the need for tailored acoustic modeling.

- Adapting OpenAI’s Whisper for Speech Recognition on Code-Switch Mandarin-English SEAME and ASRU2019 Datasets: This research explores the adaptation of Whisper for code-switching between Mandarin and English. It finds that minimal adaptation data (as low as 1 to 10 hours) can yield significant performance improvements. The study also examines language prompting strategies, finding that adaptation with code-switch data consistently enhances Whisper’s performance.

- Evaluating OpenAI’s Whisper ASR for Punctuation Prediction and Topic Modeling: This paper evaluates Whisper’s capabilities in punctuation prediction and topic modeling for Portuguese language applications, particularly in the context of life histories recorded by the Museum of the Person. While previous ASR models had limitations in punctuation accuracy, Whisper demonstrates improvements, indicating its potential for enhancing human-machine interaction in Portuguese.
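The studies above compare systems by Word Error Rate (WER): the number of word substitutions, deletions, and insertions needed to turn the system output into the reference transcript, divided by the number of reference words. A minimal sketch of that calculation follows. It splits on whitespace and does no text normalization, whereas published evaluations typically normalize case and punctuation first, so the helper is illustrative rather than a reproduction of any paper's scoring.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # previous[j]: edit distance between the reference words processed so far
    # and the first j hypothesis words.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            current.append(min(
                previous[j] + 1,         # deletion
                current[j - 1] + 1,      # insertion
                previous[j - 1] + cost,  # substitution or match
            ))
        previous = current
    return previous[-1] / len(ref)


wer = word_error_rate("the cat sat on the mat", "the cat sit on mat")
print(f"WER: {wer:.1%}")
```

In this example one word is substituted and one is deleted out of six reference words, giving a WER of about 33.3%.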
