Word embeddings are dense representations of words in a continuous vector space in which semantically similar words are mapped to nearby points. Unlike one-hot encoding, which produces sparse vectors as long as the vocabulary itself, embeddings are learned from the contexts in which words appear, so they capture semantic and syntactic relationships between words. Because words with similar meanings receive similar vectors, embeddings are useful across many natural language processing (NLP) tasks.

Importance in Natural Language Processing (NLP)

Word embeddings are pivotal in NLP for several reasons:

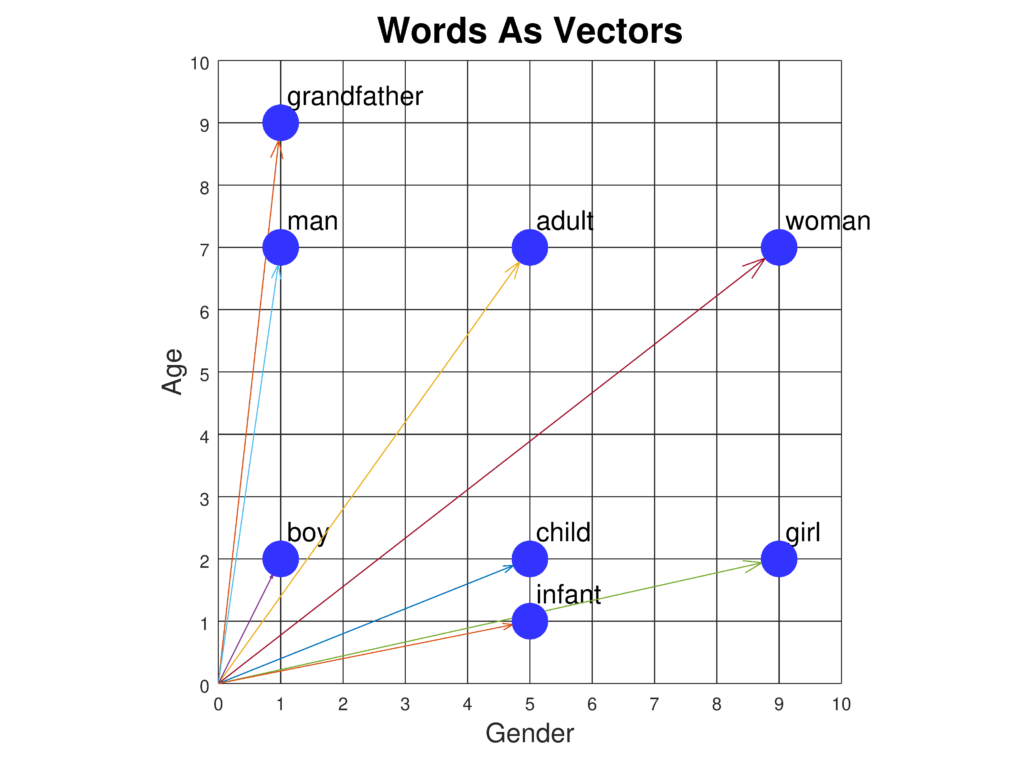

- Semantic Understanding: They enable models to capture the meaning of words and their relationships to one another, allowing for more nuanced understanding of language. For instance, embeddings can capture analogies such as “king is to queen as man is to woman.”

- Dimensionality Reduction: Representing words in a dense, lower-dimensional space reduces the computational burden and improves the efficiency of processing large vocabularies.

- Transfer Learning: Pre-trained embeddings can be utilized across different NLP tasks, reducing the need for extensive task-specific data and computational resources.

- Handling Large Vocabularies: Every word maps to a vector of the same fixed size, however large the vocabulary grows, and subword-based methods such as FastText extend coverage to rare words.
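
The analogy mentioned above can be illustrated with simple vector arithmetic. The sketch below is only a toy: the vectors are hand-picked rather than learned, the three axes (royalty, gender, food) are hypothetical, and the `analogy` helper is an illustrative name, not part of any library. It uses Euclidean distance for the nearest-neighbor step; real systems more often use cosine similarity.

```python
import math

# Hand-picked toy vectors, NOT learned embeddings.
# Hypothetical axes: [royalty, gender, food].
TOY_VECTORS = {
    "king":  [0.9,  0.8, 0.0],
    "queen": [0.9, -0.8, 0.0],
    "man":   [0.1,  0.8, 0.0],
    "woman": [0.1, -0.8, 0.0],
    "apple": [0.0,  0.0, 1.0],
}

def analogy(a, b, c, vectors=TOY_VECTORS):
    """Answer 'a is to b as c is to ?' by finding the word nearest to
    vec(b) - vec(a) + vec(c), excluding the three input words."""
    target = [vb - va + vc
              for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    candidates = (w for w in vectors if w not in {a, b, c})
    return min(candidates, key=lambda w: math.dist(vectors[w], target))

# "king is to queen as man is to ?"
print(analogy("king", "queen", "man"))  # woman
```

With embeddings trained on real text the match is rarely this exact, so the result is usually reported as the nearest neighbor of the computed point.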

Key Concepts and Techniques

- Vector Representations: Words are mapped to dense vectors, typically with a few hundred dimensions. The distance and direction between these vectors reflect the semantic similarity and relationships between words.

- Semantic Meaning: Embeddings encapsulate the semantic essence of words, enabling models to perform sentiment analysis, entity recognition, and machine translation with greater accuracy.

- Dimensionality Reduction: By condensing high-dimensional data into more manageable formats, embeddings enhance the computational efficiency of NLP models.

- Neural Networks: Many embeddings are learned with shallow neural networks, as in Word2Vec. Others, such as GloVe, fit a log-bilinear model to word co-occurrence counts. Both approaches learn from extensive text corpora.
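
To make the idea of proximity concrete, the following sketch compares one-hot vectors with dense vectors using cosine similarity. The three-word vocabulary and the two-dimensional dense values are made up for illustration; only the `cosine` formula itself is standard.

```python
import math

def cosine(u, v):
    """Cosine similarity: dot product divided by the product of the norms."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm

vocab = ["cat", "dog", "car"]

# One-hot: a 1 in the word's own position, 0 everywhere else.
one_hot = {
    w: [1.0 if i == j else 0.0 for j in range(len(vocab))]
    for i, w in enumerate(vocab)
}

# Dense toy vectors (hand-picked, not learned).
dense = {"cat": [0.9, 0.1], "dog": [0.8, 0.2], "car": [0.1, 0.9]}

# Distinct one-hot vectors are always orthogonal, so similarity is 0.
print(cosine(one_hot["cat"], one_hot["dog"]))

# Dense vectors can express that "cat" is closer to "dog" than to "car".
print(cosine(dense["cat"], dense["dog"]) > cosine(dense["cat"], dense["car"]))
```

One-hot encoding treats every pair of words as equally unrelated, which is the limitation dense embeddings are meant to address.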

Common Word Embedding Techniques

- Word2Vec: Developed at Google, Word2Vec comes in two variants: Continuous Bag of Words (CBOW), which predicts a word from its surrounding context, and Skip-gram, which predicts the surrounding context from a word.

- GloVe (Global Vectors for Word Representation): Derives embeddings from global word co-occurrence statistics, fitting word vectors so that their dot products approximate the logarithm of how often the words co-occur. This is closely related to factorizing the co-occurrence matrix.

- FastText: Enhances Word2Vec by incorporating subword (character n-gram) information, enabling better handling of rare and out-of-vocabulary words.

- TF-IDF (Term Frequency-Inverse Document Frequency): A frequency-based weighting scheme rather than a learned embedding. It scores how important a word is to a document relative to a corpus and yields sparse vectors, without the semantic depth of neural embeddings.
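
Two of the ideas above can be sketched without any training code: how Skip-gram turns text into (target, context) pairs, and how FastText breaks a word into character n-grams. The function names `skipgram_pairs` and `char_ngrams` and their parameters are illustrative, not any library's API. The `<` and `>` boundary markers and the 3-to-6 n-gram range follow the description in the FastText paper; the sketch omits the extra whole-word token that FastText also keeps.

```python
def skipgram_pairs(tokens, window=2):
    """List every (target, context) pair within `window` positions."""
    pairs = []
    for i, target in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # a word is not its own context
                pairs.append((target, tokens[j]))
    return pairs

def char_ngrams(word, n_min=3, n_max=6):
    """Character n-grams of a word wrapped in boundary markers."""
    wrapped = f"<{word}>"
    grams = []
    for n in range(n_min, n_max + 1):
        for i in range(len(wrapped) - n + 1):
            grams.append(wrapped[i:i + n])
    return grams

print(skipgram_pairs(["the", "cat", "sat"], window=1))
# [('the', 'cat'), ('cat', 'the'), ('cat', 'sat'), ('sat', 'cat')]

print(char_ngrams("where", n_min=3, n_max=3))
# ['<wh', 'whe', 'her', 'ere', 're>']
```

A Skip-gram model would then be trained to predict the second element of each pair from the first. In FastText, a word's vector is built from the vectors of its n-grams, which is why an unseen word can still receive a representation.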

Use Cases in NLP

- Text Classification: Embeddings improve text classification by providing rich semantic representations, enhancing the accuracy of models in tasks like sentiment analysis and spam detection.

- Machine Translation: Embeddings support translation between languages by capturing semantic relationships that carry across them, which neural translation systems rely on.

- Named Entity Recognition (NER): Embeddings help identify and classify entities such as names, organizations, and locations by providing information about context and semantics.

- Information Retrieval and Search: Embeddings let search engines match queries to documents by meaning rather than exact wording, producing more relevant and context-aware results.

- Question Answering Systems: Embeddings improve the representation of both the query and its context, leading to more accurate and relevant responses.
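
As a rough illustration of the retrieval use case, the sketch below represents each text as the average of its word vectors and ranks documents by cosine similarity to the query. Averaging is one simple baseline among many, the vectors are hand-picked toy values rather than learned embeddings, and `embed`, `cosine`, and `rank` are illustrative helper names.

```python
import math

# Hand-picked toy vectors, NOT learned embeddings.
TOY_VECTORS = {
    "cheap": [0.9, 0.1], "affordable": [0.8, 0.2],
    "flights": [0.2, 0.9], "airfare": [0.3, 0.7],
    "pasta": [-0.8, -0.5], "recipe": [-0.7, -0.6],
}

def embed(text, vectors=TOY_VECTORS):
    """Average the vectors of known words; unknown words are skipped."""
    known = [vectors[t] for t in text.lower().split() if t in vectors]
    if not known:
        dim = len(next(iter(vectors.values())))
        return [0.0] * dim
    return [sum(col) / len(known) for col in zip(*known)]

def cosine(u, v):
    """Cosine similarity, defined as 0.0 when either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def rank(query, docs):
    """Sort documents from most to least similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)

print(rank("cheap flights", ["pasta recipe", "affordable airfare"]))
# ['affordable airfare', 'pasta recipe']
```

The top result shares no words with the query, which is the kind of match a purely keyword-based method would miss.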

Challenges and Limitations

- Polysemy: Static embeddings assign a single vector to each word, so they struggle with words that have multiple meanings. Contextual models such as BERT address this by producing a different vector for each occurrence of a word, depending on its context.

- Bias in Training Data: Embeddings can perpetuate biases present in the training data, impacting fairness and accuracy in applications.

- Scalability: Training embeddings on large corpora demands substantial computational resources, though subword vocabularies and lower-dimensional vectors can reduce memory and compute requirements.

Advanced Models and Developments

- BERT (Bidirectional Encoder Representations from Transformers): A transformer-based model that generates contextual word embeddings by attending to the entire sentence, both left and right of each word. It has improved results on many NLP tasks.

- GPT (Generative Pre-trained Transformer): A transformer-based language model trained to predict the next token. Its contextual embeddings allow it to generate coherent, contextually relevant, human-like text.

Research on Word Embeddings in NLP

- Learning Word Sense Embeddings from Word Sense Definitions

Qi Li, Tianshi Li, Baobao Chang (2016) propose a method to address the challenge of polysemous and homonymous words in word embeddings by creating one embedding per word sense using word sense definitions. Their approach leverages corpus-based training to achieve high-quality word sense embeddings. The experimental results show improvements in word similarity and word sense disambiguation tasks. The study demonstrates the potential of word sense embeddings in enhancing NLP applications.

- Neural-based Noise Filtering from Word Embeddings

Kim Anh Nguyen, Sabine Schulte im Walde, Ngoc Thang Vu (2016) introduce two models for improving word embeddings through noise filtering. They identify unnecessary information within traditional embeddings and propose unsupervised learning techniques to create word denoising embeddings. These models use a deep feed-forward neural network to enhance salient information while minimizing noise. Their results indicate superior performance of the denoising embeddings on benchmark tasks.

- A Survey On Neural Word Embeddings

Erhan Sezerer, Selma Tekir (2021) provide a comprehensive review of neural word embeddings, tracing their evolution and impact on NLP. The survey covers foundational theories and explores various types of embeddings, such as sense, morpheme, and contextual embeddings. The paper also discusses benchmark datasets and performance evaluations, highlighting the transformative effect of neural embeddings on NLP tasks.

- Improving Interpretability via Explicit Word Interaction Graph Layer

Arshdeep Sekhon, Hanjie Chen, Aman Shrivastava, Zhe Wang, Yangfeng Ji, Yanjun Qi (2023) focus on enhancing model interpretability in NLP through WIGRAPH, a neural network layer that builds a global interaction graph between words. This layer can be integrated into any NLP text classifier, improving both interpretability and prediction performance. The study underscores the importance of word interactions in understanding model decisions.

- Word Embeddings for Banking Industry

Avnish Patel (2023) explores the application of word embeddings in the banking sector, highlighting their role in tasks such as sentiment analysis and text classification. The study examines the use of both static word embeddings (e.g., Word2Vec, GloVe) and contextual models, emphasizing their impact on industry-specific NLP tasks.